Modern software development already relies on AI coding assistants that react to user inputs. Autonomous AI agents are developing rapidly and have the potential to revolutionize the field even further. This includes:

- handling tasks without prior strict rules,

- detecting anomalies,

- predicting and mitigating potential issues before they arise,

- providing valuable insights to novice and experienced devs.

These results can be achieved with intelligent, adaptive AI agents that enhance system resilience and accelerate project timelines.

In this guide, we'll explore AI agents, provide examples and show you how to create your own AI agent with n8n, a source-available AI-native workflow automation tool!

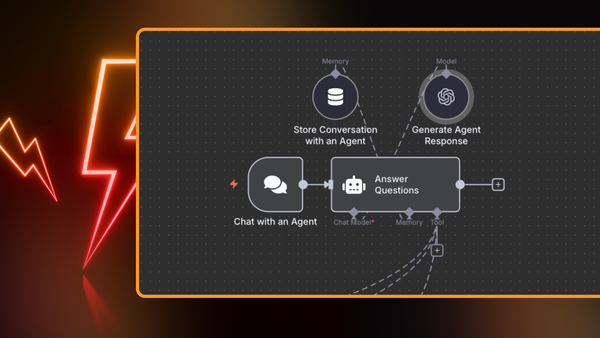

This is a sneak peek of workflows with AI agents that you'll be able to build by the end of this guide:

Let’s dive in!

Table of contents

- What are AI agents?

- How do AI agents work?

- What are the types of agents in AI?

- What are the benefits of AI agents?

- What are the key components of an AI agent?

- What are the AI agent examples?

- How to create an AI agent?

- FAQ

- Wrap up

- What’s next?

What are AI agents?

An AI agent is an autonomous system that receives data, makes rational decisions, and acts within its environment to achieve specific goals.

While a simple agent perceives its environment through sensors and acts on it through actuators, a true AI agent also includes a "reasoning engine". This engine autonomously makes rational decisions based on the state of the environment and the results of the agent's own actions. According to the textbook Artificial Intelligence: A Modern Approach (AIMA): “For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.”

Large Language Models and multimodal LLMs are at the core of modern AI agents as they provide a reasoning layer and can readily measure performance. Check the FAQ section for details.

The most advanced AI agents can also learn and adapt their behavior over time. Not all agents need this, but sometimes it’s mandatory:

This Atlas robot needs a little bit more practice. Source: https://www.youtube.com/watch?v=EezdinoG4mk

How do AI agents work?

Software AI agents operate through a combination of perception, reasoning and action. At their core, they use Large Language Models (LLMs) to understand inputs and make decisions, but the real power comes from the interaction of these elements:

Input processing

The agent receives information through various channels - direct user questions, system events, or data from external sources. We’ll explore different input types when discussing agent sensors later in this article.

Decision-making

Unlike simple chatbots, AI agents use multi-step prompting techniques to make decisions. Through chains of specialized prompts (reasoning, tool selection), agents can handle complex scenarios that are not possible with single-shot responses. We’ll examine this “reasoning engine” in detail in the components section.

Action execution

Modern LLMs generate structured outputs that serve as function calls to external systems. For a detailed look at how agents interact with external services and handle feedback loops, check our guide on building AI agentic workflows.

Learning and adaptation

Some agents can improve over time through various mechanisms - from simple feedback loops to sophisticated model updates. We’ll explore these learning approaches in detail in the FAQ section.

The exact implementation of these components depends on the purpose and complexity of the agent, as you’ll see in our examples of both human-activated and event-activated agents.
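To make the stages above more concrete, here is a minimal Python sketch of a perceive-decide-act loop. It is only an illustration: `fake_llm` is a hypothetical stand-in for a real model call, and the tool names in `TOOLS` are invented for this example.

```python
from typing import Callable

# Hypothetical tool registry: names and behaviour are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "shout": lambda text: text.upper(),
}

def fake_llm(user_input: str, observations: list[str]) -> dict:
    """Stand-in for an LLM call: returns a structured decision."""
    if not observations:
        # No tool result yet, so "decide" to call a tool first.
        return {"action": "word_count", "argument": user_input}
    # A tool result is available, so produce the final answer.
    return {"action": "finish",
            "argument": f"Your message has {observations[-1]} words."}

def run_agent(user_input: str, max_steps: int = 5) -> str:
    observations: list[str] = []                         # feedback from earlier actions
    for _ in range(max_steps):
        decision = fake_llm(user_input, observations)    # decision-making
        if decision["action"] == "finish":
            return decision["argument"]
        tool = TOOLS[decision["action"]]                  # action execution
        observations.append(tool(decision["argument"]))   # feedback loop
    return "Stopped: step limit reached."
```

Calling `run_agent("hello agent world")` goes through two iterations: one tool call, then a final answer built from the tool result. The step limit is a simple safeguard against a model that never decides to finish.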

What are the types of agents in AI?

The AIMA textbook discusses several main types of agent programs based on their capabilities:

Simple reflex agents

These agents are fairly straightforward - they make decisions based only on what they perceive at the moment, without considering the past. They do their job when the right decision can be made just by looking at the current situation.
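As a rough sketch, a simple reflex agent can be written as a list of condition-action rules that look only at the current percept. The thermostat scenario and the thresholds below are assumptions made up for illustration.

```python
# Condition-action rules: each rule inspects only the current percept.
RULES = [
    (lambda p: p["temperature_c"] > 30, "turn_on_cooling"),
    (lambda p: p["temperature_c"] < 15, "turn_on_heating"),
]

def simple_reflex_agent(percept: dict) -> str:
    """Return the action of the first matching rule; no memory is used."""
    for condition, action in RULES:
        if condition(percept):
            return action
    return "do_nothing"
```

Because the agent keeps no state, the same percept always produces the same action.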

Model-based reflex agents

Model-based reflex agents are a little more sophisticated. They keep track of what's happening behind the scenes, even if they can't observe it directly. They use a "transition model" to update their understanding of the world based on what they've seen and done before, and a "sensor model" that describes how the state of the world shows up in what they perceive.
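The sketch below illustrates the idea with the two-square vacuum world often used as a textbook toy example. The class and action names are our own, and the sensor model is deliberately trivial here: the percept is assumed to report the current square's status directly.

```python
class ModelBasedVacuumAgent:
    """Remembers the status of squares it cannot currently observe."""

    def __init__(self):
        # Internal state: what the agent believes about each square.
        self.model = {"A": None, "B": None}

    def act(self, percept):
        location, status = percept
        self.model[location] = status          # update beliefs from the percept
        if status == "dirty":
            self.model[location] = "clean"     # transition model: effect of "suck"
            return "suck"
        if all(s == "clean" for s in self.model.values()):
            return "no_op"                     # everything is believed to be clean
        return "move_right" if location == "A" else "move_left"
```

Unlike a simple reflex agent, this one can stop moving once it believes both squares are clean, even though it only ever sees one square at a time.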

Goal-based agents

Goal-based agents are all about achieving a specific goal. They think ahead and plan a sequence of actions to reach their desired outcome. It's as if they have a map and are trying to find the best route to their destination.
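The map analogy translates almost literally into code. Here is a small sketch that plans a route with breadth-first search; the `ROADS` graph is invented for illustration, and real planners usually rely on more capable search algorithms.

```python
from collections import deque

def plan_route(graph, start, goal):
    """Breadth-first search: returns a route with the fewest stops, or None."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for neighbour in graph.get(path[-1], []):
            if neighbour not in visited:
                visited.add(neighbour)
                frontier.append(path + [neighbour])
    return None

# Hypothetical one-way road map.
ROADS = {
    "home": ["park", "mall"],
    "park": ["office"],
    "mall": ["cinema"],
    "cinema": ["office"],
}
```

Here `plan_route(ROADS, "home", "office")` returns `["home", "park", "office"]`: the agent considers whole sequences of actions before committing to the first one.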

Utility-based agents

These ones are even more advanced. They assign a "goodness" score to each possible state based on a utility function. They not only focus on a single goal but also take into account factors like uncertainty, conflicting goals and the relative importance of each goal. They choose actions that maximize their expected utility, much like a superhero trying to save the day while minimizing collateral damage.
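A minimal sketch of this idea: each action has several possible outcomes with a probability and a utility score, and the agent picks the action with the highest expected utility. The routes and numbers below are made up for illustration.

```python
def expected_utility(outcomes):
    """outcomes: list of (probability, utility) pairs for one action."""
    return sum(p * u for p, u in outcomes)

def choose_action(actions):
    """Pick the action whose expected utility is highest."""
    return max(actions, key=lambda name: expected_utility(actions[name]))

# Hypothetical choices with uncertain outcomes.
ACTIONS = {
    "fast_route": [(0.7, 10), (0.3, -20)],  # quick but risky: 0.7*10 + 0.3*(-20) = 1
    "safe_route": [(1.0, 4)],               # slower but certain: 4
}
```

With these numbers the agent prefers `safe_route`, because the risk of a bad outcome outweighs the higher payoff of the fast route.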

Learning agents

Learning agents are the ultimate adaptors. They start with a basic set of knowledge and skills, but constantly improve based on their experiences. They have a learning element that receives feedback from a critic who tells them how well they're doing. The learning element then tweaks the agent's performance to do better next time. It's like having a built-in coach that helps the agent perform their task better and better over time.
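The following sketch shows the performance element, the critic and the learning element working together. It is a deliberately simplified, purely greedy learner with no exploration; the action names and the `critic` scores are hypothetical.

```python
class LearningAgent:
    def __init__(self, actions, learning_rate=0.5):
        self.values = {a: 0.0 for a in actions}  # current estimate per action
        self.learning_rate = learning_rate

    def act(self):
        # Performance element: pick the action currently believed to be best.
        return max(self.values, key=self.values.get)

    def learn(self, action, reward):
        # Learning element: move the estimate towards the critic's feedback.
        old = self.values[action]
        self.values[action] = old + self.learning_rate * (reward - old)

def critic(action):
    # Hypothetical critic: scores how well each action worked.
    return {"template_reply": -1.0, "personal_reply": 1.0}[action]

def train(agent, rounds=3):
    for _ in range(rounds):
        action = agent.act()
        agent.learn(action, critic(action))
    return agent
```

After a few rounds, the agent stops choosing `template_reply` because the critic's negative feedback lowered its estimated value.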

These theoretical concepts are great for understanding the basics of AI agents, but modern software agents powered by LLMs are like a mashup of all these types. LLMs can juggle multiple tasks, plan for the future, and even estimate how useful different actions might be.

Let’s find out if there are any documented benefits of this approach.

What are the benefits of AI agents?

To understand the real impact of AI agents, we looked at LangChain’s recent State of AI Agents report. This survey of more than 1,300 professionals across a variety of industries found that 51% of companies are already using AI agents in production, with adoption rates similar across both tech and non-tech sectors.

This isn’t just hype – these are documented benefits that companies are already seeing from employing AI agents:

Faster information analysis and decision-making

Handle large amounts of data, extract key insights and create summaries. This frees professionals from time-consuming research tasks and helps teams make data-driven decisions faster.

Increased team productivity

Automate routine tasks, manage schedules and optimise workflows. Teams report that they have more time for creative and strategic work when agents are busy with administrative tasks.

Enhanced customer experience

Speed up response times, handle basic inquiries and provide 24/7 support. This improves customer satisfaction and reduces the burden on support teams.

Accelerated software development

Help with coding tasks, debugging and documentation. This speeds up development cycles and helps maintain code quality.

Improved data quality and consistency

Automatically process and enrich data, ensure consistency and reduce manual data entry errors.

These benefits often translate into greater cost-efficiency. Still, implementing AI agents requires an understanding of their core components and how they work together. Let's take a closer look!

What are the key components of an AI agent?

In essence, an AI agent collects data with sensors, comes up with rational solutions using a reasoning engine, performs actions with actuators and learns from mistakes through its learning system. But what does this process look like in detail?

Let's break down the steps of an LLM-powered software agent.

Sensors

Information about the environment usually arrives as text. This can be:

- Plain natural language text, such as a user query or question;

- Semi-structured information, such as Markdown or Wiki formatted text;

- Various diagrams or graphs in text format, such as Mermaid flowcharts;

- More structured text, such as a JSON object, tabular data, log streams or time series data;

- Code snippets or even complete programs in many programming languages.

Multimodal LLMs can receive images or even audio data as input.

Actuators

Most language models can only produce textual output. However, this output can be in a structured format such as XML, JSON, short snippets of code or even complete API calls with all query and body parameters.

It’s now the developer's job to feed the outputs from LLMs into other systems (e.g. make an actual API call or run an n8n workflow).

Action results can go back into the model to provide feedback and update the information about the environment.
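As an illustration of the actuator side, the sketch below takes a model's textual output, parses it as JSON and dispatches it to a matching function. The JSON shape (`tool` and `arguments` keys) and the `get_weather` tool are assumptions made for this example, not a format required by any particular model.

```python
import json

def get_weather(city: str) -> str:
    # Hypothetical tool; a real one would call an external API.
    return f"Sunny in {city}"

TOOL_REGISTRY = {"get_weather": get_weather}

def execute_llm_output(raw_output: str) -> str:
    """Parse the model's JSON text and run the requested tool."""
    try:
        call = json.loads(raw_output)
    except json.JSONDecodeError:
        return "error: output was not valid JSON"
    if not isinstance(call, dict):
        return "error: expected a JSON object"
    tool_name = call.get("tool")
    if not isinstance(tool_name, str) or tool_name not in TOOL_REGISTRY:
        return f"error: unknown tool {tool_name!r}"
    return TOOL_REGISTRY[tool_name](**call.get("arguments", {}))
```

The returned string, including any error message, can be passed back to the model as feedback, so that it can correct a malformed call on the next step.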

Reasoning engine (aka the "brain")

The "brain" of an LLM-powered AI agent is, well, a large language model itself. It makes rational decisions based on goals in order to maximize a performance measure. When necessary, the reasoning engine receives feedback from the environment, checks its own work and adapts its actions.

But how exactly does it work?

Giant pre-trained models such as GPT-4, Claude 3.5, Llama 3 and many others have a "baked in" understanding of the world they have gained from piles of data during training. Multimodal LLMs such as GPT-4o go further and get not only text, but also images, audio and even video data for training. Further fine-tuning allows these models to perform better at specific tasks.

Where exactly the boundaries of those tasks lie is still an area of ongoing research, but we already know that LLMs are able to:

- follow instructions,

- analyze visual and audio inputs,

- imitate human-like reasoning,

- understand the implied intent just from the user commands (known as prompts),

- provide replies in a structured way, which allows direct connections of LLMs to external systems (via function or API calling).

All that remains is the final step: how to build a series (or chain) of prompts so that an LLM can simulate autonomous behavior.

And this is exactly where LangChain comes into play!

What are the AI agent examples?

Based on LangChain’s State of AI Agents report and recent developments in the field, there are two main approaches to implementing AI agents: human-activated and event-activated (ambient) agents.

Let’s look at examples of both.

Human-activated agents

These agents respond to direct human input via chat interfaces or structured commands:

- Research agents (like Perplexity)

Process user questions to search, analyze and synthesize information from multiple sources, maintaining context across conversations.

- Customer service agents

Handle customer inquiries, maintain conversation context, and make decisions about when to escalate to human agents.

- Development assistants (like aider)

AI pair programming agents that understand codebases, suggest improvements, and help developers write better code faster.

Event-activated (ambient) agents

These agents work in the background, responding to events and system triggers without direct human intervention:

- Email management agents

Monitor inboxes, draft responses and flag important messages for human review.

- Security monitoring agents

Review system logs, detect anomalies and alert teams about potential issues.

- Data quality agents

Continuously check incoming data, enforce consistency rules and flag anomalies.

For those interested in exploring advanced AI agent frameworks, several projects provide additional opportunities:

- LangChain for building complex agent workflows;

- BabyAGI (paper, GitHub) for task management and planning;

- AgentGPT for deploying agents via a browser.

As we've seen, AI agents come in two main types: those that respond to direct human input and those that work autonomously in the background. Both approaches have their place in modern automation strategies, and the choice depends on your specific needs.

How to create an AI agent?

Now that we’ve covered the key theoretical concepts behind AI agents, it’s time to build one.

Before you begin, define the purpose and key components of an agent, including an LLM, memory, and reasoning capabilities.

Choose a framework such as LangChain or LlamaIndex to integrate RAG, set up APIs and execution logic. Finally, optimize the agent with feedback loops, monitoring, and fine-tuning to improve its performance over time.

Why use LangChain for AI agents?

In the context of AI agents, LangChain is a framework that lets you leverage large language models (LLMs) to design and build these agents.

Traditionally, you’d hard-code the whole sequence of actions an agent takes.

LangChain simplifies this process by providing prompt templates and tools that an agent gets access to. An LLM acts as the reasoning engine behind your agent and decides what actions to take and in which order. LangChain hides the complexity of this decision making behind its own API. Note that this is not a REST API, but rather an internal API designed specifically for interacting with these models to streamline agent development.

This simple conversation agent uses window buffer memory and a tool for making Google search requests. With n8n you can easily swap Language Models, provide different types of chat memory and add extra tools.
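If you are curious what "window buffer memory" means in practice, here is a generic Python sketch of the idea: only the most recent exchanges are kept and replayed to the model. This is our own simplified illustration, not the actual n8n or LangChain implementation, and the class and method names are hypothetical.

```python
from collections import deque

class WindowBufferMemory:
    """Keeps only the last `window_size` exchanges; older ones are dropped."""

    def __init__(self, window_size: int = 3):
        self.exchanges = deque(maxlen=window_size)

    def add(self, user_message: str, ai_message: str) -> None:
        self.exchanges.append((user_message, ai_message))

    def as_prompt(self) -> str:
        """Render the remembered exchanges as text to prepend to the next prompt."""
        lines = []
        for user_message, ai_message in self.exchanges:
            lines.append(f"Human: {user_message}")
            lines.append(f"AI: {ai_message}")
        return "\n".join(lines)
```

A small window keeps the prompt short and cheap, at the cost of the agent forgetting anything said earlier in the conversation.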

Building smart: Create your own intelligent data analyst AI agent with n8n

Let’s build a practical example now: an intelligent data analyst agent that helps users get insights from a database using natural language.

Instead of overloading the LLM context window with raw data, our agent will use SQL to efficiently query the database - just like human analysts do. This approach combines the best of both worlds: users can ask questions in plain English, while the agent handles the technical complexities of interacting with the database behind the scenes.

In this example, we use a sample customer database, but the same principles apply to any business data you might have. Our agent will help answer questions like “What are our top-selling products?” or “What are the sales trends by region?” without requiring users to know SQL.
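To see why querying beats pasting raw rows into the context window, consider this small Python sketch. It builds a tiny in-memory SQLite database and runs the kind of aggregate query an agent might generate. The table and column names are simplified assumptions for illustration and may not match the sample database used later in this guide.

```python
import sqlite3

def build_demo_db() -> sqlite3.Connection:
    """Create a tiny in-memory database with invented sample data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE genres (GenreId INTEGER PRIMARY KEY, Name TEXT);
        CREATE TABLE tracks (TrackId INTEGER PRIMARY KEY, GenreId INTEGER);
        CREATE TABLE invoice_items (TrackId INTEGER, UnitPrice REAL, Quantity INTEGER);
        INSERT INTO genres VALUES (1, 'Rock'), (2, 'Jazz');
        INSERT INTO tracks VALUES (10, 1), (11, 1), (20, 2);
        INSERT INTO invoice_items VALUES (10, 1.0, 2), (11, 1.0, 1), (20, 2.0, 1);
    """)
    return conn

# The database does the heavy lifting; only the summary rows reach the LLM.
REVENUE_BY_GENRE = """
    SELECT g.Name, SUM(i.UnitPrice * i.Quantity) AS revenue
    FROM invoice_items AS i
    JOIN tracks AS t ON t.TrackId = i.TrackId
    JOIN genres AS g ON g.GenreId = t.GenreId
    GROUP BY g.Name
    ORDER BY revenue DESC
"""

def revenue_by_genre(conn: sqlite3.Connection) -> list:
    return conn.execute(REVENUE_BY_GENRE).fetchall()
```

For the demo data, `revenue_by_genre(build_demo_db())` returns `[('Rock', 3.0), ('Jazz', 2.0)]`. However many invoice rows the real table holds, the model only has to read one line per genre.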

To create a workflow, sign up for a cloud n8n account or self-host your own n8n instance.

Once you are in, find the page with the template and click “Use workflow”. Alternatively, create a workflow from scratch.

Step 1. Download and save SQLite file

The top part of the workflow starts with the Manual trigger.

- The HTTP Request node downloads a Chinook example database as a zip archive;

- The Compression node extracts the content using a decompress operation;

- Finally, the Read/Write Files from Disk node saves the .db file locally.

Run this part manually only once.
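For readers who prefer to see the same three steps as code, here is a rough Python equivalent of what these nodes do: download an archive, decompress it and save the .db file to disk. The function names are our own, and the download URL is left as a parameter because it depends on where you get the sample database from.

```python
import io
import urllib.request
import zipfile
from pathlib import Path

def download(url: str) -> bytes:
    """Fetch the zip archive; the caller supplies the URL."""
    with urllib.request.urlopen(url) as response:
        return response.read()

def extract_db(zip_bytes: bytes, dest_dir: str) -> Path:
    """Unpack the first .db file found in the archive and save it to dest_dir."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        db_names = [n for n in archive.namelist() if n.endswith(".db")]
        if not db_names:
            raise ValueError("archive contains no .db file")
        target = Path(dest_dir) / Path(db_names[0]).name
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(archive.read(db_names[0]))
    return target
```

In a script you would chain the two calls, for example `extract_db(download(url), "data")`, and run it once, just like the manual part of the workflow.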

Step 2. Receive the chat message and load the local SQLite file

The lower part of the workflow is a bit more complex. Let’s take a closer look at how it works.

- The first node is a chat trigger. This is where you can send queries, such as "What is the revenue by genre?"

- Immediately afterwards, the local chinook.db file is loaded into memory.

- The next Set node combines the binary data with the Chat Trigger input. Select the JSON mode and provide the following expression:

{{ $('Chat Trigger').item.json }}. Also, turn on the "Include Binary File" toggle (you can find it by clicking on Add Options).

Step 3. Add and configure the LangChain Agent node

Let’s take a look at the LangChain Agent node.

Select the SQL Agent type and SQLite database source. This allows you to work with a local SQLite file without connecting to remote sources.

Make sure that the Input Binary Field name matches the binary data name.

Leave the rest of the settings unchanged, close the config window and connect 2 additional nodes: the Window Buffer Memory node, to store past responses, and the Model node, such as the OpenAI Chat model node. Choose a model (e.g. gpt-4-turbo) and set the temperature. For coding tasks, it is better to use lower values, such as 0.3.

Now you can ask various questions about your data, even non-trivial ones! Compare the following user inputs:

- "What are the names of the employees?" requires just 2 SQL queries VS

- "What are the revenues by genre?", where the agent has to make several requests before arriving at a solution.

This agent can still be improved, e.g. you can always pass the schema so the agent doesn’t waste resources figuring out the structure every time.
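One possible way to do that, sketched in Python: read the table definitions from SQLite once and include them in the system prompt. The `sqlite_master` table is a standard part of SQLite, but the prompt wording and function names below are hypothetical and should be adapted to your agent.

```python
import sqlite3

def get_schema(conn: sqlite3.Connection) -> str:
    """Return the CREATE TABLE statements that SQLite stores in sqlite_master."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        "WHERE type = 'table' AND sql IS NOT NULL ORDER BY name"
    ).fetchall()
    return "\n".join(row[0] for row in rows)

def build_system_prompt(conn: sqlite3.Connection) -> str:
    # Hypothetical prompt wording; adjust it for your own use case.
    return (
        "You are a data analyst. Answer questions by writing SQLite queries.\n"
        "The database schema is:\n" + get_schema(conn)
    )
```

With the schema already in the prompt, the agent can skip the exploratory queries and go straight to the query that answers the question.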

FAQ

Can LLM act as an AI agent?

While LLMs cannot act as standalone AI agents, they are one of the agents’ key components. Let’s see why.

Modern AI research revolves around neural networks. Until recently, most networks were only capable of performing a single task or a bundle of closely related tasks. A great example is DeepMind's Agent57, which could play all 57 Atari games in the standard benchmark with a single model and scored above the human baseline on every one of them.

This all changed with the advent of modern transformer-based large language models.

Early GPT (generative pre-trained transformer) models could only serve as fancy chatbots with encyclopedic knowledge. However, as the number of model parameters increased, almost every modern LLM grasped many ideas purely from textual data. Simply put: no one specifically trained the model to translate text or fix code, and yet it learned to do both.

Mixing a huge amount of training data and a large number of model parameters was enough to “bake in” many real-world ideas into the model.

Through further fine-tuning, these transformer-based models were able to follow instructions even better.

All this incredible progress allows you to create AI agents with just a set of sophisticated instructions called prompts.

Is ChatGPT an AI agent?

ChatGPT is an impressive system that combines LLM capabilities with several built-in tools, including web browsing, data analytics, and recently added scheduled tasks. It exhibits some rudimentary agentic behaviour, but still lacks crucial components. For example, ChatGPT is not yet fully autonomous and requires human input at each iteration.

The choice between ChatGPT and custom AI agents depends on your needs and usage scenarios. ChatGPT is great for general conversational AI, content creation and broad applications. For specialized tasks, real-time data processing and integrated system solutions, custom AI agents might be a better fit.

What are multi-agent systems?

Multi-agent systems are environments in which multiple AI agents work together, each performing specific tasks while coordinating their actions. Think of it as a team of specialists: one agent can handle customer requests, another processes data, and a third manages scheduling. These agents communicate and collaborate to achieve complex goals that would be difficult for a single agent to accomplish.

How do AI agents learn and improve over time?

AI agents can learn and improve in several ways without necessarily changing their underlying AI models:

- Few-shot learning is a technique where agents learn from recent successful interactions and use them as examples for similar future cases. For instance, a customer service agent can use recent successful responses as templates for new queries.

- Retrieval-Augmented Generation (RAG) may be required to work with company-specific information. By building and accessing an expanding knowledge base, agents can provide more accurate, contextual responses over time without modifying their underlying AI models.

- Prompt optimization involves storing successful prompts and their outcomes in a vector database, then automatically adjusting prompt templates based on performance metrics. For example, if certain prompt structures consistently lead to higher user satisfaction ratings, the agent will favor these patterns.
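As a rough illustration of the few-shot approach, the sketch below picks the most similar past question/answer pairs and prepends them to a new question. For simplicity it compares texts by word overlap; a production setup would more likely use embeddings and a vector store. All names and sample data here are hypothetical.

```python
def similarity(a: str, b: str) -> float:
    """Jaccard overlap between the word sets of two texts (0.0 to 1.0)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)

def build_few_shot_prompt(question: str,
                          history: list[tuple[str, str]],
                          k: int = 2) -> str:
    """Prepend the k most similar past question/answer pairs to the new question."""
    best = sorted(history,
                  key=lambda qa: similarity(question, qa[0]),
                  reverse=True)[:k]
    examples = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in best)
    return f"{examples}\n\nQ: {question}\nA:"

# Hypothetical log of past interactions that were rated as successful.
HISTORY = [
    ("how do I reset my password", "Use the reset link on the login page."),
    ("where is my invoice", "Check the billing page."),
    ("how do I change my password", "Open account settings."),
]
```

The underlying model stays the same; the agent "improves" only because the examples it sees grow and get more relevant over time.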

For more advanced scenarios involving LLMs, learning can happen at the model level through:

- Continuous pre-training is the process of updating the model with new domain data to expand its knowledge in specific areas.

- Fine-tuning involves adjusting the model for better performance on specific tasks.

However, not all AI agents need sophisticated learning capabilities. For some tasks, agents with fixed behaviours and clear performance metrics are better suited. The key is to tailor the learning approach to your specific needs.

Wrap up

In this guide, we briefly introduced what an AI agent is and how it works, what types of AI agents exist and what the benefits of using one are.

We’ve also gone through some AI agent examples and showed how to create a LangChain SQL agent in n8n that can analyze a local SQLite file and provide answers based on its contents.

What’s next?

Now that you have an overview and a practical example of how to create AI agents, it’s time to challenge the status quo and create an agent for your real-world tasks.

With n8n’s low-code capabilities, you can focus on designing, testing and upgrading the agent. All the details are hidden under the hood, but you can of course write your own JS code in LangChain nodes if needed.

Whether you work alone, in a small team or in an enterprise, n8n has a lot to offer. Choose from our cloud plans and jump-start right away or explore powerful features of the Enterprise edition. If you’re a small growing startup, there is a special plan for you on the pricing page.

Join our community forum and share your success or seek support!