This Verified Node Spotlight was written by Vytenis Kaubrė, Content Researcher and Technical Copywriter for Oxylabs.

Web scraping sounds simple until you hit anti-bot systems, CAPTCHA challenges, and IP blocks. Building reliable pipelines demands expertise in HTTP request strategies, HTML parsing, and infrastructure scaling. This technical overhead can prevent many automation projects from launching.

Read this article to learn how to build a fast deep research agent using Oxylabs AI Studio in n8n, with no coding required. The workflow automatically handles sophisticated anti-scraping systems, parses the websites it visits, and scales reliably for production use.

What Is Web Scraping?

Web scraping is the automated extraction of public data from websites. Instead of manually copying information, scripts or tools programmatically retrieve and structure the data you need. Traditionally, this is done by writing scripts in programming languages like Python, JavaScript, or C#, though modern solutions offer simpler approaches.
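
To make the traditional, script-based approach concrete, here is a minimal sketch in Python that uses only the standard library. The HTML snippet, the `name` and `price` class names, and the `extract_products` helper are all made up for illustration; a real page would need its own selectors and a step that downloads the HTML first.

```python
from html.parser import HTMLParser

# Hypothetical HTML standing in for a downloaded product page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">39.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> elements."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished {"name": ..., "price": ...} records
        self._field = None   # field the parser is currently inside, if any
        self._current = {}   # record being built for the current <li>

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Text outside the two tracked spans is ignored.
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            # Structure the raw text: convert the price to a number.
            self._current["price"] = float(self._current["price"])
            self.products.append(self._current)
            self._current = {}


def extract_products(html):
    """Return a list of product dictionaries found in the given HTML string."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


print(extract_products(SAMPLE_HTML))
```

Even this small parser is tied to one page layout: if the site renames a class or restructures its list, the script silently returns nothing, which is the maintenance burden that simpler approaches aim to remove.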

Common use cases:

- E-commerce: Track competitor pricing and product availability in real time

- AI development: Collect large datasets for training machine learning models

- Market research: Gather consumer behavior insights and industry trends

- Brand protection: Monitor online platforms for counterfeit products and trademark violations

- SEO monitoring: Track keyword rankings and analyze competitor performance

- Travel aggregation: Collect flight prices, hotel rates, and customer reviews

- Ad verification: Ensure ads display correctly across different platforms

Top Challenges of Web Scraping

Extracting public data from the web presents several obstacles:

- Technical expertise barrier: Requires proficiency in programming, HTTP protocols, and HTML/CSS selectors

- Anti-bot defenses: Websites deploy CAPTCHAs, IP blocking, and fingerprinting that require sophisticated bypassing techniques

- Data extraction complexity: Each website structures HTML differently, demanding custom parsers that break when sites redesign

- Infrastructure scaling: Large operations need distributed systems, proxy rotation, and monitoring that divert resources from core business goals

Building a Fast Deep Research Flow in n8n

These challenges don't mean web scraping is impossible. The right tools can handle the complexity for you, which is exactly what this workflow does.

Oxylabs AI Studio is a low-code web scraping solution that eliminates technical barriers. Instead of writing code, you describe what data you need in plain English. It includes built-in proxy servers, automatic data parsing, and a headless browser, and it scales on demand. Oxylabs AI Studio integrates with n8n as both a node and a tool, providing purpose-built resources for different scraping needs:

- Search: Scrape Google Search and optionally extract content from each result

- Scraper: Scrape any website and get Markdown or structured JSON/CSV by describing your needs in plain English

- Crawler: Crawl entire websites to find relevant pages using natural language prompts

- Browser Agent: Control a web browser with natural language and extract data

Project Overview

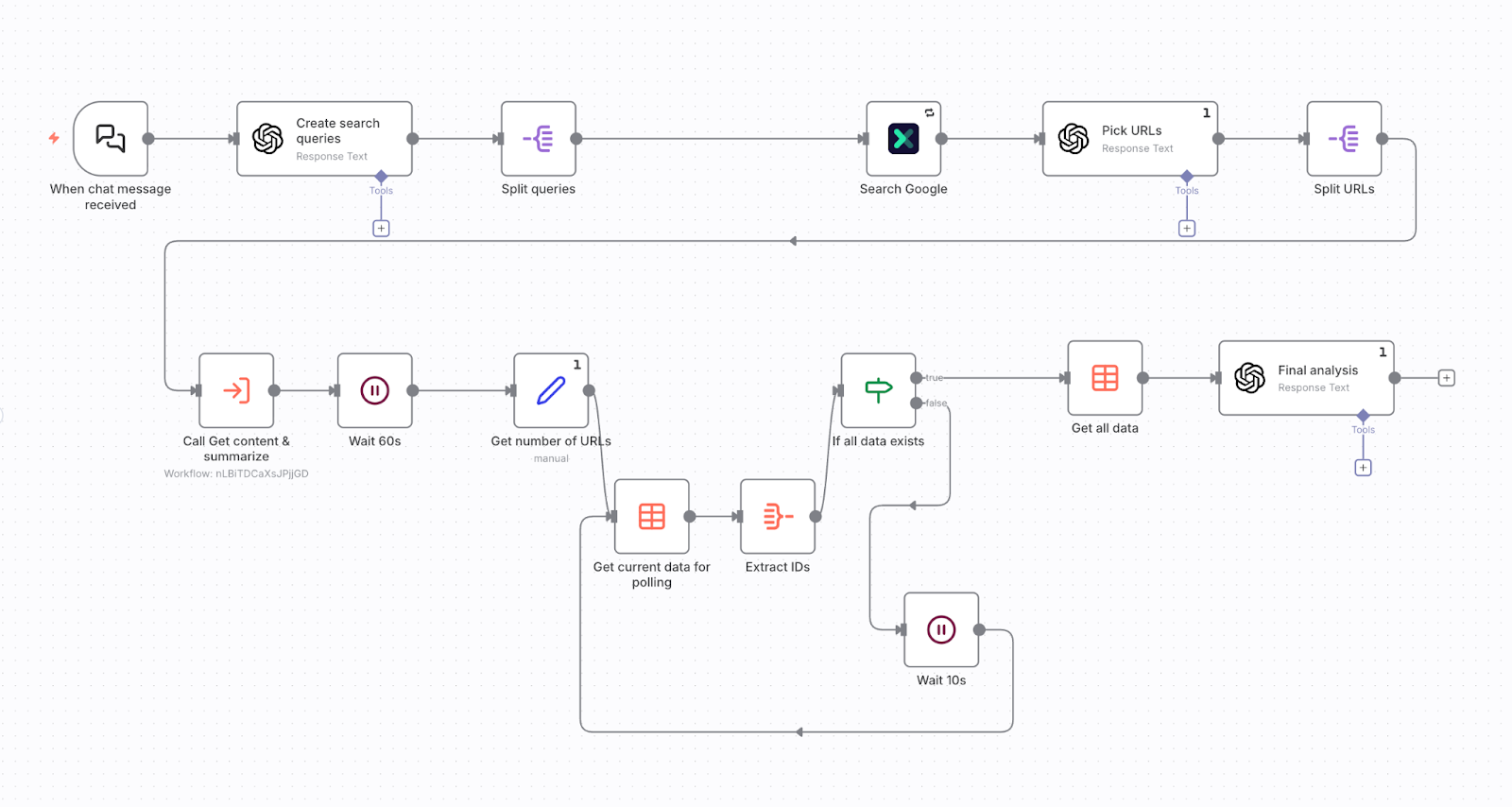

This deep research workflow:

- Analyzes the user's question and generates 3 strategic Google search queries

- Scrapes Google search results for each query

- Identifies the most relevant and authoritative sources for analysis

- Scrapes and summarizes each source in parallel

- Produces a comprehensive analysis report combining all insights

Prerequisites

You'll need just two things in addition to your n8n instance:

- Oxylabs AI Studio API key – Get a free API key with 1000 credits

- OpenAI API key (or alternatives like Claude, Gemini, or local Ollama LLMs)

Follow along with this tutorial in n8n.

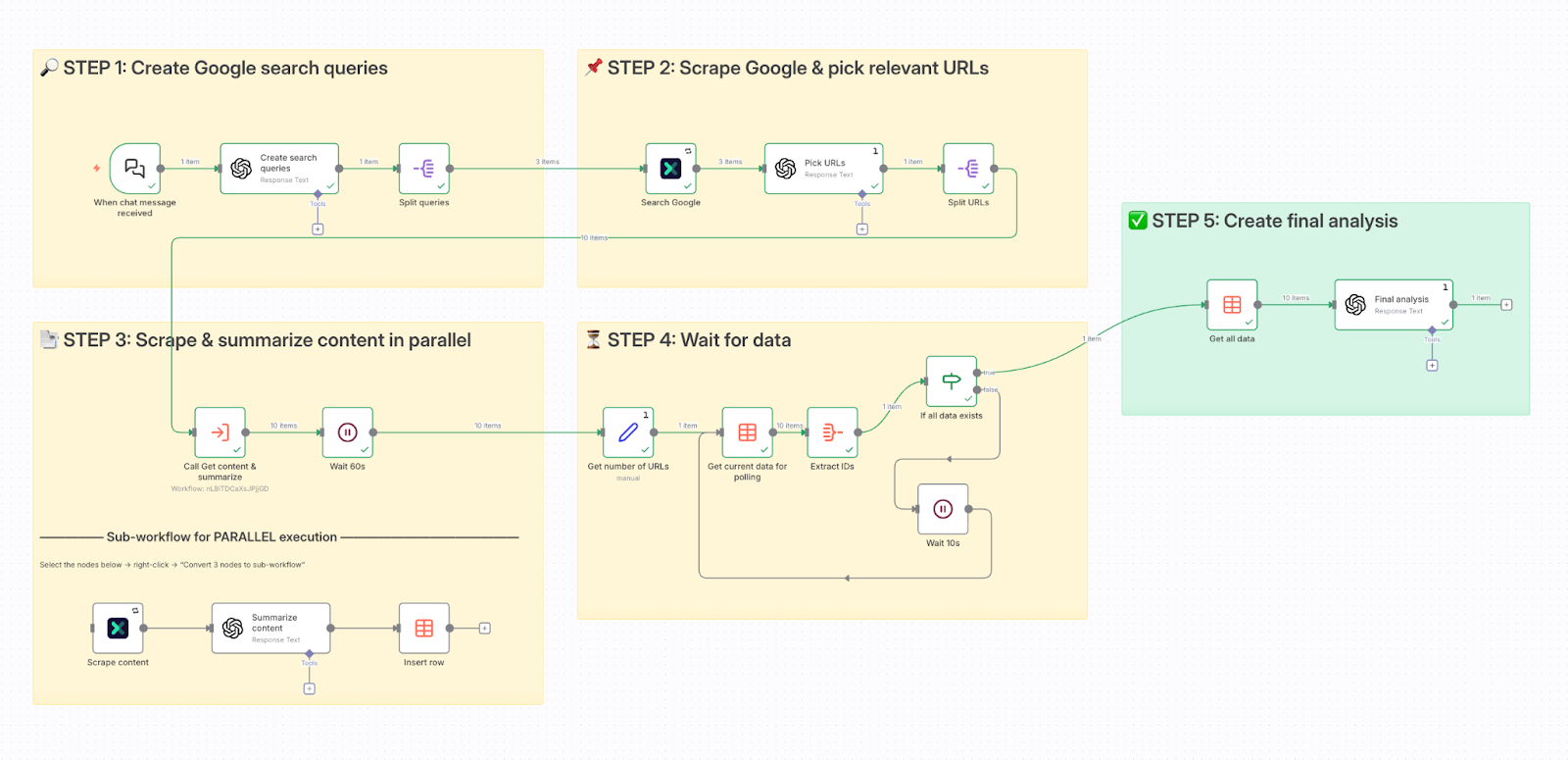

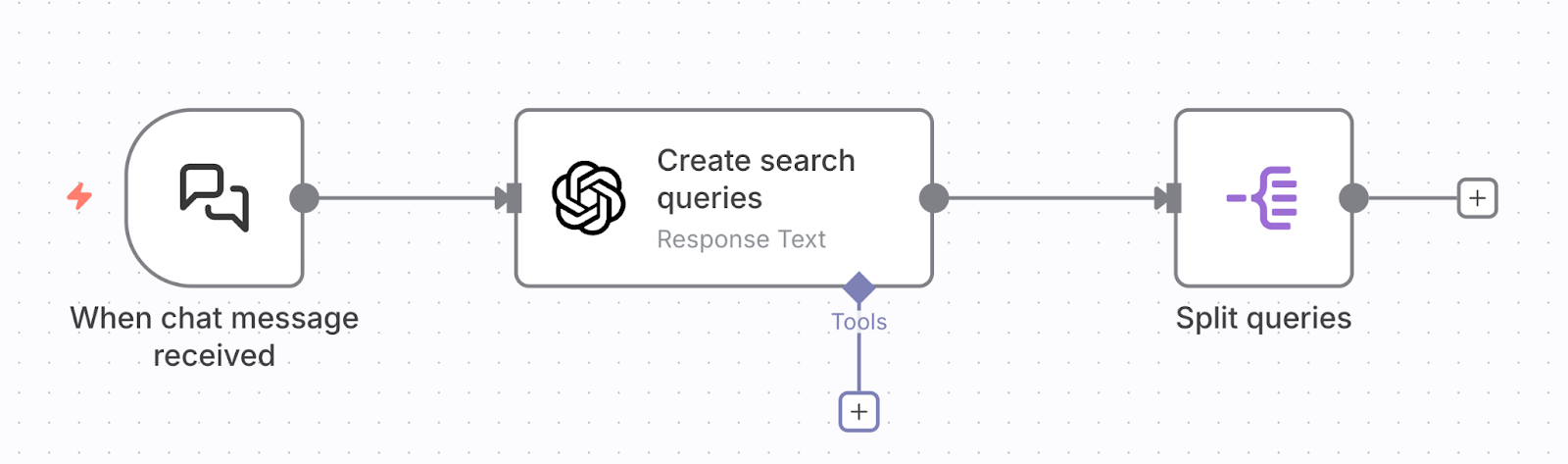

Step 1: Create Google Search Queries

- Add the When chat message received trigger node

- Add the OpenAI node > Message a model action

- Add the Split Out node

This setup lets you input any message through n8n's chat interface. The LLM then analyzes your message and generates strategic Google Search queries to uncover different aspects of your topic. The system prompt creates 3 search queries by default (adjust this number as needed).
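
For readers who want to see what the hand-off between the LLM and the Split Out node amounts to, the following Python sketch mimics it outside n8n. It assumes the model replies with a JSON object holding a `queries` array; that field name, the `split_out_queries` helper, and the sample reply are illustrative assumptions, not the template's actual output format.

```python
import json


def split_out_queries(llm_output, field="queries", expected=3):
    """Parse the model's JSON reply and return one item per search query.

    The "queries" field name is an assumption made for this sketch.
    """
    data = json.loads(llm_output)
    queries = [
        q.strip()
        for q in data.get(field, [])
        if isinstance(q, str) and q.strip()
    ]
    if len(queries) != expected:
        raise ValueError(f"expected {expected} queries, got {len(queries)}")
    # One dictionary per query, so each can be processed as a separate item.
    return [{"query": q} for q in queries]


# Hypothetical model reply for a question about building a summer house.
reply = json.dumps({
    "queries": [
        "summer house building costs",
        "summer house planning permission",
        "best materials for a summer house",
    ]
})

for item in split_out_queries(reply):
    print(item["query"])
```

Validating the number of queries up front is a design choice for the sketch: it surfaces a malformed model reply immediately instead of letting later steps run with fewer searches than expected.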

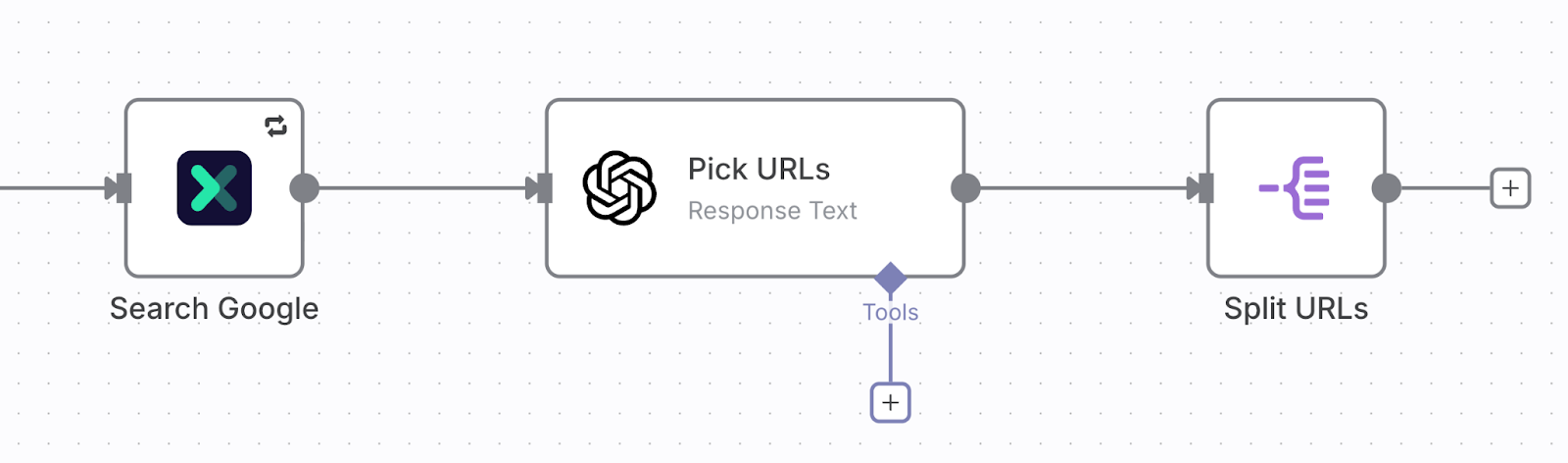

Step 2: Scrape Google & Select Relevant URLs

- Add the Oxylabs AI Studio node > Search resource

- Add the OpenAI node > Message a model action

- Add the Split Out node

Here, we scrape Google SERPs using the generated search queries, then filter for the most relevant and authoritative sources.

If you haven't already, install the Oxylabs AI Studio node as shown on this page and add it to your workflow. Remember, you can claim a free Oxylabs AI Studio API key with 1000 credits.

The Oxylabs AI Studio Search resource offers powerful capabilities:

- Limit: Returns up to 50 search results per query

- Return Contents: Extracts content from each search result

- Render JavaScript: Uses a headless browser to capture dynamic content
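
Because three queries on the same topic often return overlapping results, it can help to think about how the combined result list looks before the LLM picks sources. The Python sketch below merges result sets and drops duplicate URLs. It is an illustration only: the template leaves source selection to the LLM, and the result shape (dictionaries with `url` and `title` keys) and both helper functions are assumptions made for this example.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Lowercase the scheme and host, and drop the fragment and trailing slash."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/")
    query = f"?{parts.query}" if parts.query else ""
    return f"{parts.scheme.lower()}://{parts.netloc.lower()}{path}{query}"


def merge_results(result_sets):
    """Combine several lists of search results, keeping the first copy of each URL."""
    seen = set()
    merged = []
    for results in result_sets:
        for result in results:
            key = normalize(result["url"])
            if key not in seen:
                seen.add(key)
                merged.append(result)
    return merged


# Hypothetical results for two of the generated queries.
query_a = [
    {"url": "https://example.com/guide/", "title": "Summer house guide"},
    {"url": "https://example.org/costs", "title": "Cost breakdown"},
]
query_b = [
    {"url": "https://Example.com/guide#intro", "title": "Summer house guide"},
    {"url": "https://example.net/permits", "title": "Permit rules"},
]

for result in merge_results([query_a, query_b]):
    print(result["url"])
```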

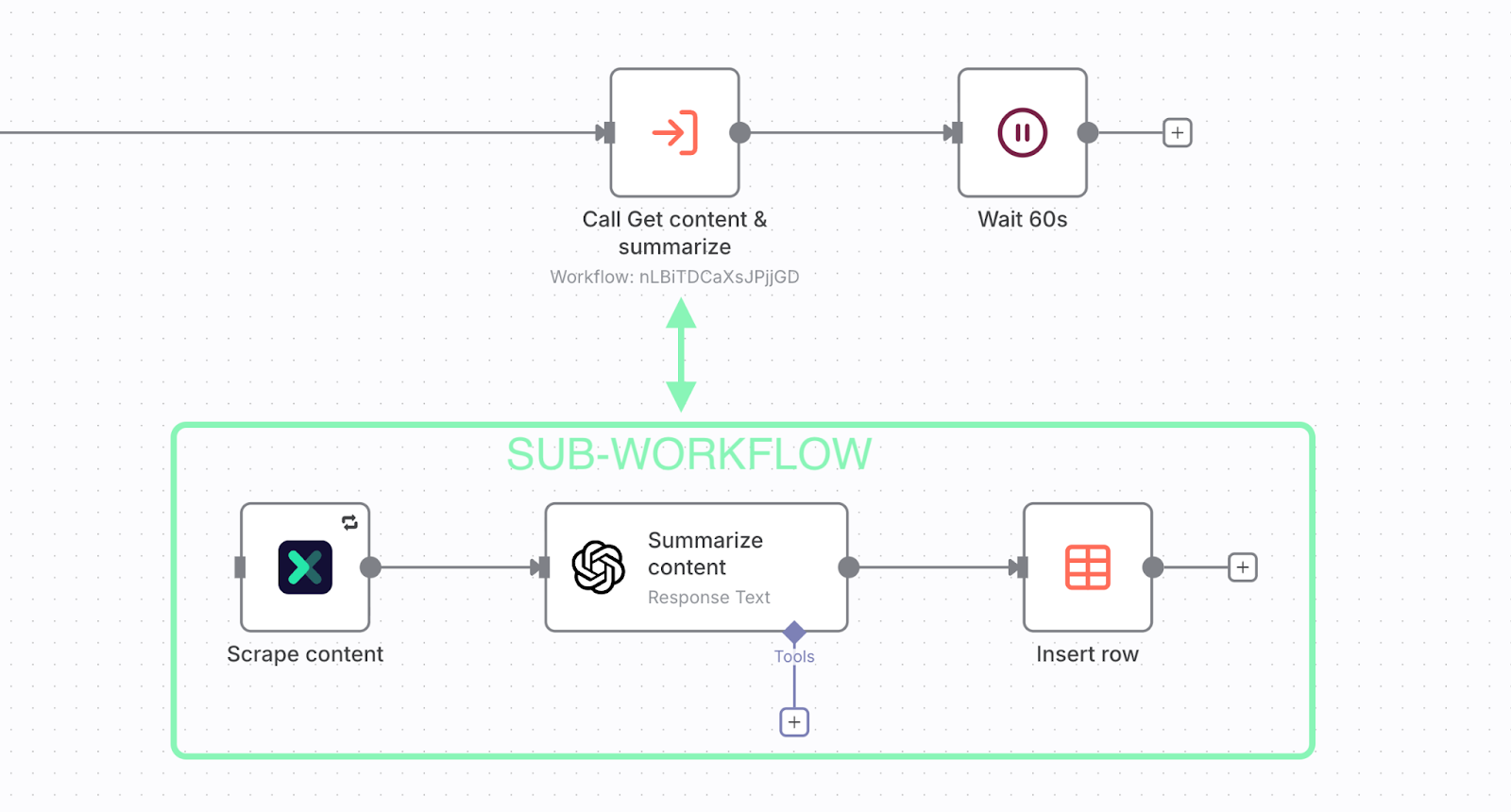

Step 3: Scrape & Summarize Content in Parallel

- Add the Oxylabs AI Studio node > Scraper resource

- Add the OpenAI node > Message a model action

- Create a data table

- Add the Data table node > Insert row action

- Convert these 3 nodes into a sub-workflow

- Add the Wait node

In this step, Oxylabs AI Studio scrapes each selected URL and returns content in clean Markdown format instead of raw HTML. To ensure quality analysis, we summarize each piece of content to extract key insights, then save each summary as a row in n8n's data table.

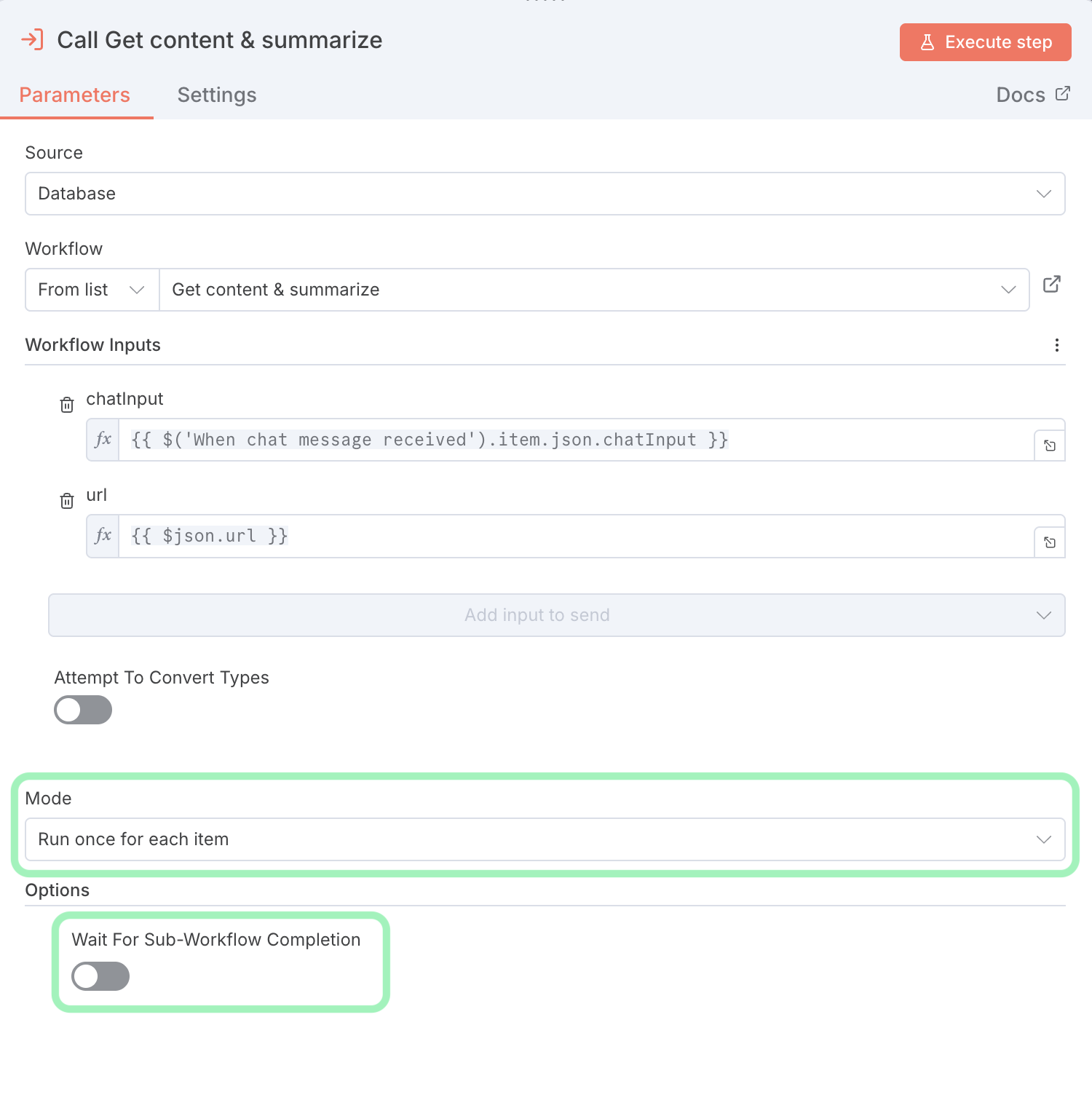

To dramatically speed up processing, save the 3 nodes as a sub-workflow. This ensures that the URLs are scraped and analyzed in parallel instead of one by one. After creating the sub-workflow, enable parallel processing with these settings:

- Mode: Run once for each item

- Options > Add Option > disable “Wait For Sub-Workflow Completion”
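
The speed-up from running the sub-workflow for all URLs at once can be illustrated outside n8n with a small Python sketch. It is an analogy under stated assumptions rather than a description of n8n's internals: `scrape_and_summarize` is a stand-in that only sleeps to simulate network and LLM latency, and the URLs are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def scrape_and_summarize(url):
    """Stand-in for the sub-workflow: scrape a page, summarize it, store a row."""
    time.sleep(0.1)  # simulated network and LLM latency
    return {"url": url, "summary": f"summary of {url}"}


def run_sequential(urls):
    """Process URLs one by one."""
    return [scrape_and_summarize(url) for url in urls]


def run_parallel(urls):
    """Process all URLs concurrently, one worker thread per URL."""
    with ThreadPoolExecutor(max_workers=len(urls)) as pool:
        return list(pool.map(scrape_and_summarize, urls))


urls = [f"https://example.com/page-{i}" for i in range(5)]

start = time.perf_counter()
sequential_rows = run_sequential(urls)
sequential_time = time.perf_counter() - start

start = time.perf_counter()
parallel_rows = run_parallel(urls)
parallel_time = time.perf_counter() - start

print(f"sequential: {sequential_time:.2f}s, parallel: {parallel_time:.2f}s")
```

One difference is worth noting: the thread pool here blocks until every task has finished, whereas the n8n setting above tells the parent workflow not to wait at all. That is why the workflow needs a separate way to detect when every row has arrived.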

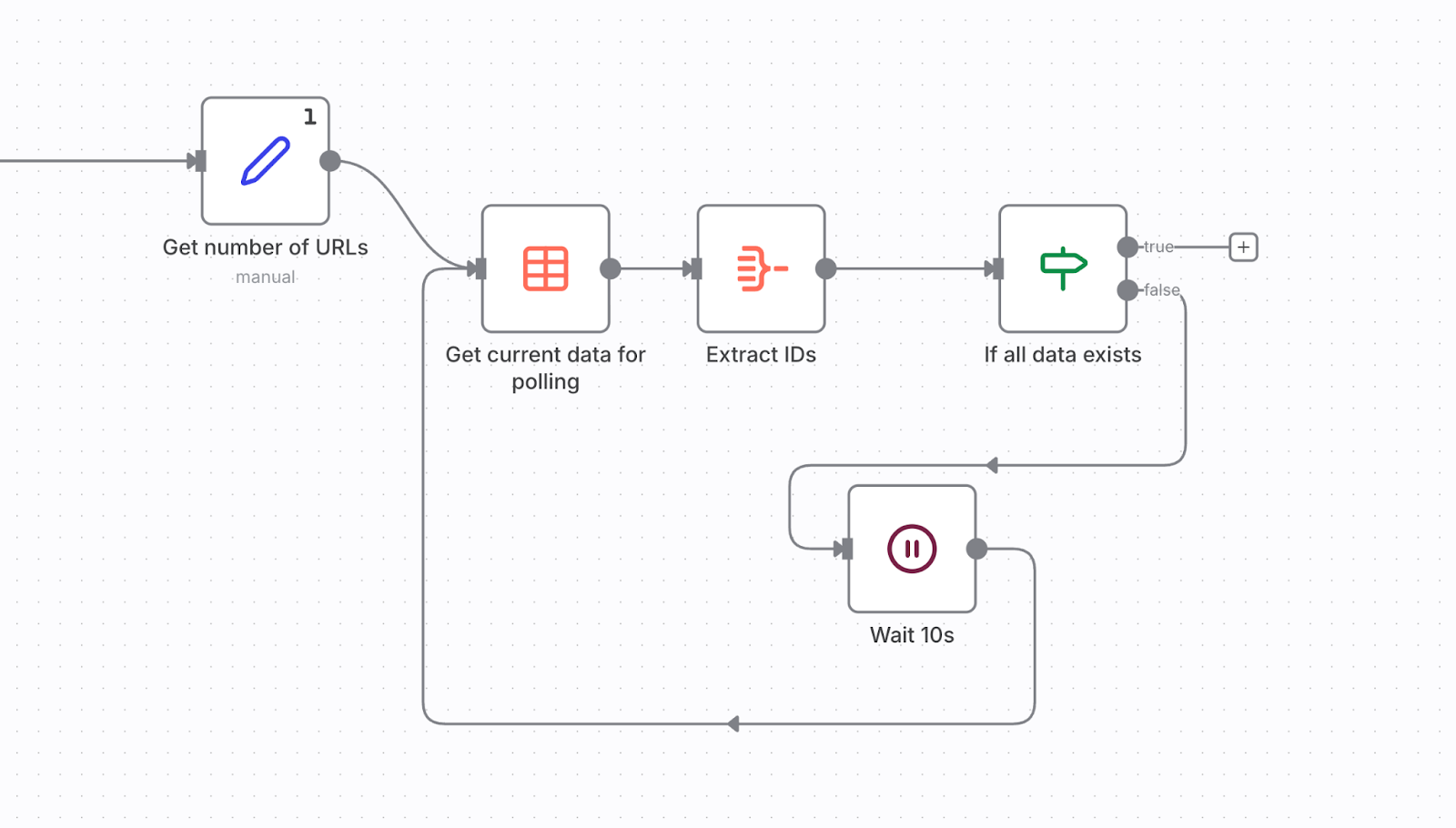

Step 4: Wait for Data

- Add the Edit Fields (Set) node

- Add the Data table node > Get row(s) action

- Add the Aggregate node

- Add the If node

- Add the Wait node

- Loop back through nodes 2, 3, 4, and 5 until all results are in

Since the sub-workflow runs in parallel, we need to wait for completion. While you could add a fixed Wait node that waits for 2-3 minutes, the dynamic approach is better. It checks whether the expected number of results exists in the data table by comparing the number of URLs sent to the sub-workflow against the last row ID. If they don't match, it waits 10 seconds and checks again. When they match, the loop exits for final processing.
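
The polling logic described above can be sketched in a few lines of Python. The `wait_for_rows` function and its parameters are hypothetical names for this illustration. Two details are assumptions that go beyond the description: the sketch exits when the row count is at least the expected number rather than exactly equal, and it adds an overall timeout so a failed sub-workflow run cannot keep the loop going forever.

```python
import time


def wait_for_rows(get_row_count, expected, interval=10.0, timeout=300.0,
                  sleep=time.sleep):
    """Poll until get_row_count() reaches `expected`; return the number of checks.

    get_row_count is any callable that reports how many result rows exist.
    The timeout is an addition made for this sketch.
    """
    deadline = time.monotonic() + timeout
    checks = 0
    while True:
        checks += 1
        if get_row_count() >= expected:
            return checks
        if time.monotonic() >= deadline:
            raise TimeoutError(f"only partial results after {timeout} seconds")
        sleep(interval)  # wait before checking the table again


# Simulated table that fills up between checks: 1 row, then 3, then 5.
counts = iter([1, 3, 5])
checks = wait_for_rows(
    get_row_count=lambda: next(counts),
    expected=5,
    sleep=lambda seconds: None,  # skip real waiting in this demo
)
print(f"all rows present after {checks} checks")
```

Compared with a fixed 2-3 minute wait, this finishes as soon as the data is complete and still copes with runs that take longer than usual.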

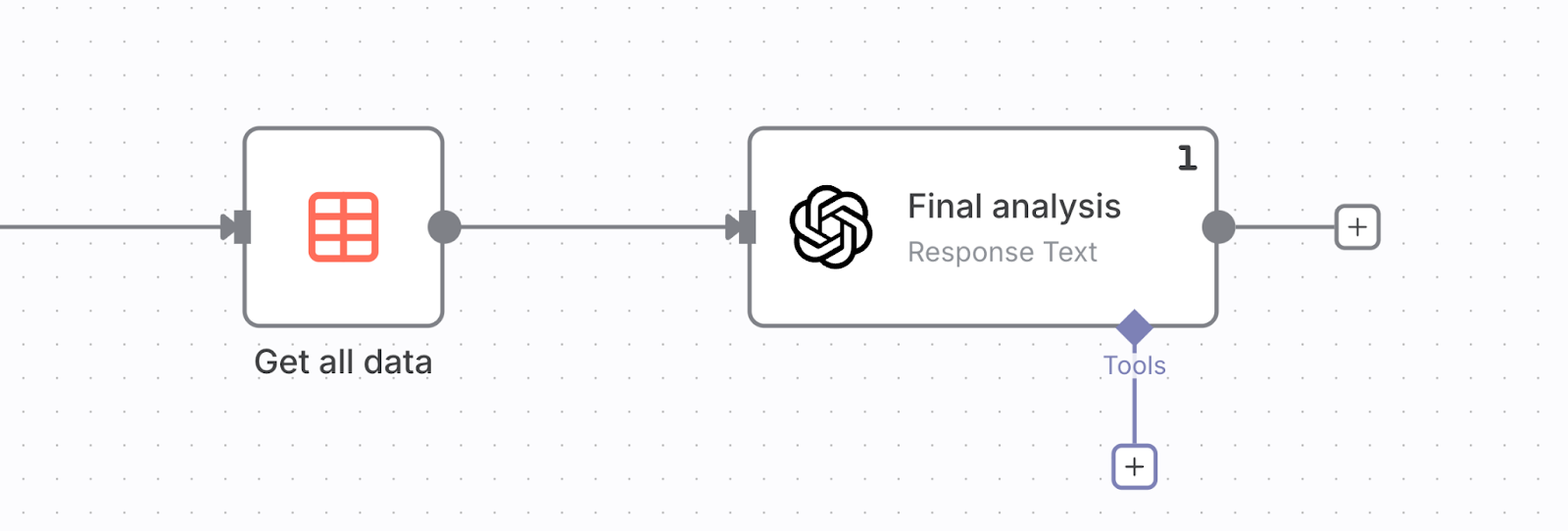

Step 5: Create Final Analysis

Connect these nodes to the True branch of the If node:

- Add the Data table node > Get row(s) action

- Add the OpenAI node > Message a model action

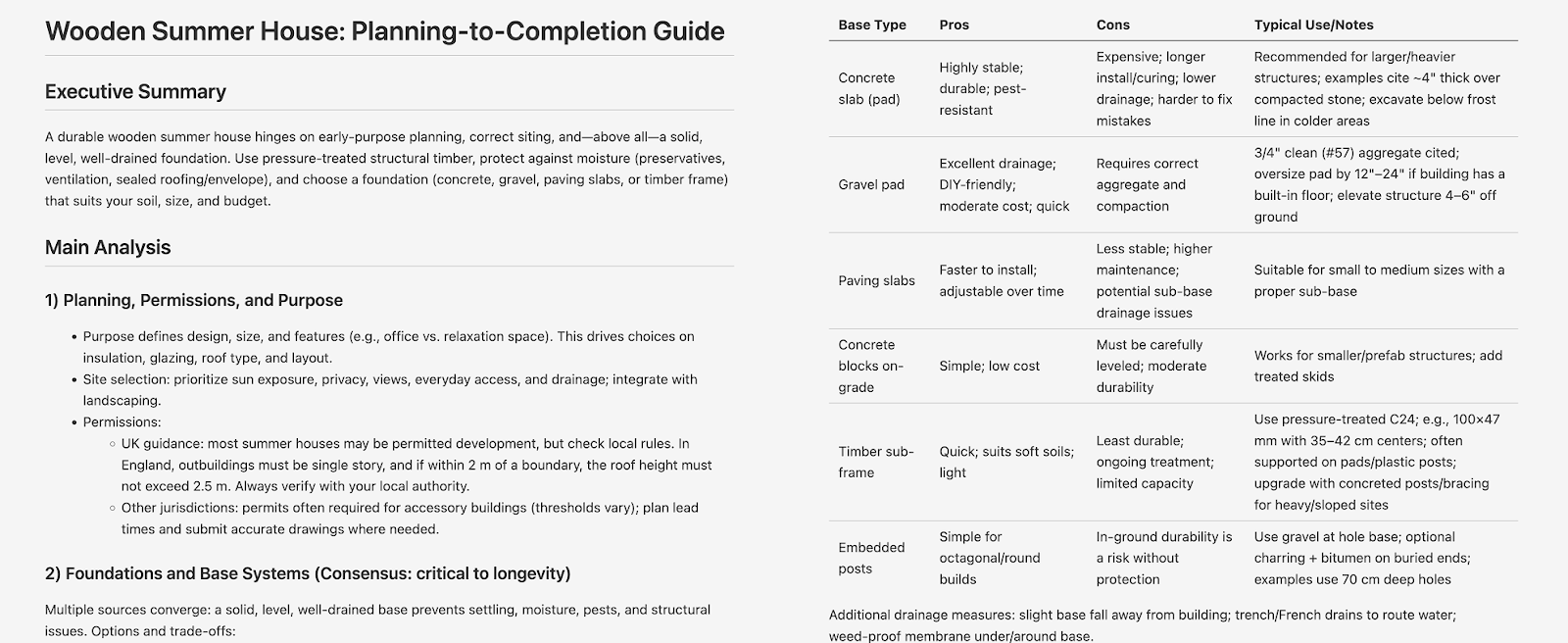

Once all summaries are ready, we read the entire table and pass the data to AI for synthesis. It creates a comprehensive and actionable report structured in Markdown. Here's an example output snippet about building a summer house:

To reuse this workflow, remember to clear the data table after the final analysis by adding a Data table node with the Delete row(s) action.
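
The synthesis step in this section, reading every stored summary and handing them to the model as a single input, can be pictured with the following Python sketch. The row fields (`url`, `summary`), the `build_synthesis_prompt` helper, and the prompt wording are assumptions for illustration and do not reproduce the template's actual system prompt.

```python
def build_synthesis_prompt(question, rows):
    """Combine all stored summaries into a single prompt for the final report."""
    sections = []
    for index, row in enumerate(rows, start=1):
        # Label each summary with its source so the report can attribute claims.
        sections.append(f"Source {index}: {row['url']}\n{row['summary'].strip()}")
    sources = "\n\n".join(sections)
    return (
        f"Research question: {question}\n\n"
        f"Summaries of {len(rows)} sources:\n\n{sources}\n\n"
        "Write a comprehensive, actionable report in Markdown "
        "that combines these insights."
    )


# Hypothetical rows as they might be read back from the data table.
rows = [
    {"url": "https://example.com/guide", "summary": "Covers foundations and framing."},
    {"url": "https://example.org/costs", "summary": "Breaks down typical budgets. "},
]

print(build_synthesis_prompt("How do I build a summer house?", rows))
```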

Next steps

The combination of n8n and Oxylabs AI Studio eliminates technical barriers: no proxy management, no anti-bot workarounds, no parsers and scrapers to maintain. Feel free to use this workflow as a foundation for your own automation pipelines.

Try it now by downloading the template from n8n.

Ways to expand:

- Connect nodes like Google Sheets, Notion, Airtable, or webhooks to route results where you need them

- Explore other AI Studio apps like Browser Agent for interactive browser control or Crawler for mapping entire websites

- Adjust the system prompts in LLM nodes to fit your specific research goals

- Scale up by processing more search queries, increasing results per query beyond 10, and selecting additional relevant URLs

Useful resources

Oxylabs AI Studio applications
