AI is the not-so-secret sauce behind so many successful products, and we’re proud of how n8n is democratising AI for all end users – whether you’re an engineer, data scientist, product manager, or just a curious hacker. But when it comes to working with AI, your workflows suddenly become less predictable.

AI Evaluations are a foundational practice for building with AI, turning guesswork into evidence and helping you understand whether updates and changes – like prompt tweaks, model swaps, or edge case fixes – actually improve your results or introduce new issues.

Tools like LangSmith help teams debug, test, and monitor AI app performance, but the learning curve is steep. That’s why we’re excited to bring Evaluations for AI workflows right to your canvas. You can now spin up evaluations as part of your AI workflows in n8n, for a more straightforward and less error-prone implementation, all on the same platform that you know and trust.

What are Evaluations for AI workflows?

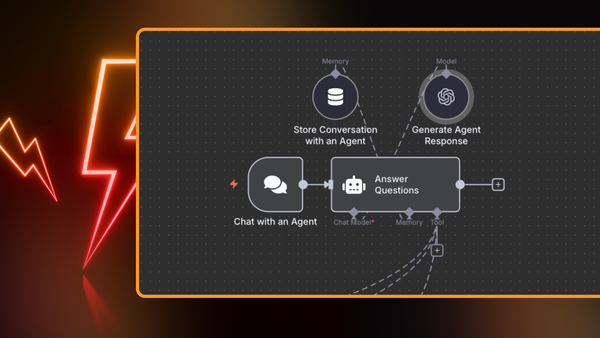

Our Evaluations for AI workflows lets you run a range of inputs against your workflow, observe the outputs, and apply metrics that are completely customizable. You can measure anything relevant to your use case, whether it’s correctness, toxicity and bias, or whether the agent called the right tool. This data allows you to analyze the effect of specific changes and to compare performance over time. In n8n, an evaluation is added as a dedicated path in your workflow that can be executed separately from other triggers, so you can focus on testing and iteration without disrupting production logic.
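To make the idea concrete, here is a minimal sketch of that loop in plain Python: run each input through the workflow, record the output, and apply a custom metric. It is an illustration only, not how n8n implements evaluations. The names `DATASET`, `fake_workflow`, `exact_match`, and `run_evaluation` are all hypothetical, and `fake_workflow` is a stand-in for a real AI workflow.

```python
from statistics import mean

# Hypothetical test cases: each pairs an input with the answer we expect.
DATASET = [
    {"input": "2 + 2", "expected": "4"},
    {"input": "capital of France", "expected": "Paris"},
    {"input": "largest planet", "expected": "Jupiter"},
]


def fake_workflow(text: str) -> str:
    """Stand-in for the AI workflow under test (an assumption, not an n8n API)."""
    answers = {"2 + 2": "4", "capital of France": "Paris"}
    return answers.get(text, "I don't know")


def exact_match(output: str, expected: str) -> float:
    """Custom metric: 1.0 when the output matches the expected answer, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_evaluation(workflow, dataset, metric) -> dict:
    """Run every test case through the workflow and score each output."""
    rows = []
    for case in dataset:
        output = workflow(case["input"])
        score = metric(output, case["expected"])
        rows.append({**case, "output": output, "score": score})
    return {"rows": rows, "mean_score": mean(row["score"] for row in rows)}


report = run_evaluation(fake_workflow, DATASET, exact_match)
for row in report["rows"]:
    print(f"{row['input']!r}: score={row['score']}")
print(f"mean score: {report['mean_score']:.2f}")
```

Swapping `exact_match` for another function with the same shape is all it takes to measure something different, which is the sense in which the metrics are customizable.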

To use Evaluations for AI workflows, you’ll need version 1.95.1 or higher. Check the release note for an overview of how to implement an evaluation, or explore the Evaluations documentation for detailed guidance, troubleshooting tips, sample datasets, and evaluation workflow templates.

Why AI Evaluations?

Update and deploy with confidence, even to production.

From real-world inputs and challenging edge cases to structuring all the data that your AI will ingest, running AI workflows reliably over time takes work. Prompt engineering is a great example – sometimes you change a prompt and find that it improves one use case but makes three others worse. We wrote about this painful process while building our own AI Agent. AI Evaluations give you a dedicated testing path within your workflow that keeps you on the right track, so you can rerun tests at any time to validate changes, speed up iteration, and deploy with confidence.
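As a rough illustration of why rerunning the same tests matters, the sketch below compares per-case scores from two evaluation runs and lists which cases got better and which got worse. The case names and scores are made up for the example, and `compare_runs` is a hypothetical helper, not part of n8n.

```python
def compare_runs(baseline: dict[str, float], candidate: dict[str, float]) -> dict[str, list[str]]:
    """Group test cases by whether the candidate run scored higher, lower, or the same."""
    result = {"improved": [], "regressed": [], "unchanged": []}
    # Only compare cases that appear in both runs, in a stable order.
    for case_id in sorted(baseline.keys() & candidate.keys()):
        delta = candidate[case_id] - baseline[case_id]
        if delta > 0:
            result["improved"].append(case_id)
        elif delta < 0:
            result["regressed"].append(case_id)
        else:
            result["unchanged"].append(case_id)
    return result


# Made-up scores before and after a prompt change (1.0 = pass, 0.0 = fail).
before = {"refund": 0.0, "greeting": 1.0, "escalation": 1.0, "invoice": 1.0}
after = {"refund": 1.0, "greeting": 0.0, "escalation": 0.0, "invoice": 0.0}

diff = compare_runs(before, after)
print("improved:", diff["improved"])
print("regressed:", diff["regressed"])
```

In this made-up run, the prompt change fixes one case and breaks three others, which is the kind of trade-off that is easy to miss when you only spot-check the case you were trying to fix.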

Experiment and iterate faster

AI workflows contain multiple moving parts, all of which you might want to tweak. But any tweak to your workflow, however small, can impact the output of your LLM or AI Agent. That’s where AI Evaluations come in – enabling you to confidently experiment with updates and changes, knowing that your end users aren’t going to be impacted by unexpected outputs.

AI Evaluations also empower you to test new prompts faster. Prompts are unpredictable. Any changes can vastly affect the accuracy and tone of outputs. Whenever you change a prompt in your automation, you need to be sure that you’re not inadvertently making things worse for end users.

Test alternative LLMs

We’re losing count of the number of new and updated AI models launching. (Just look at this review from Hugging Face last year!) Beyond sheer volume, updates to LLMs can introduce subtle changes under the hood that directly impact your outputs. The big question is, when is the right time to switch or upgrade, and which model should you opt for?

AI Evaluations empower you to make educated decisions, faster. Whether you want to improve speed, cost-efficiency, or accuracy, or simply test whether a new model lives up to its promises, AI Evaluations put you in the driving seat.

Keep quality high

Quality matters – to you, and to your end users. When you’re relying on AI workflows to deliver key data, it’s critical that you can trust the quality of the outputs. That’s why we were determined to craft an evaluation tool that is intuitive to use and flexible for all use cases.

How to get the most from AI Evaluations

From Prompt to Production: Smarter AI with Evaluations

Get an in-depth look at AI evaluation strategies and practical implementation techniques from n8n host Angel Menendez and expert guest, Elvis Saravia, Ph.D., a leader in AI research.

Use comparative questions in your prompts

Through much trial and error, we’ve found that comparative questions yield far more useful insights than absolute scoring systems. So rather than asking an LLM to grade an output on a scale from 1 to 10 (which introduces subjective interpretation), frame your questions as direct comparisons ("Does the new output contain the correct information?"). You’ll get more consistent and actionable feedback.
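Here is one way a comparative check like that could be wired up, sketched in Python. Everything in it is an assumption for illustration: the prompt wording, the helper names, and especially `stub_judge`, which stands in for a real LLM call so the example runs on its own.

```python
def build_comparative_prompt(question: str, reference: str, new_output: str) -> str:
    """Ask a yes/no comparison question instead of requesting a 1-10 grade."""
    return (
        f"Question: {question}\n"
        f"Reference answer: {reference}\n"
        f"New output: {new_output}\n"
        "Does the new output contain the correct information from the "
        "reference answer? Answer YES or NO."
    )


def parse_verdict(reply: str) -> bool:
    """Turn the judge's reply into a boolean; reject anything that is not yes/no."""
    words = reply.strip().lower().split()
    first = words[0].strip(".,!:;") if words else ""
    if first == "yes":
        return True
    if first == "no":
        return False
    raise ValueError(f"Expected a yes/no answer, got: {reply!r}")


def stub_judge(prompt: str) -> str:
    """Stand-in for an LLM call (assumption): says YES if the new output mentions '14 days'."""
    new_output_part = prompt.split("New output:")[1]
    return "YES" if "14 days" in new_output_part else "NO"


prompt = build_comparative_prompt(
    question="What is the refund window?",
    reference="Refunds are available within 14 days of purchase.",
    new_output="You can request a refund within 14 days.",
)
verdict = parse_verdict(stub_judge(prompt))
print("contains correct information:", verdict)
```

A yes/no verdict is easy to aggregate across a dataset as a pass rate, which avoids having to interpret what a "7 out of 10" means from one run to the next.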

To get the most robust evaluation, try to incorporate deterministic metrics, such as token count, execution time, number of tool calls, or verification that specific tools were invoked. These metrics provide unambiguous data points that complement qualitative assessments.
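A small sketch of what computing those deterministic metrics might look like is shown below. The shape of the `run` record is hypothetical, not an n8n data structure, and the token count is a rough whitespace-based proxy rather than a real tokenizer.

```python
def deterministic_metrics(run: dict, required_tool: str) -> dict:
    """Compute unambiguous metrics from a single recorded run."""
    tool_names = [call["name"] for call in run["tool_calls"]]
    return {
        # Rough proxy: whitespace-separated words, not true model tokens.
        "approx_tokens": len(run["output"].split()),
        "execution_seconds": run["finished_at"] - run["started_at"],
        "tool_call_count": len(tool_names),
        "required_tool_called": required_tool in tool_names,
    }


# Hypothetical record of one workflow run.
run = {
    "output": "Your order 1234 shipped on Monday.",
    "tool_calls": [{"name": "lookup_order"}, {"name": "format_date"}],
    "started_at": 10.0,
    "finished_at": 12.5,
}

metrics = deterministic_metrics(run, required_tool="lookup_order")
for name, value in metrics.items():
    print(f"{name}: {value}")
```

Because none of these values depend on an LLM's judgment, they stay stable across reruns and make a useful sanity check alongside the qualitative verdicts.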

If you use these two approaches in tandem, you’ll combine the nuanced understanding of LLM evaluations with the reliability of quantifiable metrics, giving you a truly comprehensive view of performance improvements.

Ensure you use robust datasets

The accuracy of your AI Evaluations depends on the datasets you test. Real-world data that’s already flowed through your workflows is a great way to uncover authentic insights. Past executions capture the full context of how your workflows operate in practice – including edge cases, unexpected input formats, and varying data volumes that might be difficult to anticipate if you’re creating test data manually. Using historical data also gives you a reliable benchmark for comparing performance improvements over time, so you can objectively measure the impact of your optimizations.
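As a hedged illustration, the sketch below turns a list of past executions into a small test dataset, dropping duplicate inputs while keeping odd-looking ones such as empty strings. The record fields (`input`, `output`) and the `build_dataset` helper are assumptions made for the example and do not reflect n8n's execution data format.

```python
def build_dataset(executions: list[dict], limit: int) -> list[dict]:
    """Build test cases from historical runs, skipping repeated inputs."""
    seen = set()
    dataset = []
    for record in executions:
        # Normalize case and whitespace so near-identical inputs count once.
        key = " ".join(record["input"].lower().split())
        if key in seen:
            continue
        seen.add(key)
        dataset.append({"input": record["input"], "reference_output": record["output"]})
        if len(dataset) == limit:
            break
    return dataset


# Hypothetical past executions, including a duplicate and an empty-input edge case.
past_executions = [
    {"input": "Where is my order?", "output": "It shipped on Monday."},
    {"input": "where is  my order?", "output": "It shipped on Monday."},
    {"input": "", "output": "Could you tell me more about what you need?"},
    {"input": "Cancel my subscription", "output": "Your subscription is cancelled."},
]

dataset = build_dataset(past_executions, limit=10)
print(f"{len(dataset)} test cases from {len(past_executions)} executions")
```

The historical outputs are kept here as reference outputs, so review them before treating them as correct answers.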

How we built Evaluations for AI Workflows

We built our AI Evaluations tool atop n8n’s execution engine – the same robust infrastructure that powers standard workflow executions. We did this for two reasons. First, we wanted to ensure consistent behavior between production and evaluation workflows. Second, it allows us to leverage core components, functionalities, and mental models that n8n users already know, so you benefit from your existing familiarity with n8n’s execution patterns.

Using workflows to evaluate workflows, we've created a meta-layer that demonstrates the flexibility and power of n8n. It also saves us future heavy lifting – as we enhance the core execution engine, the improvements automatically benefit the evaluation framework without requiring additional development effort.

Full disclosure – we made a rookie error in underestimating the scope of this project! We envisaged a few weeks of development, but it quickly rolled into a multi-month initiative as we uncovered additional requirements and refined our approach.

The biggest challenge was distilling such a complex project into an intuitive user experience. Evaluation frameworks inherently involve multiple components – test cases, metrics, execution contexts, and result analysis – which can quickly become overwhelming, even for the most experienced teams. We’re grateful to all of our end-user guinea pigs who participated in extensive interviews, which ultimately drove us to re-envision our approach multiple times, progressively simplifying the interface while preserving functionality.

What did we learn?

Test early and often

Our early, targeted testing with selected end users was invaluable. Their feedback drove us to really hone the UX/UI and meant we launched a tool that leverages similar logic to other n8n workflows, making it far easier to get started.

Distilled complexity is never going to be perfect

Packaging AI’s complexity into an intuitive interface was our biggest challenge in this project, and our UX/UI is still a work-in-progress as we strive for simplicity, despite the sophisticated underlying logic.

We ❤️ n8n

Leveraging the execution engine in new ways has given the team a renewed appreciation for n8n's versatility!

Let us know what you think! How can we improve our Evaluations for AI workflows even further?