We like drinking our own champagne at n8n, so when it came to rebuilding our internal AI assistant, we decided to see if we could do it using our own tooling. And as engineers who think in code, it was an enticing challenge to step away from the command line and experiment with building in workflows. It took us a few months, but we did it, and pretty successfully too! Plus, we learned some valuable lessons along the way. If you’re planning on building AI tools, we hope our endeavors will prove just as useful to you as they have been to us.

Hard-code, hard iterations

We’d been running a hard-coded internal AI Assistant for some time, but it was tricky to iterate on and far too complex for our PM colleagues to engage with. If you wanted to tweak the AI logic, improve a prompt, or act on a potential efficiency gain you’d spotted, you needed to dive deep into the code. All in all, it was a solid piece of engineering, but inaccessible to the folks who could benefit from it the most.

We liked the tooling we’d used for the original AI Assistant, so we knew we’d keep LangChain for orchestration and have everything running through GPT-4. But ultimately, we wanted to see if such a complicated AI use case could be built purely on n8n.

The AI Assistant

n8n’s AI Assistant has three use cases:

- Debugging user errors

- Answering natural language questions in a chat format

- Helping users set up credentials

On the backend, we run two huge vector sources that make up an internal Knowledge Base (KB) – one is our documentation, the other is n8n’s Forum. We instruct our assistant to read the documentation first, to reduce hallucinations, and then turn to the Forum for further insights.
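To make the "documentation first, Forum second" ordering concrete, here is a minimal sketch. It is not our production setup: the `retrieve` and `keyword_search` helpers, the toy corpora, and the keyword matching (standing in for real vector search) are all assumptions made for illustration.

```python
from typing import Callable

# A search function takes a query and returns matching passages, best first.
Search = Callable[[str], list[str]]

def retrieve(query: str, search_docs: Search, search_forum: Search,
             max_passages: int = 5) -> list[str]:
    """Put documentation passages first; Forum passages only fill the room left."""
    passages = [f"[docs] {p}" for p in search_docs(query)[:max_passages]]
    remaining = max_passages - len(passages)
    if remaining > 0:
        passages += [f"[forum] {p}" for p in search_forum(query)[:remaining]]
    return passages

def keyword_search(corpus: list[str]) -> Search:
    """Toy stand-in for a vector store: match passages sharing a word with the query."""
    def run(query: str) -> list[str]:
        words = set(query.lower().split())
        return [
            text for text in corpus
            if words & set(text.lower().replace("?", " ").replace(".", " ").split())
        ]
    return run

# Hypothetical sample data.
DOCS = ["The HTTP Request node supports pagination.",
        "Credentials are stored encrypted."]
FORUM = ["Q: HTTP Request pagination stops early? A: Check the completion condition."]

context = retrieve("pagination", keyword_search(DOCS), keyword_search(FORUM))
```

Labelling each passage with its source lets the prompt tell the model which passages are authoritative documentation and which are community answers.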

We set up our data in chunks. Each chunk is saved with context so the assistant can understand what part of a document it's reading, and the wider context surrounding it. Of course, we use automated n8n workflows to scrape the documentation three times a week and update the database. At the same time, we also scrape the Forum for questions that have corresponding answers, and couple them together in the KB. Drinking our own champagne ;-)
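As a rough illustration of saving chunks with context, the sketch below splits a markdown-style page on its headings and stores the document title and section name with every chunk. The `Chunk` class, the `chunk_document` function, and the heading-based splitting rule are assumptions for this example, not a description of the actual pipeline.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    doc_title: str   # which document the chunk came from
    section: str     # nearest heading above the chunk
    text: str        # the chunk body itself

    def to_embedding_text(self) -> str:
        """Prefix the body with its context so the embedding carries both."""
        return f"{self.doc_title} > {self.section}\n{self.text}"

def chunk_document(title: str, markdown: str) -> list[Chunk]:
    """Split on headings; every non-empty section becomes one chunk."""
    chunks: list[Chunk] = []
    section = "Introduction"   # used for text that appears before any heading
    buffer: list[str] = []

    def flush() -> None:
        if buffer:
            chunks.append(Chunk(title, section, " ".join(buffer)))
            buffer.clear()

    for line in markdown.splitlines():
        if line.startswith("#"):
            flush()                                # close the previous section
            section = line.lstrip("#").strip()
        elif line.strip():
            buffer.append(line.strip())
    flush()                                        # close the last section
    return chunks

# Hypothetical documentation page.
page = (
    "# Webhook node\n"
    "Receives HTTP calls.\n"
    "## Authentication\n"
    "Supports header auth.\n"
    "Supports basic auth."
)
chunks = chunk_document("Webhook node docs", page)
```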

Both the AI Service and our internal instance where the workflows live have development and production environments, so we can work on them without changing the production versions directly.

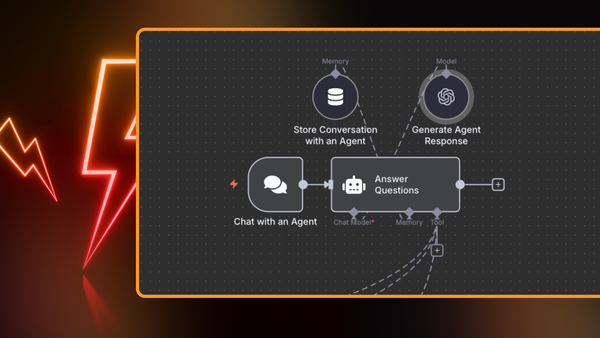

Here’s an overview of the main assistant components:

- n8n Front end: All messages sent by users from the assistant chat sidebar are first sent to our AI Service, which is a separate web service hosted internally.

- AI Service: Handles authentication for incoming requests, then calls the n8n webhook, also with authentication, so that the workflow only accepts requests from the AI Service.

- Assistant Workflows: Hosted on our internal instance. There is one main (Gateway) workflow that accepts webhook calls and routes each one to a specific agent, based on the user mode.
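One simple way to make sure a webhook only accepts calls from a single trusted service is a shared-secret header. The sketch below assumes that scheme purely for illustration; the header name, the secret value, and both helper functions are hypothetical, and the scheme actually used between the AI Service and the Gateway workflow may differ.

```python
import hmac

HEADER_NAME = "X-AI-Service-Token"     # hypothetical header name
SHARED_SECRET = "example-secret"       # hypothetical; load from a secret store in practice

def build_headers(secret: str) -> dict[str, str]:
    """What the calling service would attach to each webhook call."""
    return {HEADER_NAME: secret}

def is_from_ai_service(headers: dict[str, str], expected: str) -> bool:
    """What the webhook side would check before running the workflow."""
    presented = headers.get(HEADER_NAME, "")
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(presented.encode(), expected.encode())

accepted = is_from_ai_service(build_headers(SHARED_SECRET), SHARED_SECRET)
rejected = is_from_ai_service({}, SHARED_SECRET)
```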

Under the hood we have four distinct agents that handle the Assistant’s use cases. We chose this setup because each use case requires different input context and tooling. When a user initiates the Assistant, we route the request using n8n's Switch node to one of the four agents. The only downside of this approach is that once an agent has started a user chat, it cannot switch to another agent while in-session, so the user needs to start again.
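Expressed in code, the routing logic looks roughly like the sketch below. In n8n this is a Switch node rather than Python, and the mode keys and the `Gateway` class are assumptions for illustration. The sketch also shows the limitation mentioned above: once a session has an agent, later requests in that session stay with it.

```python
# Hypothetical mode keys mapped to the four agents described in this post.
AGENTS = {
    "error": "Generic error helper",
    "code_error": "Code node error helper",
    "support": "Support agent",
    "credentials": "Credentials helper",
}

class Gateway:
    """Routes the first request of a session by mode, then pins the session."""

    def __init__(self) -> None:
        self._sessions: dict[str, str] = {}

    def route(self, session_id: str, mode: str) -> str:
        # An existing session keeps its agent, whatever mode is sent later.
        if session_id in self._sessions:
            return self._sessions[session_id]
        if mode not in AGENTS:
            raise ValueError(f"unknown mode: {mode}")
        self._sessions[session_id] = AGENTS[mode]
        return self._sessions[session_id]

gateway = Gateway()
first = gateway.route("session-1", "support")
second = gateway.route("session-1", "credentials")   # still the Support agent
other = gateway.route("session-2", "credentials")    # a new session gets a new agent
```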

Debugging user errors

Generic error helper: Initiated when users click the Ask Assistant button in the node output panel when there is an error in the node. This agent specializes in debugging node errors, has context about the error, and has access to n8n documentation and forum answers.

Code node error helper: Specializes in debugging errors in the Code node. This agent is initiated when users click the Ask Assistant button in the node output panel when there is an error in the Code node. It has context about the user’s code and access to n8n documentation. Besides answering questions, this agent can also suggest code changes and apply them to the currently open node if the user chooses.

Answering natural language questions in a chat format

Support agent: Responds to user requests when they just open up a chat and start asking questions. This agent has context about what users are currently seeing (workflow and nodes) and has access to n8n documentation and forum answers.

Helping users set up credentials

Credentials helper: Initiated when users click the Ask Assistant button in the credentials modal. This agent has context about the user’s current node and the credentials they want to set up, and has access to n8n documentation.

Implementation

One of the trickiest things about working with AI is that the responses it produces can be completely unexpected. Ask a question one way, and you’ll get a correct answer. Switch something as small as a name or number in the prompt, and you can end up with a wildly different response.

To mitigate this, we started small, testing an agent on a user prompt like “why am I seeing this?”. We quickly realized that we needed to provide more context, because the assistant would not necessarily digest what was on the user’s screen before searching the KB. So we created a “workflow info” tool, which enables the Assistant to gather information about a specific workflow.

Now when users ask something about their workflow, or why they're seeing something specifically without explaining it, the assistant can use the “workflow info” tool to pull the error or the process from the context that is on the user’s screen. Today our support chat is delivering accurate answers by looking at the schemas the user is viewing, and using this as part of its search. We’ve included an example below, so you can see how the agent has access to different tools to debug user problems.
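To give a feel for what a tool like “workflow info” does, here is a hypothetical sketch, not the real tool. The workflow dictionary shape, the `workflow_info` and `build_search_query` functions, and the sample data are all assumptions; the point is only that a vague question gets enriched with what is on the user’s screen before the KB is searched.

```python
from typing import Optional

def workflow_info(workflow: dict, node_name: Optional[str] = None) -> dict:
    """Summarise the workflow (or one node) the user is currently viewing."""
    nodes = workflow.get("nodes", [])
    if node_name is not None:
        nodes = [n for n in nodes if n.get("name") == node_name]
    return {
        "workflow": workflow.get("name", "(unnamed)"),
        "nodes": [{"name": n.get("name"), "type": n.get("type")} for n in nodes],
        "errors": [
            {"node": n.get("name"), "message": n["error"]}
            for n in nodes if n.get("error")
        ],
    }

def build_search_query(user_message: str, info: dict) -> str:
    """Append on-screen errors to the user's message before searching the KB."""
    parts = [user_message]
    parts += [f'{e["node"]}: {e["message"]}' for e in info["errors"]]
    return " | ".join(parts)

# Hypothetical on-screen context.
workflow = {
    "name": "Sync contacts",
    "nodes": [
        {"name": "HTTP Request", "type": "httpRequest", "error": "401 Unauthorized"},
        {"name": "Set", "type": "set"},
    ],
}
info = workflow_info(workflow)
query = build_search_query("why am I seeing this?", info)
```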

AI to evaluate AI

We save executions, so all our traces are available directly in n8n, which means we have a fantastic set of internal traces for evaluating tests and prompt changes. Since we have a rich dataset of traces, we thought ‘why not use AI to fast-track the iteration process?’ and rolled out an LLM to judge the responses our LLM was producing.

However, we made a rookie error in giving the same instructions to both the Assistant and the Judge: make responses helpful, actionable, and brief. Because the Judge was grading against the very criteria the Assistant had already been told to meet, it scored every answer perfectly. So we iterated a bit on the framework.

Internally, we have a custom validation project set up in LangSmith where we can run the Assistant against different sample requests from our traces and get a score on answer quality. This enables us to test different prompts and models quickly. It took a lot of experimentation to arrive at a framework that works reliably, and of course, every time we change something we have to test it. But now we have almost 50 use cases, and we can test more accurately whether our changes are improving or degrading the quality of responses.

Today, the LLM Judge receives a user prompt and the output from the Assistant, and it judges and scores the output against a set of instructions, such as ‘should X be included?’, ‘is this high-priority?’, and ‘is this output actionable?’. So far, the quality seems to be a bit better than our old model!
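The shape of this evaluation loop can be sketched as follows. This is an illustration under stated assumptions, not the LangSmith project itself: the rubric criteria, the `judge_answer` and `run_suite` helpers, and especially `stub_judge` (a keyword check standing in for a real LLM call) are hypothetical.

```python
from typing import Callable

# A judge answers one rubric question about one answer: pass or fail.
Judge = Callable[[str, str, str], bool]   # (user_prompt, answer, criterion)
Assistant = Callable[[str], str]

# Hypothetical rubric, separate from the instructions given to the Assistant.
RUBRIC = ["names the affected node", "is actionable", "is brief"]

def judge_answer(user_prompt: str, answer: str, rubric: list[str], judge: Judge) -> float:
    """Fraction of rubric criteria the answer passes, between 0.0 and 1.0."""
    passed = sum(1 for criterion in rubric if judge(user_prompt, answer, criterion))
    return passed / len(rubric)

def run_suite(cases: list[str], assistant: Assistant, rubric: list[str], judge: Judge) -> float:
    """Average score over all sample requests, for comparing prompt or model changes."""
    scores = [judge_answer(p, assistant(p), rubric, judge) for p in cases]
    return sum(scores) / len(scores)

def stub_judge(user_prompt: str, answer: str, criterion: str) -> bool:
    """Keyword checks standing in for an LLM call (assumption for this sketch)."""
    checks = {
        "names the affected node": "node" in answer.lower(),
        "is actionable": "try" in answer.lower(),
        "is brief": len(answer.split()) <= 40,
    }
    return checks[criterion]

cases = ["why does my request fail?"]
good = run_suite(cases, lambda p: "Try re-entering the API key on the HTTP Request node.", RUBRIC, stub_judge)
vague = run_suite(cases, lambda p: "Something went wrong.", RUBRIC, stub_judge)
```

Running the same suite before and after a prompt change gives two numbers to compare, which is what makes regressions in other use cases visible.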

Lessons learned

Time lag is ok

Something we realized early on is that users don’t mind waiting a few seconds for useful responses. We assumed that an agent taking 10 seconds to stream a response would be a barrier to adoption, but it's not at all. So even though we’ve noticed a slight increase in response times, we haven’t seen a decrease in error resolution.

Iterate, iterate, iterate!

As engineers, we often lean on our gut feelings in code – you can see where to make tweaks and see how the program responds. But with AI, you can try asking the same question in a different way and end up with a completely different answer. And sometimes you change a prompt to find that it improves one use case, but makes three others worse!

AI is so much less deterministic, and the way you evaluate and evolve the responses you’re getting has to be approached with a different mindset. Trial and error became the new normal for us as we iterated on every aspect of our assistant to see what would work. While this took some time investment, ultimately it paid off because we now have high-quality responses, and response times just keep improving.

Be open beyond code

We’re engineers, and it was admittedly a challenge to shift our outlook from working in code to working in workflows. This project completely changed our minds on low-code approaches – building and migrating our AI assistant was so much easier than we’d envisaged, and we’re really impressed with how successful this project has been on such a large-scale dataset.

What’s next?

Word got out pretty quickly about our new AI Assistant, and n8n’s Support Team is already leveraging the KB and AI workflows we built to see if they can improve the quality and speed of their responses.

We’re now looking at how we can increase the abilities of our AI Assistant over time – like being able to build a workflow from a prompt. And because we’ve set everything up in n8n, we can also easily experiment with different LLMs for auxiliary actions. We’re also considering introducing another AI Agent to the four that we currently run, so that users will be able to switch agents while in-session.

Give it a spin

We’re open source enthusiasts – so of course we’ve published AI Assistant workflows! Have a look at some of our popular templates below, and let us know what you think.

RAG Chatbot for Company Documents using Google Drive and Gemini

👉 This workflow implements a Retrieval Augmented Generation (RAG) chatbot that answers employee questions based on company documents stored in Google Drive.

BambooHR AI-Powered Company Policies and Benefits Chatbot

👉 This workflow enables companies to provide instant HR support by automating responses to employee queries about policies and benefits.

You can also connect with Niklas and Milorad on LinkedIn and learn directly from them.