Retrieval-Augmented Generation (RAG) is essential for building LLM applications that can access and reason over up-to-date, proprietary, or domain-specific information. By using RAG, LLMs can overcome the limitations of relying solely on their pre-trained knowledge.

Two popular frameworks for building RAG chatbots are LlamaIndex and LangChain.

This article provides a comparative analysis of LlamaIndex and LangChain, highlighting their core strengths, key differences, and ideal use cases. We will then introduce n8n as a LangChain alternative. n8n is a powerful low-code automation platform, particularly well-suited for RAG workflows that require extensive integrations and visual workflow design.

So let's get straight into it!

LlamaIndex vs LangChain: A comparative analysis

Here's a comparative table to summarize the key differences between LlamaIndex and LangChain:

| Criteria | LlamaIndex | LangChain |
| --- | --- | --- |
| Primary focus | Data connection, indexing, and querying for RAG. | Building and orchestrating complex LLM workflows, including agents and chains. |
| Ease of use | Easier to learn and use, especially for beginners. High-level API simplifies common tasks. | Steeper learning curve. Requires a deeper understanding of LLM concepts. |
| Data ingestion | Extensive data connectors through LlamaHub (APIs, PDFs, databases, etc.). Streamlined data loading and indexing. | Supports data loading, but focus is more on data transformation within the pipeline. |
| Querying | Sophisticated querying capabilities, including subqueries and multi-document summarization. Optimized for retrieval. | Flexible querying, but often requires more manual configuration. |
| Flexibility | Less flexible, more opinionated. Good for standard RAG use cases. | Highly flexible and modular. Allows swapping LLMs, customizing prompts, and building complex chains using various LangChain chain types. |
| Extensibility | Primarily through LlamaHub and custom data connectors. | Highly extensible through custom chains, agents, and tools. |
| Customization | Some customization options, but fewer than LangChain. | Highly customizable, with a great degree of control. |
| Free for commercial use? | Yes | Yes |
| Use cases | RAG chatbots, document Q&A, knowledge base querying, data augmentation. | Complex reasoning systems, multi-agent applications, applications requiring integration with multiple tools and APIs. You can also use LangChain for RAG workflows. |
| Repository | LlamaIndex GitHub | LangChain GitHub |

Which is better: LlamaIndex or LangChain?

Both LlamaIndex and LangChain are powerful frameworks for building LLM-powered applications, particularly those leveraging RAG.

However, the two frameworks have distinct strengths that make them better suited for different use cases.

Ease of use

LlamaIndex generally has a gentler learning curve. Its high-level API and focus on data connection and querying make it easier to get started, especially for developers new to LLMs. For example, if you need to quickly build a RAG chatbot that answers questions over a collection of PDF documents, LlamaIndex's data loaders and index structures simplify this process considerably.

LangChain, while more powerful, has a steeper learning curve. Its modularity and flexibility require a deeper understanding of LLM concepts and the various components involved.

Data handling and indexing

LlamaIndex excels in this area. It provides various indexing strategies optimized for different types of data and retrieval needs. For instance, you can easily load data from APIs, databases, and local files, and choose between vector, tree, or keyword-based indexes.
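To make the difference between keyword-based and vector indexes more concrete, here is a minimal, library-free sketch. It does not use LlamaIndex's API: the corpus, the function names, and the bag-of-words `embed` stand-in are all assumptions made for illustration, and a real vector index would rely on a learned embedding model.

```python
import math
import re
from collections import Counter, defaultdict

# Toy corpus; the document IDs and texts are made up for illustration.
DOCS = {
    "doc1": "LlamaIndex loads data from APIs, databases and local files.",
    "doc2": "LangChain chains together prompts, tools and agents.",
    "doc3": "Vector indexes retrieve text by embedding similarity.",
}

def tokenize(text):
    """Lowercase the text and split it on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_keyword_index(docs):
    """Inverted index: token -> set of document IDs containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index

def keyword_search(index, query):
    """Return the IDs of documents that contain every query token."""
    token_sets = [index.get(t, set()) for t in tokenize(query)]
    return set.intersection(*token_sets) if token_sets else set()

def embed(text):
    """Stand-in 'embedding': a bag-of-words count vector, not a real model."""
    return Counter(tokenize(text))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def vector_search(docs, query, top_k=1):
    """Rank documents by cosine similarity to the query vector."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(docs[d])), reverse=True)
    return ranked[:top_k]
```

The keyword index only returns exact token matches, while the vector search ranks every document by similarity, which is why the two suit different retrieval needs.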

LlamaIndex has a user-friendly approach to data ingestion, primarily through LlamaHub. This central repository offers a wide array of data connectors for common sources like APIs, PDFs, documents, and databases. This extensive and easily accessible collection of connectors significantly simplifies and speeds up the process of integrating diverse data sources into your RAG pipeline.

LangChain, in contrast, does not enforce a specific indexing approach but instead allows users to structure their own pipelines based on their preferred tools. This flexibility makes it suitable for developers who want more control over their retrieval strategies, though it may require additional setup compared to LlamaIndex’s built-in indexing mechanisms.

LangChain also supports data loading with its own set of document loaders. While it might not have a single, unified hub like LlamaHub, LangChain's data loaders are flexible and can be customized. This approach provides greater control over the data loading process, which is advantageous for developers who need to implement highly specific or custom data ingestion logic.

Flexibility

LangChain offers significantly more flexibility and control. Its modular architecture allows you to swap out different LLMs, customize prompt templates, and chain together multiple tools and agents. This is crucial if you're building complex applications, such as a multi-step reasoning system or an application that needs to interact with multiple external services.
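The idea of a modular chain with swappable parts can be sketched in plain Python. This is not LangChain code: `make_chain`, `fake_llm_upper`, and `fake_llm_reverse` are hypothetical names, and the "models" are trivial string functions standing in for real LLM calls.

```python
from typing import Callable

# In this sketch, a "model" is any function from prompt text to completion text.
LLM = Callable[[str], str]

def fake_llm_upper(prompt: str) -> str:
    """Hypothetical stand-in model: echoes the prompt in upper case."""
    return prompt.upper()

def fake_llm_reverse(prompt: str) -> str:
    """A second hypothetical model, used to show that models are swappable."""
    return prompt[::-1]

def make_chain(template: str, llm: LLM, parser: Callable[[str], str] = str.strip):
    """Compose prompt formatting, a model call, and output parsing into one callable."""
    def run(**variables: str) -> str:
        prompt = template.format(**variables)  # fill in the prompt template
        return parser(llm(prompt))             # call the model, then parse its output
    return run

# Same template, two different models: only one argument changes.
summarize = make_chain("Summarize: {text}", fake_llm_upper)
summarize_alt = make_chain("Summarize: {text}", fake_llm_reverse)
```

Because the template, the model, and the parser are independent arguments, each can be replaced without touching the others, which is the kind of modularity described above.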

LlamaIndex, while offering some customization, is more opinionated in its approach, prioritizing ease of use over fine-grained control.

Querying capabilities

LlamaIndex is optimized for sophisticated querying within RAG systems. It supports advanced querying techniques like subqueries (querying across multiple documents or indexes) and multi-document summarization.
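As a rough illustration of subqueries, the sketch below sends one sub-question to each of two toy indexes and joins the retrieved passages. It is an assumption-laden simplification rather than LlamaIndex's implementation: the data is invented, `decompose` is a naive rule, and real systems typically ask an LLM to generate the sub-questions and to synthesize the final answer.

```python
import re

# Two toy "indexes": each maps a document name to its text (made-up content).
INDEXES = {
    "q1_report": {
        "revenue": "Q1 revenue was 10 million.",
        "costs": "Q1 costs were 4 million.",
    },
    "q2_report": {
        "revenue": "Q2 revenue was 12 million.",
        "costs": "Q2 costs were 5 million.",
    },
}

def tokens(text):
    """Return the set of lowercase alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(index, question):
    """Return the text in one index that shares the most words with the question."""
    q = tokens(question)
    return max(index.values(), key=lambda text: len(q & tokens(text)))

def decompose(question, index_names):
    """Naive decomposition: one sub-question per index, tagged with the index name."""
    return [(name, f"{question} ({name})") for name in index_names]

def gather_context(question):
    """Run each sub-question against its index and join the retrieved passages."""
    parts = [retrieve(INDEXES[name], sub_q) for name, sub_q in decompose(question, INDEXES)]
    return " ".join(parts)
```

In a full pipeline, the joined passages would be passed to an LLM as context for the final answer.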

LangChain offers flexible querying options, but often requires more manual configuration to achieve advanced querying patterns. You have the building blocks to create complex query chains, but you need to assemble them yourself.

Memory management

LlamaIndex offers basic context retention capabilities, allowing for simple conversational interactions. This is sufficient for straightforward RAG chatbots where maintaining short-term conversation history is needed for context.

LangChain offers more advanced memory management, which matters for sophisticated conversational AI applications that require extensive context retention, an understanding of conversation history, and complex multi-turn reasoning.
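The simplest form of conversational memory is a sliding window over recent turns. The sketch below shows that idea in plain Python; `WindowMemory` is a hypothetical class written for this article, not a LangChain or LlamaIndex API, and production memory components may also summarize or persist older turns.

```python
from collections import deque

class WindowMemory:
    """Keeps only the last `max_turns` user/assistant exchanges."""

    def __init__(self, max_turns=2):
        # A deque with maxlen silently drops the oldest turn when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, user, assistant):
        """Record one completed exchange."""
        self.turns.append((user, assistant))

    def as_prompt(self, new_message):
        """Render the retained history plus the new message as a single prompt."""
        lines = []
        for user, assistant in self.turns:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        lines.append(f"User: {new_message}")
        return "\n".join(lines)

memory = WindowMemory(max_turns=2)
memory.add("Hi", "Hello!")
memory.add("What is RAG?", "Retrieval-augmented generation.")
memory.add("Who uses it?", "Teams building document Q&A.")
prompt = memory.as_prompt("Is it hard to set up?")
```

With `max_turns=2`, the first exchange has already been dropped by the time the prompt is rendered, which keeps the prompt short at the cost of forgetting older context.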

Can I use LangChain and LlamaIndex together?

Yes, you can absolutely use LangChain and LlamaIndex together! In fact, combining them can be a powerful way to leverage the strengths of each framework. Here's why and how:

Use LlamaIndex for data management

You can use LlamaIndex's powerful data connectors and indexing capabilities to efficiently load, structure, and index your data from various sources. This creates a robust knowledge base for your RAG system. As we mentioned earlier, while LangChain connectors are also available, LlamaIndex excels in this area.

Use LangChain for orchestration

Use LangChain's chains, agents, and tools to build the overall logic and workflow of your application. Integrate LlamaIndex's query engine as a tool within your LangChain workflow, allowing you to retrieve relevant information from your indexed data.
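Conceptually, "query engine as a tool" means wrapping a retrieval function so that an orchestrator can choose it alongside other tools. The following library-free sketch shows that pattern under stated assumptions: `Tool`, `knowledge_base_query`, `add_numbers`, and the rule-based `choose_tool` are all hypothetical, and in a real agent the tool-selection step would be made by an LLM and the knowledge base would be an actual index.

```python
from dataclasses import dataclass
from typing import Callable, Dict

@dataclass
class Tool:
    name: str
    description: str
    func: Callable[[str], str]

def knowledge_base_query(question: str) -> str:
    """Stand-in for an index query engine; returns a canned passage."""
    facts = {"refund": "Refunds are processed within 14 days."}
    for keyword, passage in facts.items():
        if keyword in question.lower():
            return passage
    return "No relevant passage found."

def add_numbers(expression: str) -> str:
    """Adds whitespace-separated numbers, e.g. '2 3.5' gives '5.5'."""
    return str(sum(float(x) for x in expression.split()))

TOOLS: Dict[str, Tool] = {
    "knowledge_base": Tool("knowledge_base", "Answers questions from indexed documents.", knowledge_base_query),
    "add_numbers": Tool("add_numbers", "Adds a list of numbers.", add_numbers),
}

def choose_tool(user_input: str) -> Tool:
    """Rule-based stand-in for the tool-selection step an LLM would perform."""
    parts = user_input.split()
    is_numeric = bool(parts) and all(p.replace(".", "", 1).isdigit() for p in parts)
    return TOOLS["add_numbers" if is_numeric else "knowledge_base"]

def run_agent(user_input: str) -> str:
    """Pick a tool, call it, and label the result with the tool's name."""
    tool = choose_tool(user_input)
    return f"[{tool.name}] {tool.func(user_input)}"
```

The orchestrator only sees a name, a description, and a callable, so the retrieval backend behind `knowledge_base` can be replaced without changing the agent logic.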

What are the limitations of LlamaIndex and LangChain?

LlamaIndex limitations

- Primarily focused on data retrieval, making it less suitable for:
  - Highly complex LLM applications with intricate, multi-step workflows
  - Applications requiring interactions with numerous external services
- Supports only basic context retention, which may be insufficient for:
  - Applications needing extensive conversational memory
  - Complex reasoning across multiple turns

LangChain limitations

- High flexibility comes with:
  - A steep learning curve, especially for developers new to LLMs
  - More intricate initial setup and configuration
  - Increased debugging and maintenance overhead, particularly for sophisticated applications
- Frequent breaking changes between versions, often requiring ongoing code adjustments.

An alternative to LlamaIndex or LangChain: n8n

While LangChain and LlamaIndex are powerful tools for building LLM applications, they primarily focus on a code-centric approach.

n8n offers a compelling alternative by providing a low-code environment that seamlessly integrates with LangChain. This means you can get the power and flexibility of LangChain without the complexity of managing its underlying code directly.

Why choose n8n over LlamaIndex and LangChain?

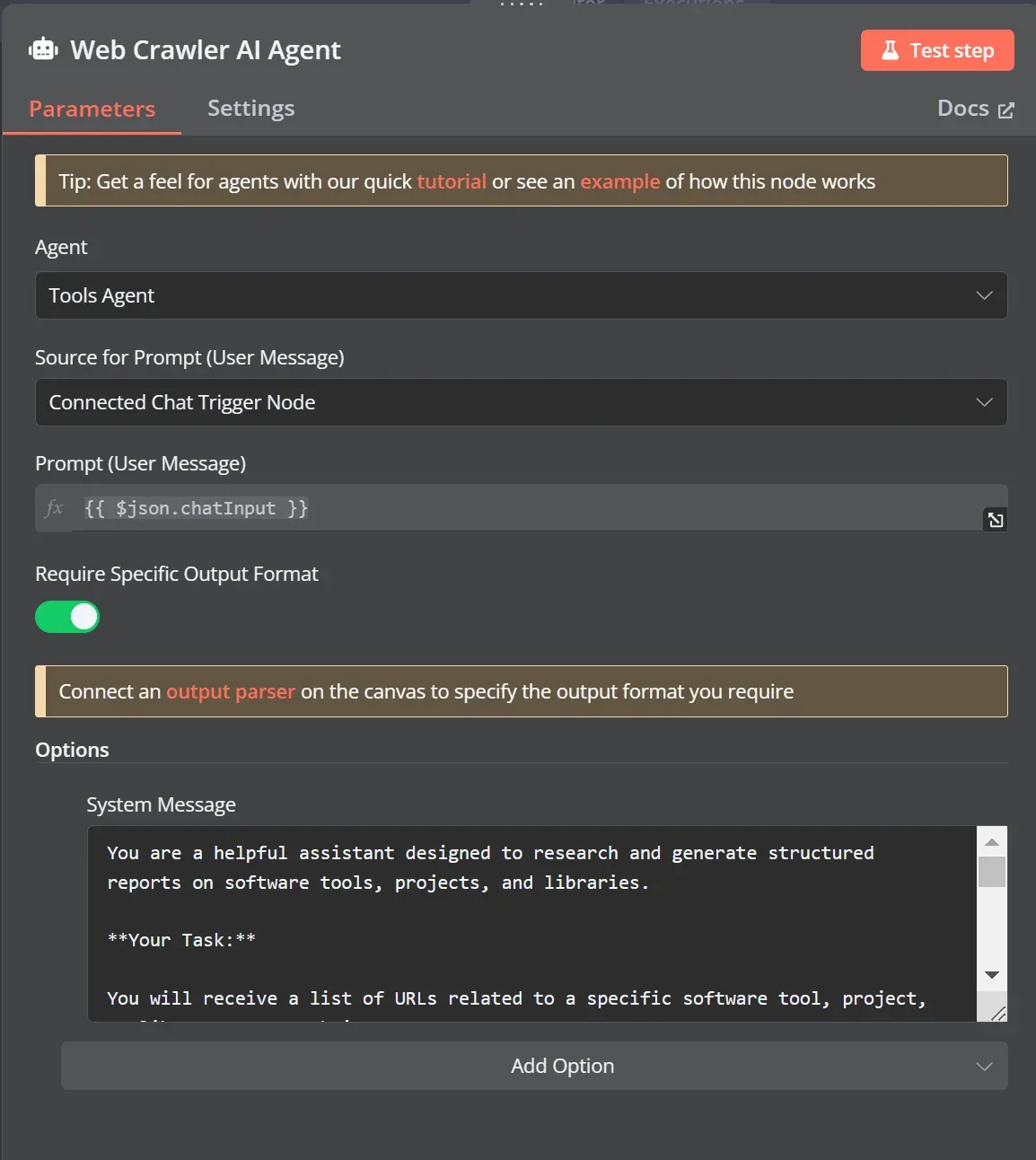

- LangChain's power with low-code: n8n's AI Agent node allows you to use LangChain's features, such as agents and memory, without writing complex Python code.

- Extensive integrations: n8n boasts 400+ integrations with various apps and services such as databases, CRMs, marketing platforms, communication tools, and virtually any service with an API. This is a significant advantage over LangChain and LlamaIndex, which primarily focus on LLM interactions. With n8n it’s also very easy to integrate vector databases, such as Pinecone, with LangChain.

- Visual workflow builder: n8n provides a user-friendly, drag-and-drop interface for building workflows, making it accessible to both technical and non-technical users. This can be a significant advantage over the code-heavy approach of LangChain and LlamaIndex.

- Native AI capabilities: n8n has built-in support for LangChain, including an Ollama integration for local LLM usage, so you can incorporate LLM interactions into your workflows. You configure your LangChain chains and agents within the node, and n8n handles execution and integration with the rest of your workflow. This way, you use LangChain's advanced features (agents, memory, custom chains) without writing intricate Python.

- Rapid prototyping and iteration: you can quickly experiment with different RAG approaches, connect to various data sources, and test different LLM configurations.

- Simplified configuration: While you can use advanced LangChain features, the visual interface simplifies configuration using the built-in LangChain templates. You set up prompts, input variables, and output handling within the LangChain node's settings.

- Hybrid approach: you can combine the ease of visual workflow design with the power of custom code using n8n's LangChain code node.

Examples of RAG workflows built with n8n

Let's explore some real-world workflow examples that illustrate the versatility of n8n for RAG and Agentic AI!

AI-Powered RAG workflow for stock earnings report analysis

This n8n workflow creates a financial analysis tool that generates reports on a company's quarterly earnings using OpenAI GPT-4o-mini, Google's Gemini AI, and Pinecone's vector search. The workflow fetches earnings PDFs, parses them, generates embeddings, and stores them in Pinecone. An AI agent orchestrates the process, using Pinecone and LLMs to analyze data and generate the report, which is then saved to Google Drive.

Complete business WhatsApp AI-powered RAG chatbot using OpenAI

This workflow allows you to create an AI-powered chatbot for WhatsApp Business that uses RAG to provide accurate and relevant information to customers. The workflow sets up webhooks to handle incoming messages, processes them, and utilizes an AI agent with a predefined system message to ensure appropriate responses. It accesses a knowledge base stored in Qdrant (a vector database like Pinecone), generates responses using OpenAI's GPT model, and sends them back to the user.

Effortless email management with AI-powered summarization & review

This workflow automates the handling of incoming emails, summarizes their content, generates responses using RAG, and obtains approval before sending replies. It listens for new emails, summarizes them, generates responses, and sends drafts for human review via Gmail. If approved, the email is sent; otherwise, it's edited or handled manually. A text classifier categorizes feedback to guide the process.

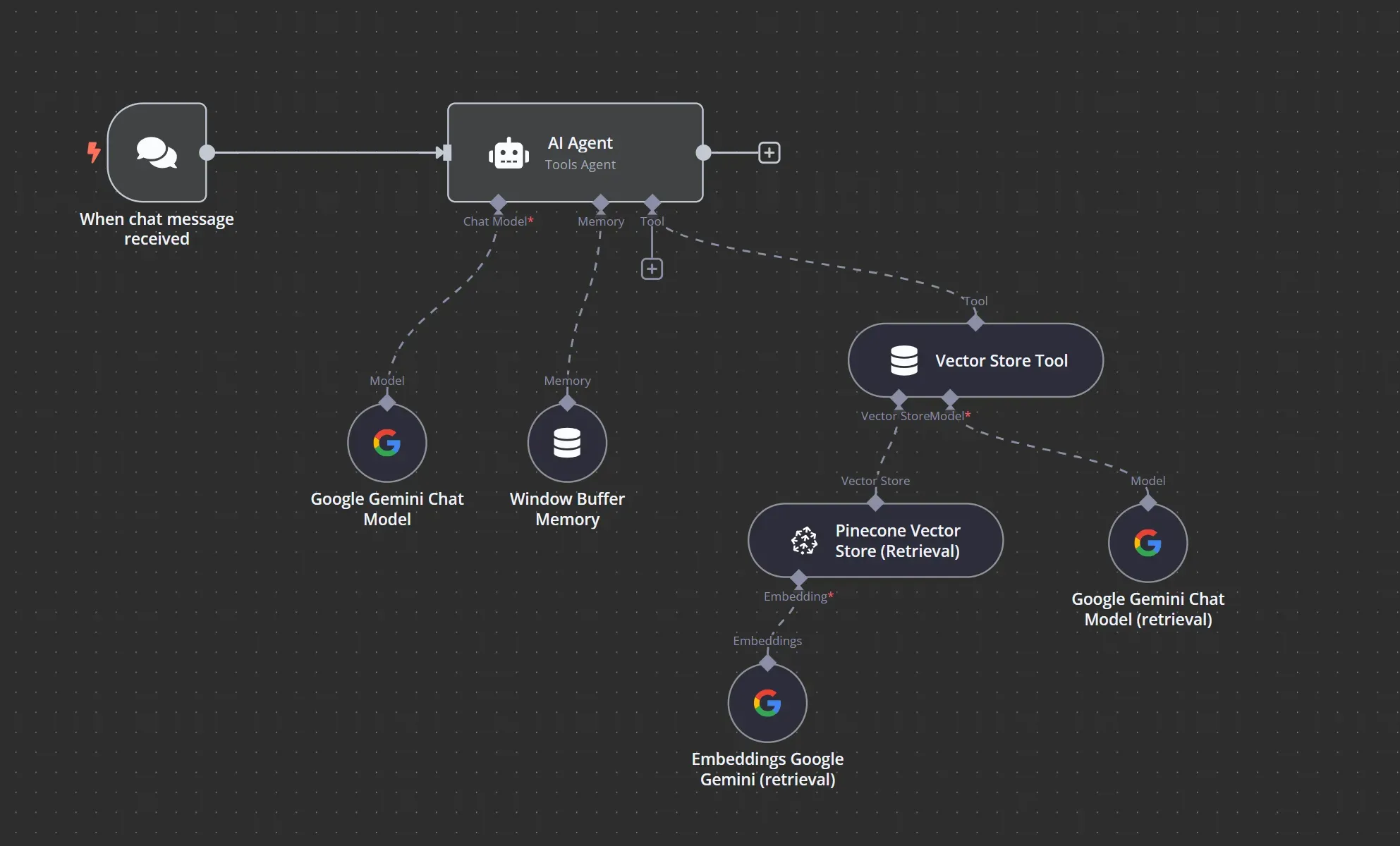

RAG chatbot for company documents using Google Drive and Gemini

This workflow implements a Retrieval-Augmented Generation (RAG) chatbot that answers employee questions based on company documents stored in Google Drive. It automatically indexes new or updated documents in a Pinecone vector database, allowing the chatbot to provide accurate and up-to-date information. The workflow uses Google's Gemini AI for both embeddings and response generation. When a new or updated document is detected, the workflow downloads it, splits it into chunks, embeds the chunks using Gemini, and stores them in Pinecone. The chatbot receives questions, retrieves relevant information from Pinecone based on the question, and generates answers using the Gemini chat model.
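The ingestion steps in this workflow (split, embed, store, then retrieve) can be sketched in a few lines of plain Python. This is an illustrative stand-in, not the n8n workflow itself: `ToyVectorStore` is an in-memory substitute for Pinecone, `embed` is a bag-of-words counter rather than a Gemini embedding, and the chunk sizes and sample text are arbitrary assumptions.

```python
import math
import re
from collections import Counter

def split_into_chunks(text, chunk_size=8, overlap=2):
    """Split text into word windows of `chunk_size` words that overlap by `overlap` words."""
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + chunk_size]) for i in starts]

def embed(text):
    """Stand-in 'embedding': a bag-of-words count vector, not a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.records = {}  # (doc_id, chunk_no) -> (vector, chunk_text)

    def upsert_document(self, doc_id, text):
        """Remove stale chunks for doc_id, then index the current version."""
        self.records = {k: v for k, v in self.records.items() if k[0] != doc_id}
        for n, chunk in enumerate(split_into_chunks(text)):
            self.records[(doc_id, n)] = (embed(chunk), chunk)

    def query(self, question, top_k=1):
        """Return the texts of the top_k chunks most similar to the question."""
        q = embed(question)
        ranked = sorted(self.records.values(), key=lambda r: cosine(q, r[0]), reverse=True)
        return [text for _, text in ranked[:top_k]]

store = ToyVectorStore()
store.upsert_document("handbook", "Employees receive 20 vacation days per year.")
# Simulate an updated file: re-indexing replaces the old chunks.
store.upsert_document("handbook", "Employees receive 25 vacation days per year.")
```

Deleting a document's old chunks before re-indexing is what keeps answers up to date when a file changes. The retrieved chunks would then be passed to the chat model as context.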

LangChain vs LlamaIndex vs n8n FAQ

Which is better for building autonomous AI agents, Auto-GPT or LangChain?

Auto-GPT is better for fully autonomous agents that operate with minimal human input, while LangChain offers greater flexibility for building custom workflows and integrating multiple external tools and APIs.

Which tool is best for retrieval-augmented generation (RAG): LangChain, LlamaIndex, or Haystack?

Haystack is the best choice for search-heavy RAG applications, LlamaIndex excels at indexing and querying large datasets, and LangChain is ideal for orchestrating complex LLM workflows that involve both retrieval and external integrations.

When should I use LangChain, LlamaIndex, or Hugging Face for an LLM project?

Use LangChain for complex workflows and multi-step logic, LlamaIndex for efficient data retrieval, and Hugging Face for accessing and fine-tuning pre-trained LLMs across a wide range of tasks.

Can LangChain be used with Python only?

LangChain offers official libraries for both Python and JavaScript. While the Python library was initially more mature, both libraries are now actively developed and maintained, offering comparable features and functionality. You can choose either language based on your preference and project requirements.

There are community-driven adaptations of LangChain for Java and Golang. Additionally, platforms like n8n provide a visual interface and pre-built nodes for LangChain.

Can you use LangChain with Ollama local LLMs?

Yes, LangChain provides official components for integrating with Ollama. This can be beneficial for various reasons, including privacy, security, and cost-effectiveness.

Can you use LangChain with Pinecone or other vector databases?

LangChain is designed to work seamlessly with Pinecone and other vector databases. Integrating a vector database like Pinecone with LangChain is a common practice for building efficient and scalable RAG applications.

What is LangSmith?

LangSmith is a platform designed specifically for debugging, testing, and evaluating LLM applications. It helps developers improve the performance and reliability of their LangChain projects by providing tools to trace workflow execution and evaluate accuracy.

Wrap up

In this article, we provided a comparative analysis of LlamaIndex and LangChain, two powerful frameworks for building RAG systems. We highlighted their strengths, limitations, and use cases, and also introduced n8n as a compelling alternative for those seeking a low-code, integration-heavy solution.

Choosing the right framework for your LLM application depends on your specific needs and priorities.

If you need a simple and efficient solution for data-centric tasks, LlamaIndex is a great choice. If you require greater flexibility and control for complex workflows, LangChain might be a better fit. And if you're looking for a broader automation platform that seamlessly integrates with LLMs, n8n offers a compelling alternative.

What’s next?

To further enhance your understanding of RAG and LLM application development, check out these resources on the n8n blog:

- LLM agents in 2025: Learn about the latest advances in LLM agents and how they are being used in enterprise environments.

- RAG chatbots: Learn how to build a RAG chatbot that can access and process information from your documents or knowledge base using n8n.

- Use n8n to integrate LangChain with local LLMs: Learn how to run LLMs like DeepSeek locally on your own machine using n8n and Ollama.

- AI-agentic workflows: Learn how AI agents can be used to automate tasks and make decisions in n8n workflows.

- MLOps tools: Explore the different MLOps tools available and how they can be used to manage the entire lifecycle of your ML models.