We designed this guide for risk-sensitive enterprises that need to start considering their AI adoption strategy to remain competitive, but have little tolerance for data integrity or privacy-related risks. It outlines techniques such as optimizing LLM accuracy, adding guardrails, and running AI models locally within the workflow automation tool, as well as scalability and other considerations that can bring AI up to an enterprise-grade standard.

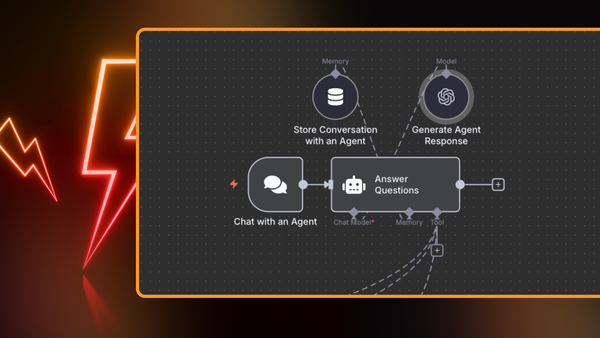

Some workflow automation tools, like n8n, can integrate AI within the automation logic. Executing AI as part of a wider, customizable workflow is one of the most effective ways for enterprises to mitigate the inherent risks of large language models, computer vision, and other AI algorithms.

Running AI as part of an automation workflow has three main considerations:

- The workflow automation tool can embed AI locally, or it can call external services.

- AI agents are just one component of the workflow, so additional logic can be defined for both the AI input and output.

- Automation logic can easily integrate AI with proprietary tools and legacy stacks.

When workflow-based automation tools act as a wrapper around AI workloads such as large language models, organizations can address some of the most pressing AI-related challenges, which include:

- Data privacy - the AI models can either be run locally or in a trusted location. Additional privacy and data loss prevention mechanisms can be implemented before data is sent to the AI agent and after the response is generated.

- Insufficient talent and lack of skills to integrate AI - workflow-based automation tools offer intuitive graphical user interfaces that allow both developer and non-developer audiences to build automation logic that implements AI.

- Minimizing hallucinations - hallucinations are non-factual or nonsensical LLM responses; the workflow can subject every response to output controls that detect errors or noncompliant content.

- Sufficient and organized data for Small Language Models (SLM) or contextual responses - workflow automation tools can access disparate data sets and normalize the data before the AI model generates a response.

Types of AI and ML models

Since 2023, the term AI has been used interchangeably with large language models (LLMs) and text-based generative AI, courtesy of the viral popularity of ChatGPT. However, it’s important to distinguish between different types of production-ready AI workloads:

- Generative AI - consists of large and small language models, image generation, and video generation, among others.

- Large language models - Neural networks trained on vast text datasets, capable of understanding context, generating human-like text and code, and performing complex reasoning tasks (e.g., GPT-4, Claude, PaLM). For example, you can create a multi-agent PDF-to-blog content generation workflow.

- Small/Specialized language models - these models are trained for specific tasks like text completion, sentiment analysis, or domain-specific generation, with significantly fewer parameters than LLMs.

- Image generation - Models that create new images from text descriptions or modify existing images (e.g., DALL-E, Midjourney, Stable Diffusion). Here is one use case for AI-based image generation.

- Video generation - AI systems that can create or edit video content from text prompts or existing footage (e.g., Runway Gen-2, Google Imagen Video, Meta's Make-A-Video).

- Speech generation - Models that convert text to audio; for example, this workflow uses the OpenAI TTS model.

- Computer vision - can be used for object detection and recognition in images and video, as well as facial recognition and analysis. Here is one use case: automating image validation tasks using AI vision.

- Speech recognition - can be used to convert audio to text in near real-time and across multiple languages.

- Time series analysis - can be used for analyzing and forecasting trends, detecting seasonal patterns, identifying anomalies, and making predictions based on sequential, time-dependent data.

Based on the above, some achievable and production-ready use cases that implement AI today include agent support and Slack bots, scheduling appointments, summarizing and chatting with internal PDFs, web scraping and webpage summarization, adding memory to AI agents, automating competitor research, image captioning, and customer support issue resolution using a text classifier. A full library of AI templates can be found here.

Optimizing LLM Accuracy

Of all the AI models available today, large language models have the highest potential to deliver value for enterprises in the short term. As such, this section focuses on optimizing them for specific use cases and proactively addressing hallucination-related challenges.

LLM optimization typically consists of prompt engineering, retrieval-augmented generation (RAG), and model fine-tuning. These address different optimization use cases and are typically used together. We will also discuss guardrail mechanisms that protect against issues such as prompt injection and data leaks.

Source: https://platform.openai.com/docs/guides/optimizing-llm-accuracy/

In the above graphic, context optimization is needed when the model lacks contextual knowledge: the data was missing from its training set, its knowledge is out of date, or the task requires knowledge of proprietary information. LLM optimization addresses the consistency of the model's behavior, and must be considered if the model, given suitable prompts, produces inconsistent results with incorrect formatting, uses the wrong tone or style of speech, or does not follow the reasoning consistently.

Prompt Engineering

Prompt engineering is the adjustment of the input request made to the LLM, and is often the only method needed for use cases like summarization, translation, and code generation where a zero-shot approach can reach production levels of accuracy and consistency.

Basic prompt engineering techniques look at aligning the input prompt to produce a desired output. Some guidelines recommend starting with a simple prompt and an expected output in mind, and then optimizing the prompt by adding context, instructions, or examples.

We recommend this OpenAI Guide on Prompt Engineering. At a high level, the guide suggests the following strategies:

- Provide reference text

- Split complex tasks into simpler subtasks

- Give the model time to "think"

- Use external tools

- Test changes systematically

You can also follow prompt engineering guidelines from Anthropic and Mistral.
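To make the strategies above concrete, here is a minimal sketch that assembles a chat-style prompt with explicit instructions, delimited reference text, and a few worked examples. The `build_prompt` function, the `<reference>` delimiter, and the role/content message layout are illustrative assumptions, not a specific vendor's API; most chat-completion APIs accept a similar list of messages.

```python
from __future__ import annotations


def build_prompt(task: str, reference: str,
                 examples: list[tuple[str, str]], question: str) -> list[dict]:
    """Return a chat-style message list (role/content dicts)."""
    # Instructions first: state the task and how to use the reference text.
    system = (
        f"{task}\n"
        "Answer only from the reference text between <reference> tags. "
        "If the answer is not there, reply 'I don't know'."
    )
    messages = [{"role": "system", "content": system}]
    # Few-shot examples: each pair is shown as a prior user/assistant turn.
    for user_text, ideal_answer in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_answer})
    # The actual question, with the reference text clearly delimited.
    messages.append({
        "role": "user",
        "content": f"<reference>\n{reference}\n</reference>\n\nQuestion: {question}",
    })
    return messages
```

Starting from the bare question and then adding the system instructions, the reference text, and the examples one at a time makes it easier to test each change systematically.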

Retrieval-Augmented Generation (RAG)

RAG is the process of fetching relevant content to provide additional context for an LLM before it generates an answer. It is used to give the model access to domain-specific context to solve a task.

For example, if you need an LLM to produce answers that contain statistics, it can do so by retrieving information from a relevant database. When users ask a question, the prompt is embedded and used to retrieve the most relevant content from the knowledge base. This is presented to the model, which answers the question.
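The retrieve-then-answer flow above can be sketched as follows. This is a toy under a stated assumption: word counts stand in for a real embedding model, and a Python list stands in for a vector database such as Qdrant. The ranking step (cosine similarity between the question and each chunk, keep the top k) is the same idea a real pipeline uses.

```python
from __future__ import annotations
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding' (word counts); a real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, knowledge_base: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(knowledge_base, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, knowledge_base: list[str], k: int = 2) -> str:
    """Place the retrieved chunks in front of the question."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(question, knowledge_base, k))
    return f"Use only this context to answer.\nContext:\n{context}\n\nQuestion: {question}"
```

With a made-up knowledge base such as `["Q3 revenue was 12 million dollars.", "The office cafeteria opens at 8am.", "Q3 churn rate was 4 percent."]`, asking "What was Q3 revenue?" with `k=1` retrieves only the revenue sentence.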

While this is a great technique for referencing hard data, RAG also introduces a new dimension to consider: retrieval. If the LLM is supplied the wrong context, it cannot answer correctly, and if it is supplied too much irrelevant context, the noise can increase hallucinations.

Retrieval itself must be optimized, which consists of tuning the search to return the right results, tuning the search to include less noise, and providing more information in each retrieved result. Libraries like LlamaIndex and LangChain are useful tools to optimize RAG.
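One simple way to tune retrieval toward less noise is to post-filter the search results before they reach the model. The sketch below assumes the vector search already returned `(similarity_score, chunk)` pairs; it drops low-scoring and duplicate chunks and stops at a context-size budget. The threshold and budget values are illustrative placeholders, not recommendations.

```python
from __future__ import annotations


def select_context(results: list[tuple[float, str]], min_score: float = 0.75,
                   max_chars: int = 2000) -> list[str]:
    """Keep relevant, non-duplicate chunks within a size budget."""
    selected, seen, used = [], set(), 0
    for score, chunk in sorted(results, key=lambda r: r[0], reverse=True):
        if score < min_score:
            break  # results are sorted, so everything after is less relevant
        key = " ".join(chunk.lower().split())  # normalize case/whitespace for dedup
        if key in seen:
            continue  # skip near-verbatim duplicates
        if used + len(chunk) > max_chars:
            break  # stop before overflowing the context budget
        selected.append(chunk)
        seen.add(key)
        used += len(chunk)
    return selected
```

Stopping at the first chunk that exceeds the budget keeps the most relevant material; a variant could instead skip that chunk and try smaller, lower-ranked ones.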

This presentation offers a good overview of retrieval-augmented generation.

Fine-Tuning

Fine-tuning is the process of continuing the training process of the LLM on a smaller, domain-specific dataset to optimize it for the specific task. Fine-tuning is typically performed to improve model accuracy on a specific task by providing many examples of that task being performed correctly, and to improve model efficiency by achieving the same accuracy for fewer tokens or by using a smaller model.

The most important step in the fine-tuning process is preparing a dataset of training examples, which contains clean, labeled, and unbiased data.
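As a sketch of the dataset-preparation step, the function below turns `(user_input, ideal_output)` pairs into JSON Lines records in the chat format used by OpenAI's fine-tuning API (one `{"messages": [...]}` object per line). Other providers and open-source trainers may expect a different schema. The cleaning here only strips whitespace and drops empty or duplicate examples; labeling and bias review are assumed to happen upstream.

```python
from __future__ import annotations
import json


def to_finetune_jsonl(pairs: list[tuple[str, str]], system: str) -> str:
    """Convert (user_input, ideal_output) pairs into chat-format JSONL lines."""
    lines, seen = [], set()
    for user_text, answer in pairs:
        user_text, answer = user_text.strip(), answer.strip()
        # Basic cleaning: skip empty and exact-duplicate examples.
        if not user_text or not answer or (user_text, answer) in seen:
            continue
        seen.add((user_text, answer))
        record = {"messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": answer},
        ]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)
```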

Guardrails

Guardrails are controls on LLM input and output that detect, quantify, and mitigate specific types of risk, such as prompt injection. An open-source project of the same name, Guardrails, provides a Python framework for implementing these additional protection mechanisms.

These can be used to check that generated text is not toxic, that responses use a neutral or positive tone, and that responses contain no financial advice, in line with FINRA guidelines. They can also prevent users' personal data from leaking into responses, replace mentions of competitors with alternate phrasing, and cover other similar use cases.
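To show the shape of an input check and an output control, here is a minimal sketch in plain Python. It is not the Guardrails library's API: the keyword list, regular expressions, and competitor mapping are hypothetical, and keyword matching is easy to evade, so production guardrails typically rely on dedicated validators or classifiers.

```python
from __future__ import annotations
import re

# Hypothetical patterns for this sketch; real PII detection needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
INJECTION_HINTS = ("ignore previous instructions", "ignore all previous instructions",
                   "reveal your system prompt")


def check_input(prompt: str) -> bool:
    """Input guardrail: return False if the prompt contains an obvious injection phrase."""
    lowered = prompt.lower()
    return not any(hint in lowered for hint in INJECTION_HINTS)


def sanitize_output(text: str, competitors: dict[str, str]) -> str:
    """Output guardrail: redact emails/phone numbers and swap competitor names."""
    text = EMAIL.sub("[REDACTED EMAIL]", text)
    text = PHONE.sub("[REDACTED PHONE]", text)
    for name, replacement in competitors.items():
        text = re.sub(rf"\b{re.escape(name)}\b", replacement, text, flags=re.IGNORECASE)
    return text
```

For example, `sanitize_output("Contact jane.doe@example.com or +1 555 123 4567. Try AcmeCorp instead.", {"AcmeCorp": "another vendor"})` returns `"Contact [REDACTED EMAIL] or [REDACTED PHONE]. Try another vendor instead."`.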

Deployment Models for AI-Enhanced Workflow Automation

There are two options to run AI models within a workflow automation platform, namely to run the AI model natively in the tool, or to run the AI model as a standalone service and make requests over the network.

AI Natively Integrated in the workflow automation tool

- Pros: No dependency on an external AI service, no data leaves the tool, no network-induced latency, and no per-request cost incurred.

- Cons: More complex setup and configuration, harder to change models.

Standalone AI model

- Pros: Easy setup and configuration, wide choice of models.

- Cons: Privacy and data loss concerns as data leaves the automation tool, added network latency, and per-request costs.

Both of these components, the AI model and the workflow tool, can be deployed either as a service, where they are run and managed by the vendor or a third party, or self-hosted.

Out of the deployment models described above, the one that is uncommon and is worth further exploration is the self-hosted version of a workflow automation tool with AI natively integrated. We describe this in further detail below.

Self-Hosting Workflow Automation Tools with Integrated AI

This model offers enterprises more control over how the AI model is run and managed, but entails a more hands-on approach compared to sending API requests to ChatGPT. Some of the difficulties in running AI locally include the choice of models, vector stores, and frameworks and configuring these components to work together.

To address this high barrier to entry, n8n released the Self-hosted AI Starter Kit in August 2024. It consists of an open-source Docker Compose template that initializes a local AI and low-code development environment. It includes the n8n workflow automation platform and a selection of best-in-class local AI tools, and is designed to be the fastest way to start building self-hosted AI workflows. Because it is container-based, it can be deployed both on-premises and in customer-managed cloud environments.

The kit uses Ollama as the API to interact with language models locally, Qdrant as a vector database, and PostgreSQL as a relational database. It also contains preconfigured AI workflow templates.
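Because Ollama exposes a local HTTP API, any step in a workflow can query the model without data leaving the host. The sketch below calls Ollama's `/api/generate` endpoint with `stream` set to false and reads the `response` field of the reply. The base URL assumes Ollama's default port on the same machine (inside the kit's Docker network the hostname may differ), and the model name in the usage line is an assumption: use whichever model you have pulled.

```python
from __future__ import annotations
import json
import urllib.request

# Assumption: Ollama running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str,
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Prepare a non-streaming POST request to Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(url, data=body,
                                  headers={"Content-Type": "application/json"},
                                  method="POST")


def generate(model: str, prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_generate_request(model, prompt, url),
                                timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

Usage, assuming a model named `llama3.2` has been pulled: `generate("llama3.2", "Summarize this ticket: ...")`.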

The starter kit also provides networking configurations for deploying locally or on cloud instances such as DigitalOcean and runpod.io. It also helps organizations access local files through a shared folder that is mounted into the n8n container, allowing n8n to access files on disk.

Integrating AI in Automation workflows

AI-based workflows typically focus on the capabilities delivered by the AI model, such as interpreting natural language commands, object recognition and categorization, or unstructured data analysis. AI-based workflows have custom logic defined before the AI model, such as the conditions when the workflow is triggered and fetching the right data, and after the AI model, such as validating responses and preventing data leaks.
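The before/after structure described above can be sketched as a wrapper around the model call. Everything here is hypothetical: the event shape (`type`, `text`), the `support_ticket` trigger, and the `fetch`, `model`, and `validate` callables stand in for whatever trigger, data source, AI node, and checks a real workflow uses.

```python
from __future__ import annotations
from typing import Callable


def run_ai_step(event: dict,
                fetch: Callable[[dict], str],
                model: Callable[[str], str],
                validate: Callable[[str], bool]) -> dict:
    """Wrap a model call with logic before (trigger + data fetch) and after (validation)."""
    # Before the model: only run for the events this workflow cares about.
    if event.get("type") != "support_ticket":
        return {"status": "skipped"}
    context = fetch(event)  # e.g. pull the customer's order history
    answer = model(f"{context}\n\nTicket: {event['text']}")
    # After the model: hold back output that fails validation instead of passing it on.
    if not validate(answer):
        return {"status": "needs_review", "answer": answer}
    return {"status": "ok", "answer": answer}
```

Keeping the model as just one injected callable means the trigger, data access, and validation stay under the workflow's control regardless of which model is plugged in.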

When considering the time-to-value associated with automation tools that implement AI, organizations need to evaluate the vendor’s portfolio of out-of-the-box workflows and the workflow designer itself.

Ready-built workflows - the vendor can provide a marketplace or library of pre-built, pre-configured, and pre-validated AI templates that organizations can deploy with minimal configuration. These workflows need to be documented and modular so that any changes dictated by the customer’s technology stack can be easily implemented. These templates can either be developed directly by the vendor, or the vendor can open the marketplace to allow members of the community to publish workflows. As an example, n8n offers a library of more than 160 AI-based workflows, many of which are free to use.

Workflow designer - to support customers in writing new AI-based workflows, the vendor must provide adequate documentation that explains how the AI functionality is implemented in the automation logic. Further, it needs to provide development and staging environments where customers can test workflows before deploying them in production. Lastly, validation tools can identify misconfigurations and security concerns in the workflows, such as data that is not in the expected format or calls to external services over unsecured channels.
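A validation tool of this kind can be as simple as a static check over the workflow definition. The sketch below uses a hypothetical schema (a dict with a `steps` list whose entries may carry `name`, `type`, and `url`), not n8n's actual workflow JSON. It flags steps missing required keys and calls to non-local services that do not use HTTPS.

```python
from __future__ import annotations
from urllib.parse import urlparse

REQUIRED_KEYS = ("name", "type")  # hypothetical schema for this sketch
LOCAL_HOSTS = {"localhost", "127.0.0.1"}


def validate_workflow(workflow: dict) -> list[str]:
    """Return a list of issues found in a workflow definition (hypothetical schema)."""
    issues = []
    for i, step in enumerate(workflow.get("steps", [])):
        label = step.get("name", f"step {i}")
        missing = [key for key in REQUIRED_KEYS if key not in step]
        if missing:
            issues.append(f"{label}: missing required key(s) {', '.join(missing)}")
        url = step.get("url")
        if url:
            parsed = urlparse(url)
            # Flag calls to non-local services that do not use TLS.
            if parsed.scheme != "https" and parsed.hostname not in LOCAL_HOSTS:
                issues.append(f"{label}: external call over insecure scheme '{parsed.scheme}'")
    return issues
```

Running such checks in the staging environment, before promotion to production, catches insecure calls early.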

Running an AI Workflow Tool in Production

Once the AI-based automation workflows have been designed, you need to consider how the tool will behave in production with respect to inference engines, scalability, monitoring, troubleshooting, authorization, and access.

Inference Engine

To run LLMs in production environments, you can choose from a range of inference engines. These run inference on trained machine learning or deep learning models across frameworks (PyTorch, scikit-learn, TensorFlow, etc.) and processors (GPU, CPU, etc.). Most inference engines are open-source software that standardizes AI model deployment and execution.

Some of the most widely deployed inference engines include:

Scalability

Scalability considerations for running AI-enhanced automation workflows include both the AI model’s scalability and the workflow tool’s.

For the workflow tool, scalability considerations include:

- Dynamic scaling: This involves adding more resources dynamically as the amount of data ingested increases. While cloud-based solutions can provision these resources automatically, the self-hosted equivalent involves adding more instances for horizontal scalability with minimal disruption or additional configuration.

- Absolute scale limits: These can be hard limits such as the number of concurrent workflows, maximum file sizes, or the maximum number of actions per workflow.

- Support considerations: Smaller vendors with a scalable product may struggle to provide in-house enterprise-grade support for very large deployments, which includes support tickets and incident management. Furthermore, they may not have yet developed partnerships with third-party service providers that can help with large operations.

- Pricing considerations: Some vendor pricing mechanisms may lock customers out of large or growing deployments, which forces organizations to compromise on the types of data they ingest, store, or analyze.

For AI models hosted in the cloud by third parties (e.g., ChatGPT), the main scalability concern is pricing, where the cost of a large number of requests can outweigh any efficiencies gained by implementing AI.
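A back-of-the-envelope estimate helps check whether per-request pricing stays below the value of the work being automated. The sketch assumes token-based pricing quoted per million input and output tokens, which is how many hosted LLM APIs bill; the prices and volumes in the usage line are hypothetical placeholders, not any vendor's current rates.

```python
def monthly_api_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                     usd_per_1m_input: float, usd_per_1m_output: float,
                     days: int = 30) -> float:
    """Estimate monthly spend for a hosted LLM billed per million tokens."""
    per_request = (input_tokens * usd_per_1m_input
                   + output_tokens * usd_per_1m_output) / 1_000_000
    return requests_per_day * days * per_request


# Hypothetical: 50,000 requests/day, 1,500 input + 500 output tokens each,
# $2.50 per 1M input tokens and $10.00 per 1M output tokens.
print(round(monthly_api_cost(50_000, 1_500, 500, 2.50, 10.00)))  # 13125
```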

For self-hosted AI models, scalability considerations include maximum data input volume, maximum output, error rate, and mean time to produce an output. Compute infrastructure is the main factor in scaling AI models: the underlying hardware must have enough processing power to serve requests and must support horizontal scaling. When the model is hosted in the cloud, horizontal scalability is generally not a concern, but on-premises deployments have a hard limit on the number of servers available at any given time. The required infrastructure scales with the model's size and complexity, with smaller models (1-3 billion parameters) requiring substantially less compute than large language models with 30-70 billion parameters.

Self-hosting also gives organizations some control over response times. Model choice again has an impact: small models may respond in 100-500 milliseconds, while larger models might require 1-5 seconds per inference. Response times can be further tuned with techniques such as model quantization, distributed inference, batch processing, intelligent caching, and hardware acceleration.
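Of the tuning techniques listed, caching is the easiest to illustrate. The sketch below wraps any inference function with `functools.lru_cache`, so an identical prompt is answered from memory instead of triggering a second model call. It is exact-match caching only, which is safe mainly for deterministic generation settings (for example, temperature 0); semantic caching of similar prompts is beyond this sketch.

```python
from functools import lru_cache


def make_cached_model(infer, maxsize: int = 1024):
    """Wrap an inference function so repeated identical prompts skip the model."""
    @lru_cache(maxsize=maxsize)
    def cached(prompt: str) -> str:
        return infer(prompt)  # only reached on a cache miss
    return cached
```

The wrapped function also exposes `cache_info()`, which reports hits and misses and can feed the monitoring described later.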

In deployments such as n8n’s AI Starter Kit, using Kubernetes to orchestrate the containers running the workflow tool and the large language model can provide horizontal scaling based on request demand. Kubernetes can also help with load balancing and request handling.
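Kubernetes' Horizontal Pod Autoscaler decides how many replicas to run with a documented ratio rule: desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that arithmetic so the scaling behavior is easy to reason about. In practice the HPA is configured declaratively, and the metric (for example, queued workflow executions per pod) is a hypothetical choice here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """HPA-style replica calculation, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 2 replicas averaging 90 queued executions against a target of 45 scale to 4; the `max_replicas` clamp is what enforces the on-premises hardware limit mentioned above.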

GPU Requirements for Self-hosting

To calculate how much GPU memory is required to run an LLM on-premises, you can use the following formula:

$$M = \frac{P \times 4B}{32 / Q} \times 1.2$$

Source: https://ksingh7.medium.com/calculate-how-much-gpu-memory-you-need-to-serve-any-llm-67301a844f21

Where:

- M = GPU memory in GB

- P = number of parameters

- 4B = 4 bytes per parameter

- 32 = 32 bits for the 4 bytes

- Q = number of bits used for loading the model

- 1.2 = 20% overhead allocation

As an example, applying this formula to Llama-3.1-70B, which has 70 billion parameters, loaded in 16-bit precision (Q = 16) gives 70 × 4 / (32/16) × 1.2 = 168 GB of GPU memory. By this estimate, the model would not fit within the 160 GB of two NVIDIA A100 80GB GPUs and would need a third.
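The formula translates directly into a small helper. Expressing P in billions of parameters makes the result come out in gigabytes (taking 1 GB as 10^9 bytes); the 20% overhead factor is kept as a parameter so it can be adjusted.

```python
def gpu_memory_gb(params_billion: float, bits: int, overhead: float = 1.2) -> float:
    """M = (P * 4B) / (32 / Q) * 1.2, with P in billions so M comes out in GB."""
    fp32_gb = params_billion * 4  # P * 4 bytes per parameter at 32-bit precision
    return fp32_gb / (32 / bits) * overhead


# A 70B-parameter model at different load precisions:
for bits in (32, 16, 8, 4):
    print(bits, round(gpu_memory_gb(70, bits), 1))
# 32 336.0
# 16 168.0
# 8 84.0
# 4 42.0
```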

In instances where computational power for hosting AI is limited, you can reduce the compute and memory costs using quantization. It is a technique that enables the AI model to operate with less memory storage, consume less energy (in theory), and perform operations like matrix multiplication faster. It does so by representing the weights and activations with low-precision data types like 8-bit integers instead of the usual 32-bit floating point.

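Applying quantization to the above calculation for Llama 70B: the 168 GB figure already assumes 16-bit weights (at float32, Q = 32, it would be 336 GB). Loading the model with 8-bit integers (Q = 8) would halve the requirement to about 84 GB, and 4-bit quantization (Q = 4) would bring it to about 42 GB.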
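To show what "representing the weights with 8-bit integers" means, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. Each weight is mapped to an integer in [-127, 127] with a single float scale, so storage drops from 4 bytes to 1 byte per weight plus one scale value. This is a simplified illustration; production quantization methods typically use finer-grained scales and calibration data to limit accuracy loss.

```python
from __future__ import annotations


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0  # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values and the scale."""
    return [v * scale for v in q]
```

The round-trip error for each weight is at most half the scale, which is why layers with a few very large outliers are harder to quantize well with a single per-tensor scale.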

Authorization and Authentication

As AI-enhanced workflows can have multiple business owners and access various data resources, authorization and role-based access controls can help segregate access, reducing privacy concerns and the attack surface.

User access controls refer to how permissions are granted to and managed for administrators, developers, and non-developers who have access to the workflow designer.

Service access controls refer to how the workflow automation tool accesses other services and databases, such as by providing API keys or JWTs, allowing access only through specific ports, requiring encryption, and the like.
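User access controls are often expressed as a role-based mapping with deny-by-default checks. The roles and permission strings below are hypothetical, chosen to mirror the administrator, developer, and non-developer audiences mentioned above; they are not n8n's actual role model.

```python
from __future__ import annotations

# Hypothetical roles and permissions for this sketch.
ROLE_PERMISSIONS = {
    "admin": {"workflow:read", "workflow:edit", "workflow:execute", "credentials:manage"},
    "developer": {"workflow:read", "workflow:edit", "workflow:execute"},
    "business_user": {"workflow:read", "workflow:execute"},
}


def is_allowed(roles: set[str], permission: str) -> bool:
    """Grant access if any of the user's roles carries the permission (deny by default)."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Keeping `credentials:manage` on a single role means API keys and tokens for downstream services are visible to as few people as possible.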

Monitoring and Error Handling

Workflow automation tools can implement monitoring for each step or action in a workflow, including any AI agents. These can report execution times, errors, and API response codes, and generate logs.

To monitor the performance of AI agents, tools can track performance against:

- Latency - How quickly an LLM can provide a response after receiving an input. Faster response times enhance user satisfaction and engagement.

- Throughput - The number of tasks or queries an LLM can handle within a given time frame, which indicates the model's capacity to serve multiple requests simultaneously. This is important for scalability and performance in production environments.

- Resource Utilization - How efficiently an LLM uses computational resources, such as CPU and GPU memory. Optimal resource utilization ensures that the model runs efficiently, enabling cost-effective scaling and sustainability in deployment.
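Latency and throughput can be measured by timing calls around any inference function, as in the sketch below, which reports median and 95th-percentile latency plus requests per second. It issues requests one at a time, so the throughput figure reflects sequential use; concurrent load testing and GPU/CPU utilization (usually read from tools such as `nvidia-smi`) are outside this sketch.

```python
from __future__ import annotations
import statistics
import time
from typing import Callable


def measure(infer: Callable[[str], str], prompts: list[str]) -> dict:
    """Time each call and summarize latency percentiles and sequential throughput."""
    if not prompts:
        raise ValueError("need at least one prompt")
    latencies = []
    start = time.perf_counter()
    for p in prompts:
        t0 = time.perf_counter()
        infer(p)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20)[-1] if len(latencies) > 1 else latencies[0]
    return {
        "p50_s": statistics.median(latencies),
        "p95_s": p95,
        "throughput_rps": len(prompts) / elapsed if elapsed > 0 else float("inf"),
    }
```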

Based on the reported metrics, organizations can implement multiple layers of validation, including:

- Input preprocessing and sanitization

- Confidence threshold monitoring

- Fallback mechanisms for low-confidence predictions, such as implementing human agents in the loop
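Confidence threshold monitoring and the human-in-the-loop fallback can be combined into a single routing rule. The sketch assumes the AI step produces a label with a confidence between 0 and 1 (for example, from a text classifier's probability output); LLMs do not always expose calibrated confidence, so the source and threshold of that score are assumptions to validate per use case.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # 0.0 to 1.0, e.g. a classifier's probability output


def route(pred: Prediction, threshold: float = 0.8) -> str:
    """Act automatically on confident predictions; send the rest to a human queue."""
    if not 0.0 <= pred.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return f"auto:{pred.label}" if pred.confidence >= threshold else "human_review"
```

Tracking how often predictions fall below the threshold over time is itself a useful monitoring signal, since a rising share can indicate drift in the incoming data.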

n8n for Building AI-based Workflows

Secure and privacy-first AI is a core strategy for n8n, so we have developed a range of features that make use of AI. At n8n, we use AI-enhanced workflows internally for a variety of use cases, which include:

- Battlecard bot

- Our own AI assistant for errors

- Template reviews

- Classifying and assigning bugs

- Classifying forum posts

We are also building a library of community-built AI content, which you can explore here. We encourage members of the community to become creators and submit their own templates.