Ever wished for a chatbot that could answer specific questions about your data or documentation, instead of just hallucinating generic responses? Build one that actually knows your data with Retrieval Augmented Generation (RAG)!

In this blog post, we'll examine how Retrieval Augmented Generation (RAG) allows you to build specialized chatbots that go beyond the limitations of typical chatbot interactions. Instead of relying on generic responses, RAG chatbots utilize external knowledge sources to deliver precise and informative answers to complex queries.

We'll break down the core concepts of RAG and look at some practical RAG workflow examples. Plus, this isn't just theory - we'll demonstrate how to implement RAG using n8n, a workflow automation tool.

By the end of this article, you'll have the knowledge and tools to construct your own RAG chatbots!

What is RAG in a chatbot?

Retrieval Augmented Generation (RAG) in chatbots is a powerful technique that combines the strengths of large language models (LLMs) with external knowledge sources to generate more relevant and, most importantly, more accurate responses.

RAG is particularly useful in scenarios where LLMs need to access and utilize information that was not included in their initial training data, such as when answering questions about specific domains, internal documents, or data that is not publicly available.
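The retrieve-then-generate loop can be sketched in a few lines of Python. This is a minimal illustration, not n8n's implementation. Word-overlap scoring stands in for a real embedding search, and the sample documents are made up. `retrieve` and `build_prompt` are hypothetical helpers that show how retrieved text is placed in front of the question before it reaches the LLM.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set: a crude stand-in for semantic embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Augment the question with retrieved context before sending it to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}"
    )


# Made-up sample documents for illustration.
docs = [
    "Employees may work remotely up to three days per week.",
    "The office cafeteria opens at 8 am.",
    "Remote work requests are approved by your manager.",
]
question = "What is the policy on remote work?"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)
```

In a real RAG chatbot, this prompt would be sent to the LLM. Swapping word overlap for vector similarity changes only the retrieval step.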

AI chatbots can use RAG to access and process information from a variety of sources, including unstructured data like text documents, web pages, and social media posts, as well as structured data like databases and knowledge graphs. This allows them to:

- provide more comprehensive and informative responses;

- personalize the user experience;

- stay up-to-date with the latest information;

- provide responses based on internal documents.

RAG chatbot examples

Now that we’ve explored the fundamentals of RAG, let’s dive into some examples to inspire your creativity.

We’ll demonstrate how to bring these ideas to life with n8n, a powerful workflow automation tool that streamlines the integration of external knowledge sources into RAG workflows. Plus, we’ll highlight why n8n is the ideal platform for building efficient, scalable, and customizable RAG chatbots.

The following scenarios are just a glimpse of what's possible when you combine the retrieval power of semantic search with the generative capabilities of LLMs.

Internal knowledge base chatbot

A workflow that connects to internal company resources, with a focus on documents stored in Google Drive. It automatically updates a Pinecone vector database whenever new documents are added or existing ones are modified in designated Google Drive folders. When a user asks a question, the workflow uses a combination of nodes (including semantic search with Pinecone and an LLM) to retrieve relevant information from the indexed documents and generate a response.

An employee asks the chatbot, "What is the company's policy on remote work?" The chatbot searches the vector store, retrieves the relevant documents, and generates a summary of the policy.
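The automatic update boils down to deciding which documents need to be re-embedded. The Python sketch below is a hypothetical illustration, not how n8n's Google Drive nodes work internally. It fingerprints each document's content with SHA-256 and compares the result with the fingerprint stored at the last sync. `plan_sync`, the file IDs and the contents are all made up.

```python
import hashlib


def fingerprint(content: str) -> str:
    """Content hash used to detect edits (hypothetical sync bookkeeping)."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def plan_sync(current: dict[str, str], indexed: dict[str, str]) -> dict[str, list[str]]:
    """current: file_id -> content now in the watched folder.
    indexed: file_id -> fingerprint recorded when the file was last embedded.
    Returns the files that are new or modified and so need re-embedding."""
    added, modified = [], []
    for file_id, content in current.items():
        if file_id not in indexed:
            added.append(file_id)
        elif indexed[file_id] != fingerprint(content):
            modified.append(file_id)
    return {"added": sorted(added), "modified": sorted(modified)}


indexed = {"remote-policy": fingerprint("Remote work: 2 days/week")}
current = {
    "remote-policy": "Remote work: 3 days/week",  # edited since the last sync
    "travel-policy": "Book travel via the portal",  # new file
}
print(plan_sync(current, indexed))
# {'added': ['travel-policy'], 'modified': ['remote-policy']}
```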

API documentation chatbot

This workflow connects to API documentation, code examples, and developer documentation. It uses a "Function node" to parse API specifications and extract relevant information. When a developer asks a question about the API, the workflow retrieves relevant documentation and code examples. An LLM is used to generate concise explanations, provide usage examples tailored to the developer's programming language, and even generate code snippets for common API calls.

A developer asks the chatbot, "How do I authenticate a user with the OAuth 2.0 protocol in my Node.js application?" The chatbot retrieves the relevant API documentation and code examples, then generates a Node.js code snippet demonstrating the authentication process, along with explanations and security considerations.
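Parsing a specification mostly means walking its `paths` object and turning each operation into a self-contained piece of text that can be embedded. In n8n, that logic would live in a JavaScript node. The Python sketch below shows the same idea under some assumptions: `operations_to_text` is a hypothetical helper, the spec fragment is hand-written for illustration, and `$ref` resolution is ignored.

```python
# Operation keys allowed on an OpenAPI 3.0 Path Item object.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def operations_to_text(spec: dict) -> list[dict]:
    """Turn every path + method pair into one text record for embedding."""
    records = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            text = f"{method.upper()} {path}\n{op.get('summary', '')}\n{op.get('description', '')}".strip()
            records.append({"id": op.get("operationId", f"{method} {path}"), "text": text})
    return records


# Hand-written fragment for illustration, not the full GitHub spec.
spec = {
    "openapi": "3.0.3",
    "paths": {
        "/app-manifests/{code}/conversions": {
            "parameters": [],
            "post": {
                "operationId": "apps/create-from-manifest",
                "summary": "Create a GitHub App from a manifest",
            },
        }
    },
}
for record in operations_to_text(spec):
    print(record["id"], "->", record["text"].splitlines()[0])
```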

Later in this article, we will walk through the steps of creating this API Documentation Chatbot workflow in detail, demonstrating how to connect to a real-world API specification, integrate with a vector database, and build a chat interface using n8n's powerful features!

Financial analyst chatbot

This workflow integrates with financial data providers (e.g., Bloomberg, Refinitiv). It uses "HTTP Request" nodes to fetch real-time market data, historical stock prices, and company financial reports. When a user asks a financial question, the workflow retrieves relevant data and uses an LLM to generate insightful analyses, risk assessments, or investment recommendations.

An analyst asks the chatbot, "What is the current market sentiment towards renewable energy companies, and how does it compare to the previous quarter?" The chatbot analyzes news articles, social media sentiment, and market data to provide a comprehensive answer, potentially including charts and graphs generated with other n8n nodes.

How to build a RAG chatbot with n8n?

Let's turn theory into practice by building a RAG chatbot with n8n's powerful visual workflow automation. We'll be creating an API documentation chatbot for the GitHub API, demonstrating how n8n simplifies the process of connecting to data sources, vector databases, and LLMs. This hands-on example will guide you through each step, from data extraction and indexing to creating a user-friendly chat interface.

Prerequisites

Before we start building, make sure you have the following set up:

- n8n account: You'll need an n8n account to create and run workflows. If you don't have one already, you can sign up for an n8n cloud account or self-host n8n.

- OpenAI account and API key: We'll be using OpenAI's models for generating embeddings and responses. You'll need an OpenAI account and an API key; see the n8n documentation page on setting up OpenAI credentials in n8n.

- Pinecone account and API key: We'll use Pinecone as our vector database to store and retrieve API documentation embeddings. You can create a free account on the Pinecone website and check out the documentation page on how to set up Pinecone credentials in n8n.

- Basic familiarity with vector databases: While not strictly required, understanding the basic concepts of vector databases will be helpful. For a primer, see the n8n documentation on using vector databases in n8n.

Once you have these prerequisites in place, you're ready to start building your RAG chatbot! You can follow along by importing the workflow into your n8n instance.

Step 1: Set up data source and content extraction

This workflow consists of two parts. The first part fetches the data and indexes it into a Pinecone vector database; the second part handles the AI chatbot.

We use the HTTP Request node to fetch the OpenAPI 3.0 specification from GitHub, pointing it at the raw GitHub URL of the specification's JSON file. Leave all other options at their default settings.

This node makes a GET request to the specified URL, which points to the raw JSON representation of the GitHub API specification. The response is the entire OpenAPI specification file. The file will be used in the next step.
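Outside n8n, the HTTP Request node's job here amounts to a plain GET followed by JSON parsing. The Python sketch below mirrors that with the standard library. It also adds a hypothetical sanity check that the document really is OpenAPI 3.x. `fetch_spec` would be called with the raw GitHub URL from this step, which isn't reproduced here. The demo only parses an inline byte string, so it runs offline.

```python
import json
import urllib.request


def parse_spec(raw: bytes) -> dict:
    """Decode the response body and check that it is an OpenAPI 3.x document."""
    spec = json.loads(raw)
    version = str(spec.get("openapi", ""))
    if not version.startswith("3."):
        raise ValueError(f"expected an OpenAPI 3.x document, got version {version!r}")
    return spec


def fetch_spec(url: str, timeout: float = 30.0) -> dict:
    """GET the raw JSON file, roughly what the HTTP Request node does with default options."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_spec(response.read())


spec = parse_spec(b'{"openapi": "3.0.3", "info": {"title": "demo"}, "paths": {}}')
print(spec["openapi"])  # 3.0.3
```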

Step 2: Generate embeddings

In this important step, we transform the text chunks from the API documentation into numerical vector representations known as embeddings. These embeddings capture the semantic meaning of each text chunk, allowing us to perform similarity searches later on. To store them, add a Pinecone Vector Store node after the HTTP Request node and set its Operation Mode to Insert Documents.
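To see why vectors make similarity search possible, here is a toy Python sketch. It is not how OpenAI's embedding models work. `toy_embed` only counts words, so it is a purely lexical stand-in with no real semantic understanding. Cosine similarity, however, is computed the same way on real embeddings. The example sentences are made up.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Word counts: a lexical stand-in for a learned embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity: 1.0 for the same direction, 0.0 for no shared terms."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


query = toy_embed("create a repository")
related = toy_embed("Create a repository for the authenticated user")
unrelated = toy_embed("List gists for a user")
print(round(cosine(query, related), 3), round(cosine(query, unrelated), 3))  # 0.655 0.258
```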

Then we need to connect an Embeddings OpenAI node to the Pinecone Vector Store node.

This node takes the text chunks and generates their corresponding vector embeddings using OpenAI's embedding model. Use your OpenAI credentials and choose text-embedding-3-small as the Model.
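One practical detail worth checking: the Pinecone index must have been created with the same dimension as the embedding model's output, and queries must later be embedded with the same model as the documents. The Python sketch below performs that sanity check with the default output sizes of OpenAI's embedding models hard-coded. The text-embedding-3 models can be asked for shorter vectors, which this sketch ignores. `check_index_matches` is a hypothetical helper.

```python
# Default output dimensions of OpenAI embedding models.
EMBEDDING_DIMENSIONS = {
    "text-embedding-3-small": 1536,
    "text-embedding-3-large": 3072,
    "text-embedding-ada-002": 1536,
}


def check_index_matches(model: str, index_dimension: int) -> None:
    """Raise if vectors from `model` would not fit an index of `index_dimension`."""
    expected = EMBEDDING_DIMENSIONS[model]
    if expected != index_dimension:
        raise ValueError(
            f"{model} produces {expected}-dimensional vectors, "
            f"but the Pinecone index expects {index_dimension}"
        )


check_index_matches("text-embedding-3-small", 1536)  # passes silently
```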

We also need to connect a Default Data Loader node to the Pinecone Vector Store node, and then connect a Recursive Character Text Splitter node to the Default Data Loader. You can leave all their settings at the defaults.
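A recursive character splitter tries coarse separators first (paragraphs, then lines, then words). It falls back to finer ones only for pieces that are still too long. The Python sketch below illustrates that idea under simplifying assumptions: there is no chunk overlap, lengths are measured in characters, and `chunk_size` is arbitrary. It is not n8n's or LangChain's actual implementation, whose defaults may differ.

```python
def recursive_split(text: str, chunk_size: int = 200,
                    separators: tuple = ("\n\n", "\n", " ", "")) -> list[str]:
    """Split text into chunks of at most chunk_size characters, preferring coarse separators."""
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, finer = separators[0], separators[1:]
    if sep == "":
        # Last resort: hard cut by character count.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces into one chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece is too long on its own: retry it with a finer separator.
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks


sample = ("Paragraph one is short.\n\n"
          "Paragraph two is a good deal longer and will need to be split on spaces.")
for chunk in recursive_split(sample, chunk_size=40):
    print(repr(chunk))
```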

Step 3: Save documents and embeddings to the Pinecone vector store

You can now run this part of the workflow. It might take a while to generate all the embeddings and save them to Pinecone, especially if the API specification file is large. After that is done, your Pinecone dashboard should show the newly stored vectors in that index.
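Under the hood, saving to a vector store means writing records that pair an ID with a vector and metadata, typically the original chunk text. Pinecone's upsert overwrites any existing record with the same ID. The in-memory Python class below is a hypothetical sketch of those semantics, not the Pinecone client, and its vectors are made up. If IDs are regenerated on every run, re-running indexing adds duplicates instead of replacing records, so it's worth checking how your setup assigns IDs.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryVectorStore:
    """Hypothetical stand-in for a Pinecone index: id -> (vector, metadata)."""
    records: dict[str, tuple[list[float], dict]] = field(default_factory=dict)

    def upsert(self, items: list[dict]) -> int:
        for item in items:
            # Same ID overwrites the old entry instead of adding a second copy.
            self.records[item["id"]] = (item["values"], item.get("metadata", {}))
        return len(items)


store = InMemoryVectorStore()
store.upsert([{"id": "chunk-0", "values": [0.1, 0.2], "metadata": {"text": "GET /repos/{owner}/{repo}"}}])
store.upsert([{"id": "chunk-0", "values": [0.3, 0.4], "metadata": {"text": "GET /repos/{owner}/{repo}"}}])
print(len(store.records))  # 1
```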

Step 4: Build the core chatbot logic

With our API specification indexed in the vector database, we can now set up the querying and response generation part of the workflow. This involves receiving the user's query, finding relevant documents in the vector store, and crafting a response using an LLM.

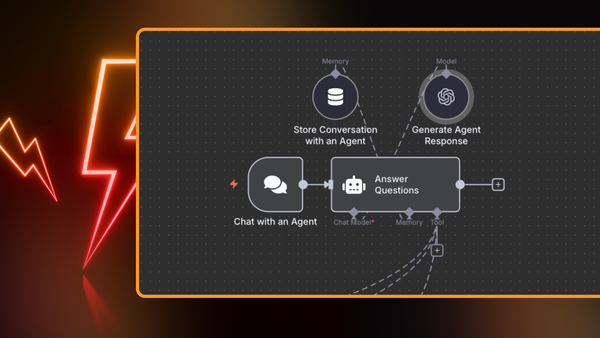

Use the Chat Trigger node to receive user input. This node acts as the entry point for user interaction, triggering the workflow when a new chat message is received. Leave all settings as default for now.

We then connect the Chat Trigger node to an AI Agent node. This node orchestrates the retrieval and generation steps. It receives the user’s question and the relevant documents, and calls upon the LLM to produce an answer.

We can set the Agent type to Tools Agent. We can also set a System Message, which will be combined with the user's input to create a prompt for the LLM. Here's a simple example of a system message prompt:

You are a helpful assistant providing information about the GitHub API and how to use it based on the OpenAPI V3 specifications.
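Conceptually, the system message and the user's input end up as separate, role-tagged messages in the request sent to a chat model. The Python sketch below shows that structure in the role/content format used by OpenAI's chat APIs. It does not reproduce the exact prompt n8n's Tools Agent assembles, which also includes tool definitions. `build_messages` is a hypothetical helper.

```python
from typing import Optional

SYSTEM_MESSAGE = (
    "You are a helpful assistant providing information about the GitHub API "
    "and how to use it based on the OpenAPI V3 specifications."
)


def build_messages(user_input: str, history: Optional[list[dict]] = None) -> list[dict]:
    """System prompt first, then any earlier turns, then the new question."""
    return [
        {"role": "system", "content": SYSTEM_MESSAGE},
        *(history or []),
        {"role": "user", "content": user_input},
    ]


messages = build_messages("How do I create a GitHub App from a manifest?")
print([m["role"] for m in messages])  # ['system', 'user']
```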

Next, connect the AI Agent node to the OpenAI Chat Model node. This node is responsible for taking the user's query, along with the retrieved text chunks, and using an OpenAI LLM to generate a final, comprehensive answer.

Select your OpenAI credentials, and from the Model dropdown, choose the efficient gpt-4o-mini model.

Connect the Window Buffer Memory node to the AI Agent node. This node provides short-term memory for the conversation, enabling the LLM to answer follow-up questions and use previous prompts and answers to improve its responses. You can leave all settings as default here.
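The idea behind a window buffer is simple: keep the most recent exchanges and drop older ones, so the prompt stays bounded in size. Below is a minimal Python sketch, assuming a window of `k` question/answer pairs. `WindowMemory` and its methods are hypothetical names, not n8n's implementation.

```python
from collections import deque


class WindowMemory:
    """Keeps only the last `k` user/assistant exchanges."""

    def __init__(self, k: int = 5):
        self.turns = deque(maxlen=k)  # the oldest exchange is evicted automatically

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_messages(self) -> list[dict]:
        """Flatten the remembered exchanges into chat messages for the next prompt."""
        messages = []
        for user, assistant in self.turns:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": assistant})
        return messages


memory = WindowMemory(k=2)
for i in range(3):
    memory.save(f"question {i}", f"answer {i}")
print(memory.as_messages()[0]["content"])  # question 1
```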

Step 5: Retrieve information using the vector store tool

Now comes the crucial part that transforms this into a RAG chatbot instead of a regular AI chatbot!

Connect a Vector Store Tool node to the AI Agent node. The node uses the embedding of the user’s query to perform a similarity search against embeddings of the indexed API specification chunks.

Give this tool a descriptive name and a description so the LLM understands when to use it. For this example, you can use the following description:

Use this tool to get information about the GitHub API. This database contains OpenAPI v3 specifications.
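The description matters because a tool-calling model never sees a tool's code. It sees only the tool's name, description and input schema, and decides from those whether to call it. As an illustration, here is how such a tool could be declared in the JSON-schema style of OpenAI's function-calling API, written as a Python dict. The tool name and the `query` parameter are assumptions, and the schema n8n generates for the Vector Store Tool may differ.

```python
import json

vector_store_tool = {
    "type": "function",
    "function": {
        "name": "github_api_docs",  # hypothetical tool name
        "description": (
            "Use this tool to get information about the GitHub API. "
            "This database contains OpenAPI v3 specifications."
        ),
        "parameters": {  # JSON Schema for the arguments the model must supply
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "What to look up in the API docs"}
            },
            "required": ["query"],
        },
    },
}
print(json.dumps(vector_store_tool, indent=2))
```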

We can also limit the number of results retrieved from the vector store to the 4 most relevant to the user's query.
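Limiting results to the 4 best matches is a top-k nearest-neighbour query. A vector database like Pinecone uses specialised indexes to answer it at scale. The brute-force Python sketch below only illustrates the ranking logic. It works over made-up three-dimensional vectors, and the document IDs are hypothetical.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 4) -> list[tuple[str, float]]:
    """Score every stored vector against the query and keep the k highest."""
    scored = ((doc_id, cosine(query, vec)) for doc_id, vec in index.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


index = {
    "create-repo": [0.9, 0.1, 0.0],
    "list-gists": [0.0, 0.2, 0.9],
    "get-repo": [0.8, 0.3, 0.1],
    "delete-repo": [0.7, 0.0, 0.2],
    "list-issues": [0.1, 0.9, 0.1],
    "star-repo": [0.6, 0.4, 0.0],
}
results = top_k([1.0, 0.2, 0.0], index, k=4)
print([doc_id for doc_id, _ in results])  # ['create-repo', 'get-repo', 'delete-repo', 'star-repo']
```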

Then, connect a Pinecone Vector Store node to the Vector Store Tool node. This time, set its Operation Mode to Retrieve Documents (For Agent/Chain) and connect an Embeddings OpenAI node that uses the same model as in Step 2, text-embedding-3-small. This setup generates an embedding for the user's query so it can be compared against all the embeddings in your vector store; the comparison is only meaningful if queries and documents are embedded with the same model.

Finally, connect another OpenAI Chat Model node to the Vector Store Tool node. This node will summarize the retrieved chunks from the database, providing context for the final response.

You can use the same gpt-4o-mini model here as well.

Step 6: Test your RAG chatbot

And there you have it!

You've now configured the core components of your RAG-powered GitHub API chatbot.

To test it out, simply click the Chat button located at the bottom of the n8n editor. This will open the chat interface where you can start interacting with your bot. As a test question, try asking: "How do I create a GitHub App from a manifest?". You'll be amazed to see how the chatbot retrieves relevant information from the GitHub API specification and provides you with a detailed, step-by-step solution using the API. This demonstrates the power of RAG and how n8n makes it easy to build sophisticated AI-powered applications.

Wrap up

In this article, we covered Retrieval Augmented Generation (RAG) and how it enables you to build specialized chatbots that can tap into specific knowledge sources, providing more accurate and relevant answers than traditional chatbots.

You've seen how n8n’s intuitive, visual interface and pre-built integrations with various LLMs and databases make it an ideal platform for building and deploying RAG workflows. You can easily connect all the necessary components, experiment with different configurations, and scale your chatbot as your needs grow.

What’s next?

Okay, so you understand RAG and you've seen how n8n can put it all together. Now it's time to get practical. Here's what you should focus on next:

- Once you have the basic chatbot working, start experimenting. Try different embedding models, refine your prompts, or test different retrieval strategies to improve performance.

- n8n isn't tied to a single LLM. You can use nodes for OpenAI, Ollama, or integrate with LangChain. Each LLM has its strengths and weaknesses, so test them out to find the best fit. Check out this article to learn more about integrating local Ollama models into your workflows.

- Check out YouTube tutorials from the community to deepen your understanding and see n8n in action.

- Get inspired by other AI workflows created by the n8n community.