Your customer service chatbot handles basic inquiries. Your data analysis agent processes reports. Your scheduling system books meetings.

But oftentimes, your agent needs to do all three things at once.

The traditional approach, cramming in more tools and writing ever more complex system prompts, isn’t ideal. As token usage grows, you need the most capable (and most expensive) models to handle the complexity, and there’s a practical limit to how many tasks a single agent can manage effectively.

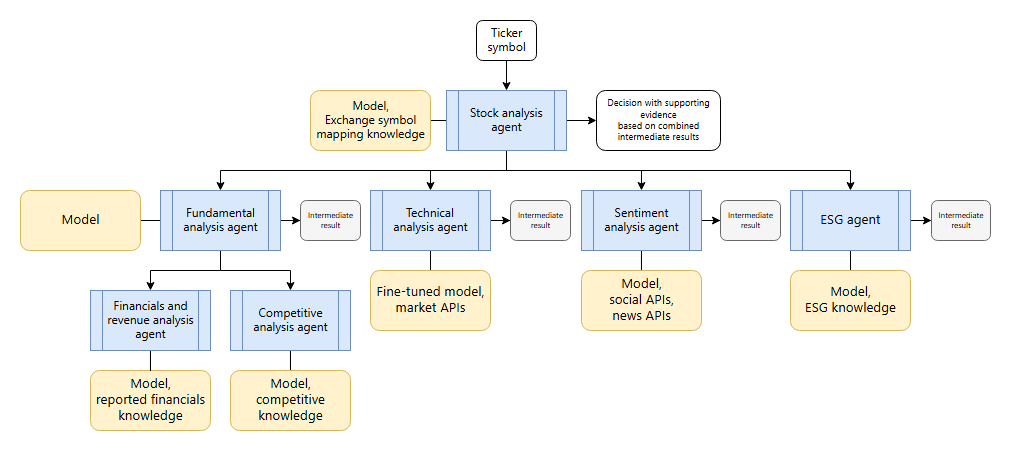

An alternative approach is to split large agents into smaller, specialized ones. Instead of one overloaded agent, you coordinate multiple focused agents, each an expert in its own domain. They work together, sharing context and handing off tasks as needed.

This coordination requires proper tooling. AI agent orchestration frameworks manage communication between agents, maintain shared state across workflows, and handle task delegation between specialized components.

In this guide, we examine the essential components that AI agent orchestration frameworks should provide, then review 11 frameworks that approach multi-agent coordination differently - from visual workflow builders to enterprise-grade managed platforms.

- What are AI agent orchestration frameworks?

- 11 AI agent orchestration frameworks of 2025

- Benefits of using AI agent orchestration frameworks

- Wrap up

- What's next?

What are AI agent orchestration frameworks?

AI agent orchestration frameworks coordinate multiple specialized agents to accomplish complex workflows that single agents struggle with.

The key difference from traditional AI tools lies in coordination complexity. Instead of managing one conversation with one model, you're managing state across multiple agents, handling handoffs between specialized systems, and ensuring context doesn't get lost as tasks flow between agents.

Essential AI agent orchestration components

Effective frameworks provide five core capabilities:

- State management: Persistent memory that survives across agent interactions. When your data analysis agent finishes processing and hands off to your scheduling agent, the context needs to transfer seamlessly.

- Communication protocols: Standardized ways for agents to talk to each other, whether through structured handoffs, shared chat threads, or event-driven messages.

- Orchestration patterns: Different coordination approaches, such as sequential pipelines for predictable workflows, parallel execution for speed, or hierarchical structures where supervisor agents manage worker teams.

- Tool integration: Connecting agents to external systems, APIs, and data sources while managing permissions and error handling across the chain.

- Error recovery: When one agent fails or produces unexpected results, the framework needs mechanisms to retry, route to alternative agents, or gracefully degrade the workflow.

These components determine whether your multi-agent system works reliably in production or becomes a complex debugging nightmare.
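The following minimal, framework-agnostic sketch in plain Python makes these components more concrete. It does not use the API of any framework covered below. `WorkflowState`, `run_with_recovery`, `run_pipeline`, and the agent functions are hypothetical stand-ins, and each "agent" is an ordinary function rather than an LLM call. The sketch shows shared state passed between agents, a sequential handoff, and error recovery through retries plus a fallback agent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class WorkflowState:
    """Shared state handed from agent to agent (hypothetical structure)."""
    data: Dict[str, object] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # agents that ran, in order


# In this sketch an "agent" is any function that takes and returns the shared state.
Agent = Callable[[WorkflowState], WorkflowState]


def run_with_recovery(agent: Agent, state: WorkflowState, retries: int = 2,
                      fallback: Optional[Agent] = None) -> WorkflowState:
    """Retry a failing agent, then route to a fallback agent if one is given."""
    last_error: Optional[Exception] = None
    for _ in range(retries + 1):
        try:
            return agent(state)
        except Exception as exc:  # a production system would catch narrower errors
            last_error = exc
    if fallback is not None:
        return fallback(state)
    raise RuntimeError(f"{agent.__name__} failed after {retries + 1} attempts") from last_error


def run_pipeline(steps: List[Tuple[Agent, Optional[Agent]]], state: WorkflowState) -> WorkflowState:
    """Sequential handoff: each agent receives the state the previous one produced."""
    for agent, fallback in steps:
        state = run_with_recovery(agent, state, fallback=fallback)
    return state


def analysis_agent(state: WorkflowState) -> WorkflowState:
    state.data["summary"] = f"{len(state.data['reports'])} reports processed"
    state.history.append("analysis")
    return state


def scheduling_agent(state: WorkflowState) -> WorkflowState:
    raise TimeoutError("calendar API unavailable")  # simulate a failing dependency


def fallback_scheduler(state: WorkflowState) -> WorkflowState:
    state.data["meeting"] = "queued for manual booking"
    state.history.append("fallback_scheduler")
    return state


final = run_pipeline(
    [(analysis_agent, None), (scheduling_agent, fallback_scheduler)],
    WorkflowState(data={"reports": ["q1.csv", "q2.csv"]}),
)
print(final.history)  # ['analysis', 'fallback_scheduler']
```

Real frameworks typically layer persistence, tracing, and LLM-driven routing on top of a loop like this. The basic shape (state in, state out, with a recovery policy for each step) is a useful mental model when comparing them.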

11 AI agent orchestration frameworks of 2025

We've organized our analysis into three categories that reflect how the market has evolved:

- Visual and low-code tools (n8n, Flowise, Zapier Agents) come first – these platforms let business teams and developers build agent coordination through graphical interfaces while maintaining technical flexibility.

- Code-first SDKs (LangGraph, CrewAI, OpenAI Agents SDK, Google ADK, MS Semantic Kernel Agent Framework) follow next. These frameworks give technical teams precise control over agent behavior, state management, and communication patterns.

- Enterprise infrastructure platforms (Amazon Bedrock Agents, Vertex AI Agent Builder and Azure AI Agent Service) conclude our analysis. Here we see an interesting trend: major cloud providers now offer both open-source SDKs for development AND managed infrastructure services for deployment. We've separated Google's and Microsoft's SDK offerings from their infrastructure services to illustrate this dual-approach strategy.

| Framework | Type | Key Features | Prog. Language |

Open source? |

|---|---|---|---|---|

| n8n | Low-code tool |

1000+ integrations, Agent-to-Agent workflows, MCP support, custom JavaScript |

JavaScript TypeScript |

Fair-code |

| Flowise | Low-code tool |

Based on LangChain, visual multi-agent builder, RAG capabilities |

JavaScript | ✅ |

| Zapier Agents |

No-code tool |

8000+ app integrations, Agent web browsing tool, business automation focus |

N/A | ❌ |

| LangGraph | SDK | Graph-based state management, LangChain ecosystem, human-in-the-loop |

Python | ✅ |

| CrewAI | SDK | Role-based teams, built from scratch (no LangChain), autonomous collaboration |

Python | ✅ |

| OpenAI AgentKit |

Low-code SDK |

Visual Agent Builder, ChatKit managed hosting, Agents SDK export, OpenAI-only models |

Python TypeScript |

❌ |

| Amazon Bedrock Agents |

Infra | Fully managed, multi-model support (Nova, Claude), AWS ecosystem |

N/A | ❌ |

| Google Agent Dev. Kit |

SDK | Vertex AI integration, A2A protocol, Gemini models, enterprise deployment |

Python | ✅ |

| Vertex AI Agent Builder |

Infra | Google Cloud native, compatible with OSS frameworks (LangChain, AG2 or Crew.ai) |

N/A | ❌ |

| Microsoft Semantic Kernel |

SDK | Multi-language, skill-based architecture (functions & plugins), Azure integration |

C# Python Java |

✅ |

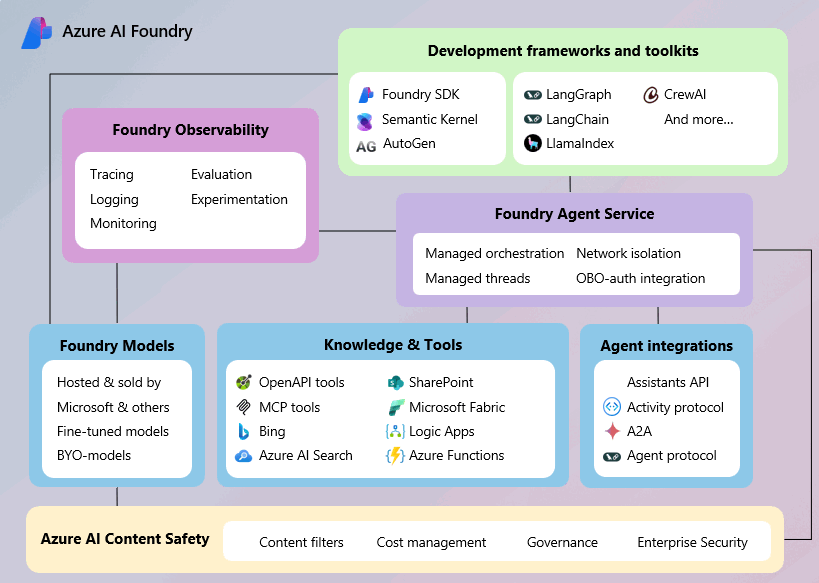

| Azure AI Agent Service |

Infra | Fully managed, MS 365 integration, enterprise security & compliance |

N/A | ❌ |

n8n

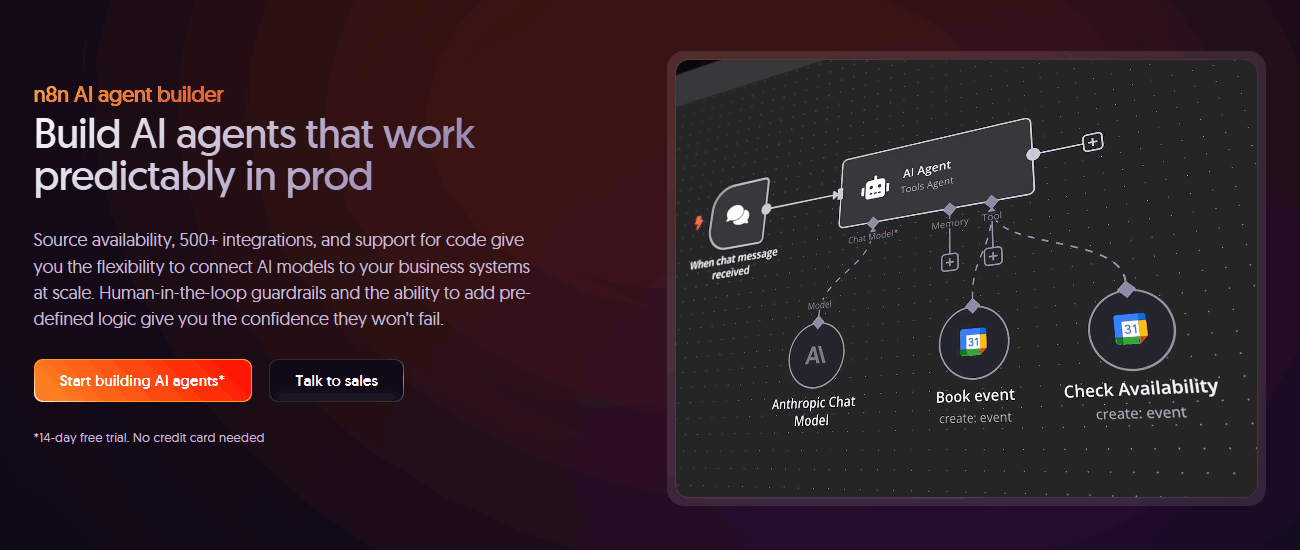

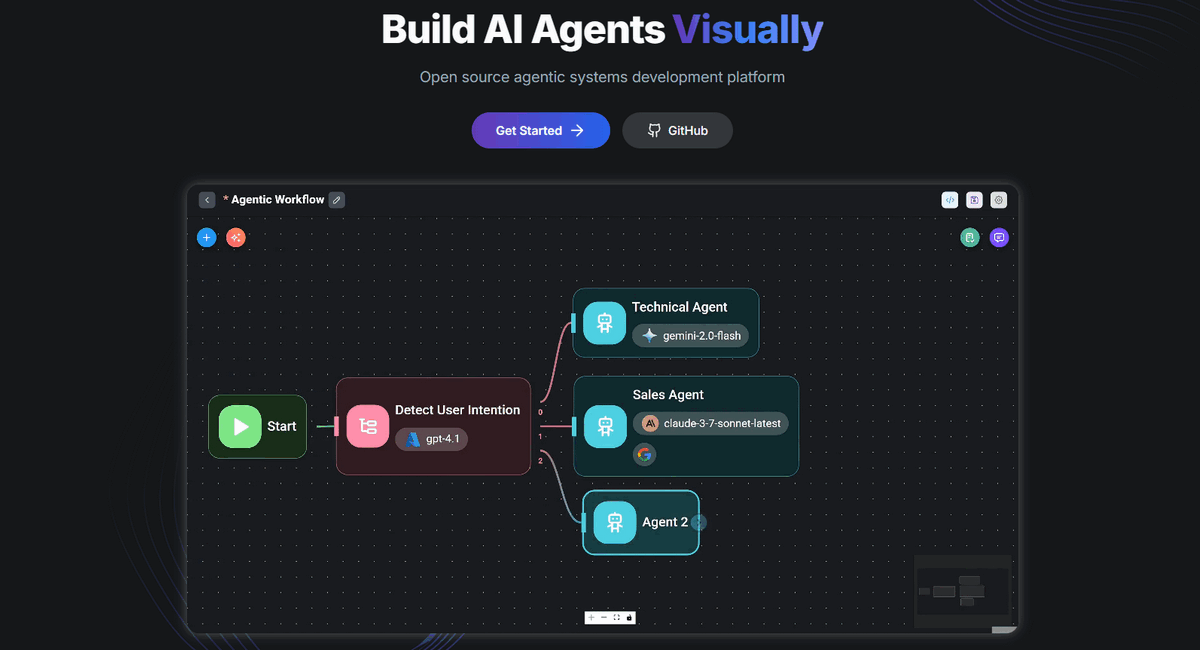

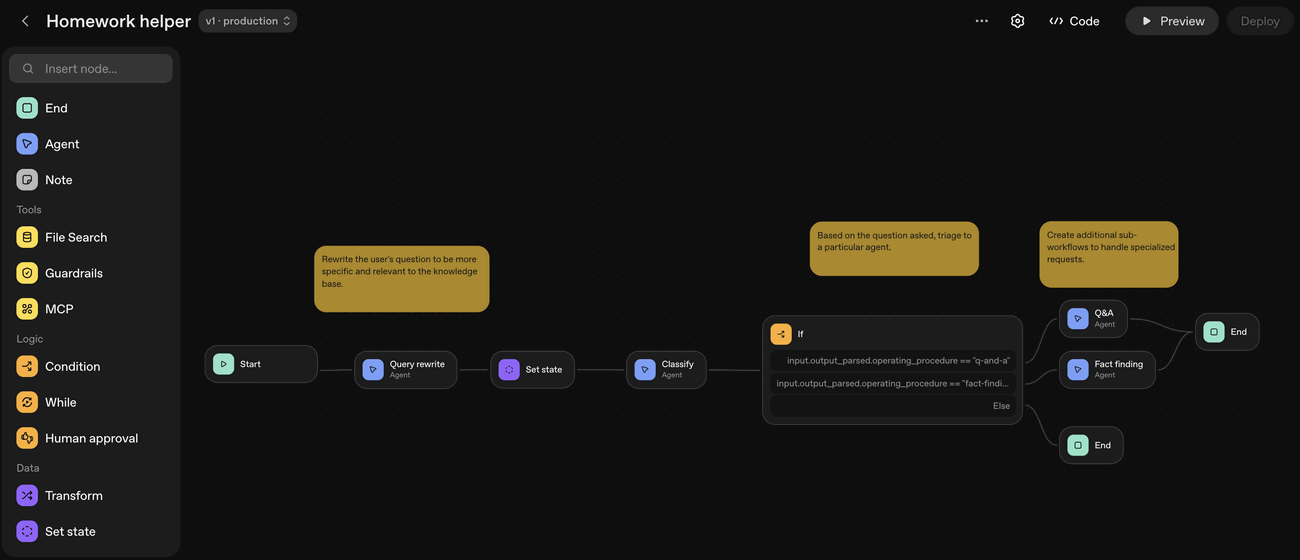

When to use: choose n8n for its unique mix of low-code visual building, extensive integrations, and developer flexibility for custom code.

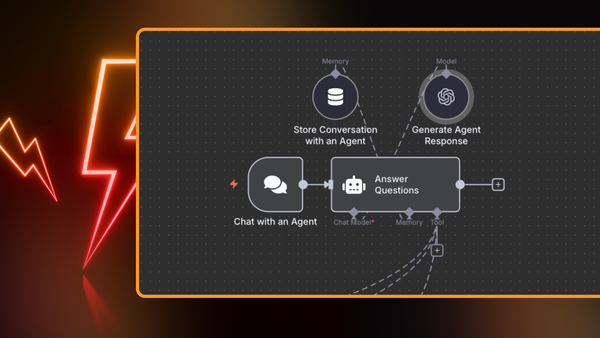

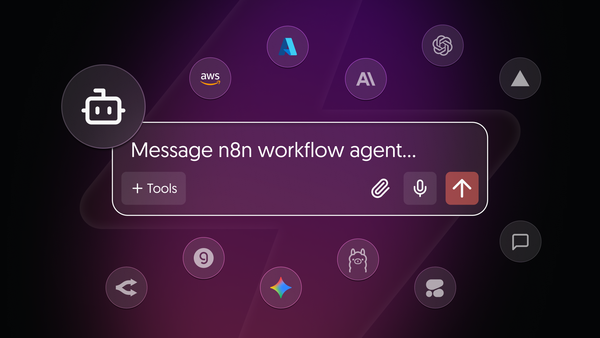

n8n is a source-available AI workflow automation platform that combines agentic capabilities with traditional business process automation. It offers a unique hybrid approach where users can build sophisticated AI workflows visually while maintaining the ability to add custom JavaScript code when needed. The platform serves as a bridge between no-code simplicity and developer-grade flexibility.

- Visual AI agent builder: drag-and-drop interface for building multi-agent systems with native AI nodes including tools, memory, structured outputs and more.

- Agent-to-Agent workflows: connect agents sequentially or in parallel, or use the Agent Tool node for hierarchical task delegation. Build agents from scratch or drop in LangChain nodes.

- Extensive integrations: 1000+ pre-built integrations with business tools, APIs, and services, plus approved partner nodes and thousands of community-built integrations.

- Custom code support: JavaScript integration through a general Code node and a specialized LangChain Code node for advanced customization.

- Memory management: multiple memory options, including MongoDB, Redis, and PostgreSQL chat memory, as well as Simple Buffer Memory. Flexible context management with the Memory Manager node and dynamic session keys.

- Debugging features: use Evals to thoroughly test changes to agents, add human-in-the-loop nodes for manual feedback at critical checkpoints, and connect n8n to LangSmith or Langfuse to inspect detailed traces.

- Model flexibility: support for OpenAI, Anthropic, Azure, DeepSeek, Mistral, OpenRouter, and local models via Ollama.

- MCP Integration: native support for Model Context Protocol as both client and server.

- Self-Hosting options: free community edition with unlimited executions or Business / Enterprise on-prem editions with extended features.
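The idea behind windowed chat memory with dynamic session keys fits in a few lines of code. The sketch below is a hypothetical Python illustration of the concept, not n8n's implementation, since n8n configures memory through nodes rather than code. Messages are stored per session key, and only the most recent `window` messages are kept. The key is derived from the incoming event, so each user or channel gets its own context. `WindowMemory`, `session_key_for`, and the event fields are invented for illustration.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

Message = Tuple[str, str]  # (role, text)


class WindowMemory:
    """Keeps the last `window` messages for each session key (hypothetical sketch)."""

    def __init__(self, window: int = 4) -> None:
        self.window = window
        self._sessions: Dict[str, Deque[Message]] = defaultdict(
            lambda: deque(maxlen=self.window)  # deque drops the oldest item when full
        )

    def add(self, session_key: str, role: str, text: str) -> None:
        self._sessions[session_key].append((role, text))

    def context(self, session_key: str) -> List[Message]:
        # .get() avoids creating an empty session for unknown keys
        return list(self._sessions.get(session_key, ()))


def session_key_for(event: Dict[str, str]) -> str:
    """Derive the session key from the incoming event (hypothetical field names)."""
    return f"{event['channel']}:{event['user_id']}"


memory = WindowMemory(window=4)
alice = session_key_for({"channel": "slack", "user_id": "U42"})
bob = session_key_for({"channel": "email", "user_id": "bob@example.com"})

for i in range(5):
    memory.add(alice, "user", f"message {i}")
memory.add(bob, "user", "hello")

print(memory.context(alice)[0])  # ('user', 'message 1'): the oldest message was dropped
print(len(memory.context(bob)))  # 1
```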

Pricing

- Cloud tiers starting from €20/month ($24/month) - 2,500 workflow executions

- Business and Enterprise: extended team collaboration features, advanced security, SSO, audit logs

- Free Community edition

Flowise

When to use: opt for Flowise to rapidly prototype and deploy AI agents via its drag-and-drop interface built on top of LangChain.

Flowise is an open-source, low-code platform that provides visual building blocks for creating AI agents, multi-agent systems, and simple LLM workflows. Built on top of LangChain and LlamaIndex, it offers three main builders: Assistant (beginner-friendly), Chatflow (single-agent systems), and Agentflow (multi-agent orchestration).

- Three building approaches: Assistant for beginners, Chatflow for single agents, and Agentflow for complex multi-agent systems.

- Multi-agent systems: with Agentflow V2, users can connect agents in multiple ways.

- RAG capabilities: built-in support for document processing, vector databases, and knowledge retrieval.

- LLM support: integration with OpenAI, Claude, Cohere, and other providers

- API & SDK access: REST APIs, JavaScript / Python SDKs, and embedded chat widgets.

- Deployment flexibility: self-hosted options and cloud deployment with enterprise features.

- Evaluation system: built-in datasets and evaluators.

- MCP integration: Model Context Protocol client / server nodes.
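The sketch below illustrates the REST access mentioned above. It builds a request against Flowise's prediction endpoint (`POST /api/v1/prediction/<flow id>` with a JSON `question` field). It assumes a local instance on the default port 3000. The flow ID and API key are placeholders to copy from your own instance, and the `Authorization: Bearer` header is only needed if the flow is protected by an API key. The code uses only the Python standard library and sends nothing until `ask_flow` is called.

```python
import json
import urllib.request
from typing import Optional


def build_prediction_request(base_url: str, flow_id: str, question: str,
                             api_key: Optional[str] = None) -> urllib.request.Request:
    """Prepare a POST to a Flowise flow's prediction endpoint without sending it."""
    url = f"{base_url.rstrip('/')}/api/v1/prediction/{flow_id}"
    headers = {"Content-Type": "application/json"}
    if api_key:  # only needed when the flow is protected by an API key
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def ask_flow(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder values: replace with your own flow ID and key.
req = build_prediction_request("http://localhost:3000", "YOUR_FLOW_ID",
                               "Summarize today's support tickets", api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)
# POST http://localhost:3000/api/v1/prediction/YOUR_FLOW_ID
# answer = ask_flow(req)  # uncomment once a Flowise instance is running
```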

Pricing

- Cloud tiers starting from $35/month - unlimited flows, 10,000 predictions/month, 1GB storage

- Enterprise: contact for pricing - on-premise deployment, SSO, SAML, audit logs

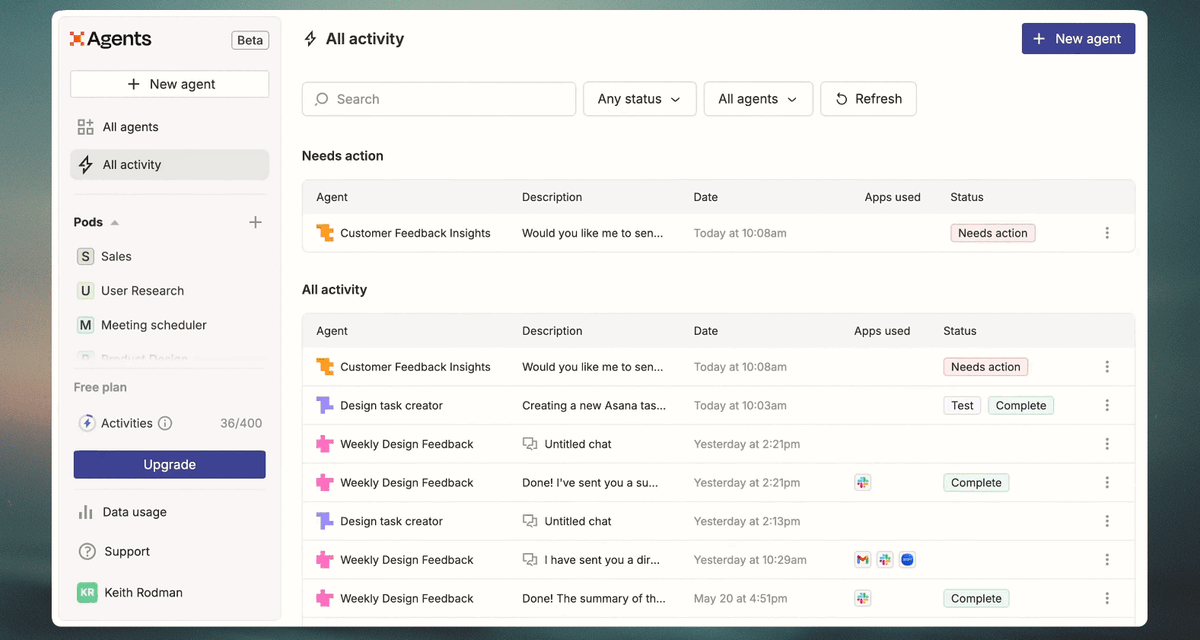

Zapier Agents

When to use: use Zapier Agents to add simple, AI-powered decision-making to your existing business automations across its 8,000+ app ecosystem.

Zapier Agents extend Zapier's automation platform by adding AI-powered decision-making capabilities to traditional workflow automations. Agents can browse the web, analyze data, and interact with the vast Zapier ecosystem to automate complex business processes.

- AI-powered automation: creating agents in Zapier is straightforward but comes with significant limitations. Agents are created in a separate UI. Unlike other visual builders, Zapier lets you configure agents mostly through prompting and a few drop-downs, such as a limited selection of tools or a simple knowledge base.

- Built-in tools: agents have access only to a few selected tools, such as Web Browsing or attached documents for the agent's knowledge base.

- 8000+ app integrations: explore Zapier's extensive integration ecosystem.

- Chrome extension: direct interaction with agents through a browser extension

- Team collaboration: shared agent pools for Team accounts.

Pricing

- Agents are priced separately from the main Zapier platform: $50/month for 1,500 activities/month, up to 40 activities per run.

- Free limited tier: 400 activities/month, up to 10 activities per run

- Advanced: custom pricing with a custom number of activities per month

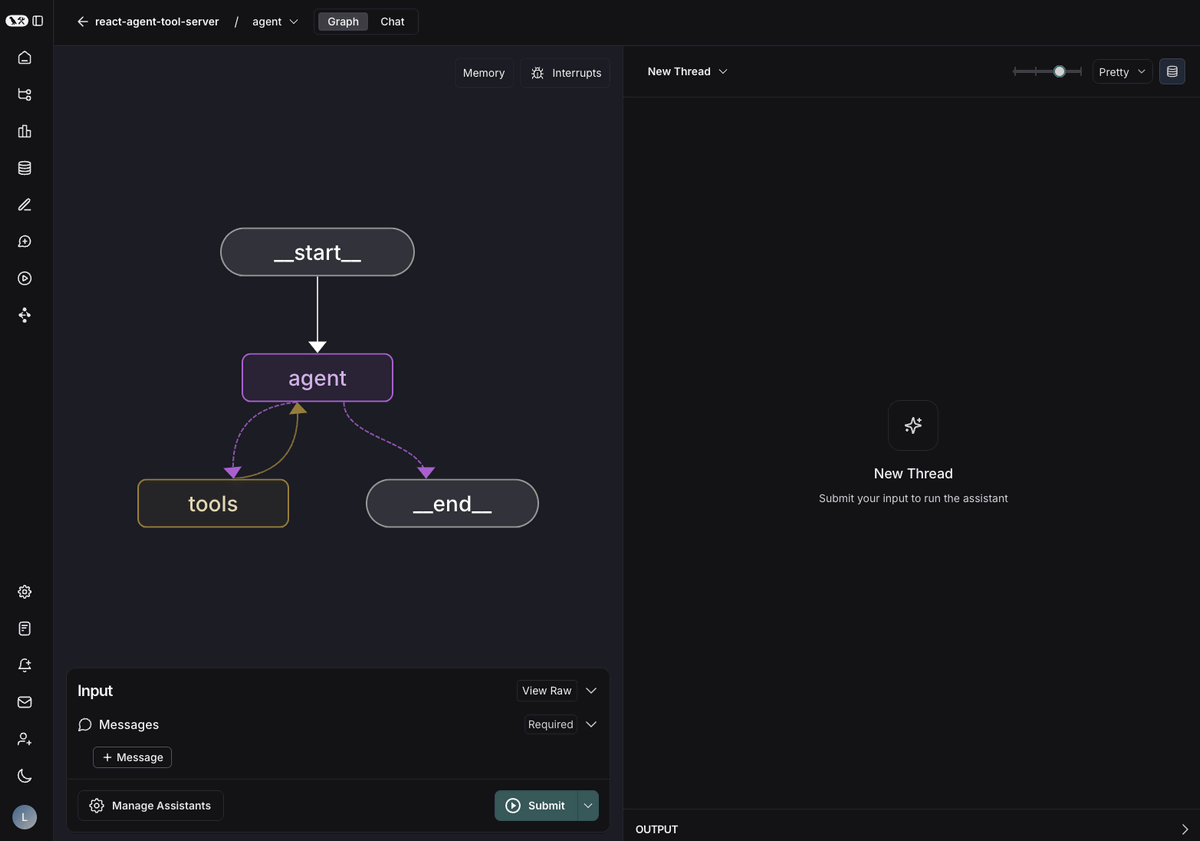

LangGraph

When to use: select LangGraph if you are a developer who needs precise, stateful control over complex agent workflows through its graph-based architecture.

LangGraph is a low-level library that extends LangChain for building stateful, graph-based agent workflows. It provides fine-grained control over agent behavior through state machines and directed graphs. This makes it suitable for complex production applications that require explicit workflow control. Unlike n8n and Flowise, which offer a convenient GUI over LangChain modules, LangGraph requires deep programming knowledge and familiarity with LangChain concepts.

- Graph-based architecture: model agent workflows as directed graphs with nodes (Python functions) and edges (decision logic).

- State management: central persistence layer with checkpointing and memory across conversations.

- Human-in-the-loop: built-in interruption and resumption capabilities for human intervention.

- LangGraph Studio: specialized IDE for visualization, debugging, and real-time agent interaction.

- Streaming support: real-time streaming of outputs and intermediate steps.

- Multi-agent orchestration: hierarchical, collaborative, and handoff patterns for agent coordination.

- Production features: background runs, burst handling, interrupt management.

- LangChain integration: deep integration with other components of the LangChain ecosystem, such as LangSmith for tracing, evaluation, and monitoring.
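A toy version makes the graph model easier to grasp. The sketch below is a deliberately tiny, hypothetical re-implementation of the idea in plain Python, not LangGraph's API. Nodes are functions that return updated state. Each node has a router function that plays the role of a conditional edge. A snapshot recorded after every step stands in for checkpointing. The `draft`/`review` loop is an invented example.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]
END = "__end__"  # sentinel meaning "stop here"


class ToyGraph:
    """Directed graph of state-transforming nodes with conditional edges (illustration only)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], str]] = {}
        self.checkpoints: List[Tuple[str, State]] = []  # (node name, state snapshot)

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_router(self, source: str, router: Callable[[State], str]) -> None:
        self.routers[source] = router  # decides which node runs after `source`

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current = start
        for _ in range(max_steps):
            state = self.nodes[current](dict(state))  # each node works on a copy
            self.checkpoints.append((current, dict(state)))
            current = self.routers[current](state)
            if current == END:
                return state
        raise RuntimeError("max_steps exceeded: the graph may contain an unbounded cycle")


def draft(state: State) -> State:
    state["attempts"] = int(state.get("attempts", 0)) + 1
    state["draft"] = f"draft v{state['attempts']}"
    return state


def review(state: State) -> State:
    state["approved"] = int(state["attempts"]) >= 2  # pretend the second draft passes
    return state


graph = ToyGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_router("draft", lambda s: "review")
graph.add_router("review", lambda s: END if s["approved"] else "draft")

result = graph.run("draft", {})
print(result["draft"])                          # draft v2
print([name for name, _ in graph.checkpoints])  # ['draft', 'review', 'draft', 'review']
```

In LangGraph itself, you would declare nodes and conditional edges on a `StateGraph` and compile it, with persistence handled by a checkpointer rather than an in-memory list.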

Pricing

- Plus plan: starts from $39 / month and offers both LangSmith and LangGraph Platform cloud deployment

- Enterprise plan: custom pricing - all deployment options, dedicated support

- Free Self-Hosted Lite deployment option with limits

- Usage-based pricing beyond the paid tier quotas

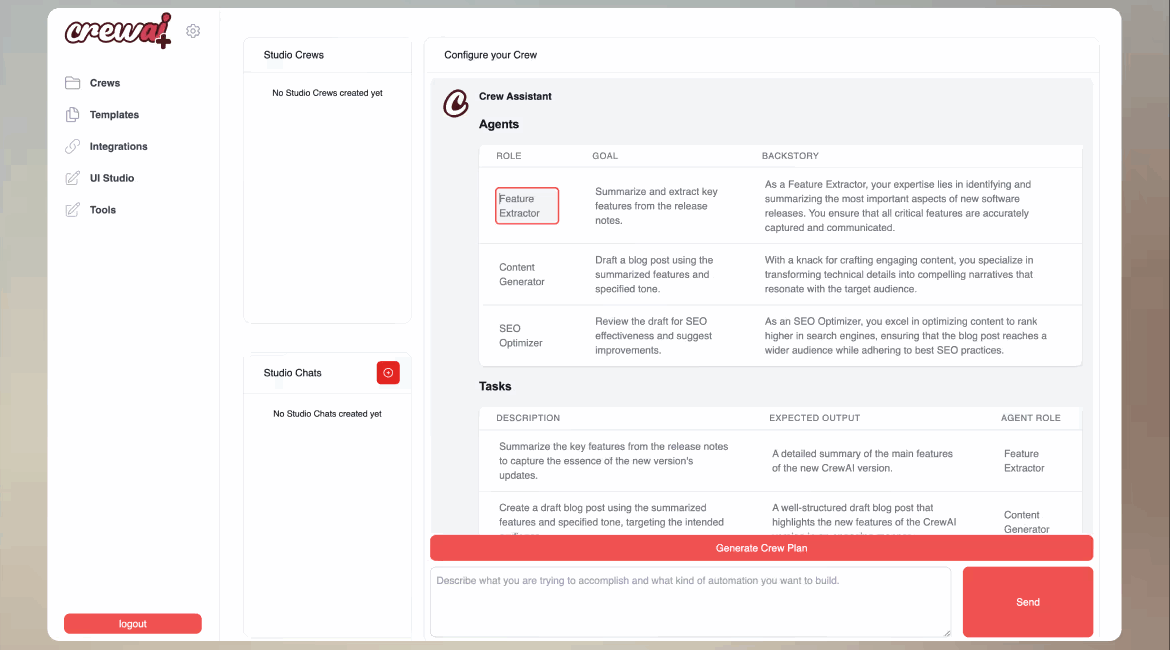

CrewAI

When to use: CrewAI is best for creating collaborative, role-based teams of specialized agents to autonomously work on structured tasks like research or content creation.

CrewAI is a lightweight Python framework built from scratch (independent of LangChain) for creating role-based AI agent teams. It enables developers to build "crews" of specialized agents that collaborate autonomously on complex tasks.

- Role-based architecture: define agents with specific roles, goals, backstories, and expertise areas

- Dual framework approach: CrewAI Crews for autonomous collaboration, CrewAI Flows for event-driven control

- Task management: sequential and parallel task execution with clear objectives

- Built-in tools: flexible tool integration and custom API connections

- Process control: workflow management system defining collaboration patterns

- Enterprise features: templates, access controls, and deployment tools available
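The role-based idea can be illustrated without any framework. In the hypothetical sketch below, each agent's role, goal, and backstory become a system prompt. Tasks run sequentially, and each task's output is passed to the next task as context. `fake_llm` is a stand-in for a real model call. CrewAI's own `Agent`, `Task`, and `Crew` classes work at a higher level, so this code illustrates the pattern rather than CrewAI's API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RoleAgent:
    role: str
    goal: str
    backstory: str

    def system_prompt(self) -> str:
        return f"You are a {self.role}. Goal: {self.goal}. Background: {self.backstory}"


@dataclass
class Task:
    description: str
    agent: RoleAgent


LLM = Callable[[str, str], str]  # (system prompt, user prompt) -> completion


def run_sequential(tasks: List[Task], llm: LLM) -> List[str]:
    """Run tasks in order, feeding each output to the next task as context."""
    outputs: List[str] = []
    context = ""
    for task in tasks:
        prompt = task.description
        if context:
            prompt += f"\n\nContext from the previous task:\n{context}"
        context = llm(task.agent.system_prompt(), prompt)
        outputs.append(context)
    return outputs


calls: List[str] = []  # records prompts so the handoff can be inspected


def fake_llm(system: str, prompt: str) -> str:
    """Stand-in for a model call: echoes the role and the first line of the prompt."""
    calls.append(prompt)
    role = system.split(".")[0].replace("You are a ", "", 1)
    return f"[{role}] {prompt.splitlines()[0]}"


researcher = RoleAgent("Researcher", "collect accurate facts", "former analyst")
writer = RoleAgent("Writer", "produce a short, clear summary", "technical blogger")

results = run_sequential(
    [Task("List three facts about agent orchestration", researcher),
     Task("Write a 100-word summary", writer)],
    fake_llm,
)
print(results)  # ['[Researcher] List three facts about agent orchestration', '[Writer] Write a 100-word summary']
```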

Pricing

- Paid plans starting from $99/month (plans are visible only after account creation)

- Limited free plan with 50 executions/month and 1 live deployed crew

- Enterprise: custom pricing with advanced features

OpenAI AgentKit

When to use: choose AgentKit for visual workflow building within the OpenAI ecosystem, if your team wants a drag-and-drop builder with managed hosting or Agents SDK code export.

OpenAI AgentKit is a platform for developing, deploying, and optimizing agent workflows. It combines a visual Agent Builder, managed deployment options (ChatKit), and code export (Agents SDK). While it offers a polished experience, it creates significant vendor dependency compared to open alternatives.

- Visual Agent Builder: drag-and-drop canvas for agentic workflows with basic templates, live preview, and versioning

- Core primitives: agents, guardrails, and sessions with automatic tool calling and conversation management

- Connector registry: a centralized admin panel that includes pre-built connectors (such as Dropbox, Google Drive, SharePoint, and Microsoft Teams) as well as third-party MCP servers

- Deployment options: ChatKit managed hosting, embeddable chat widgets, or exported SDK code for self-hosting

- Built-in evaluation: datasets, trace grading, automated prompt optimization, and performance measurement

- OpenAI-native integration: deep coupling with GPT models, embeddings, and OpenAI’s evaluation tools, but no support for Anthropic Claude, local models via Ollama, or other providers
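A guardrail is a check that runs before (or after) an agent and can halt the run. The framework-agnostic Python sketch below shows an input guardrail with a "tripwire". `no_secrets`, `run_guarded`, `GuardrailTripped`, and the banned-phrase list are hypothetical examples, not part of OpenAI's SDK. A real guardrail would more likely call a classifier model than match strings.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class GuardrailResult:
    tripwire_triggered: bool
    reason: str = ""


class GuardrailTripped(Exception):
    """Raised when a guardrail blocks the input before the agent runs."""


Guardrail = Callable[[str], GuardrailResult]


def no_secrets(text: str) -> GuardrailResult:
    """Block inputs that appear to contain credentials (naive string match)."""
    banned = ("password", "api key", "secret token")
    hit = next((phrase for phrase in banned if phrase in text.lower()), None)
    return GuardrailResult(hit is not None, f"input mentions {hit!r}" if hit else "")


def run_guarded(agent: Callable[[str], str], user_input: str,
                guardrails: List[Guardrail]) -> str:
    """Run every guardrail first; only call the agent if none trips."""
    for check in guardrails:
        result = check(user_input)
        if result.tripwire_triggered:
            raise GuardrailTripped(result.reason)
    return agent(user_input)


def echo_agent(text: str) -> str:
    return f"Agent reply to: {text}"


print(run_guarded(echo_agent, "Summarize this week's tickets", [no_secrets]))
try:
    run_guarded(echo_agent, "My password is hunter2", [no_secrets])
except GuardrailTripped as err:
    print("Blocked:", err)  # Blocked: input mentions 'password'
```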

Pricing

- Platform access: free when creating agents (either visually with Agent Builder or via Agents SDK)

- Usage costs: based on underlying OpenAI API consumption (GPT models, embeddings, function calls)

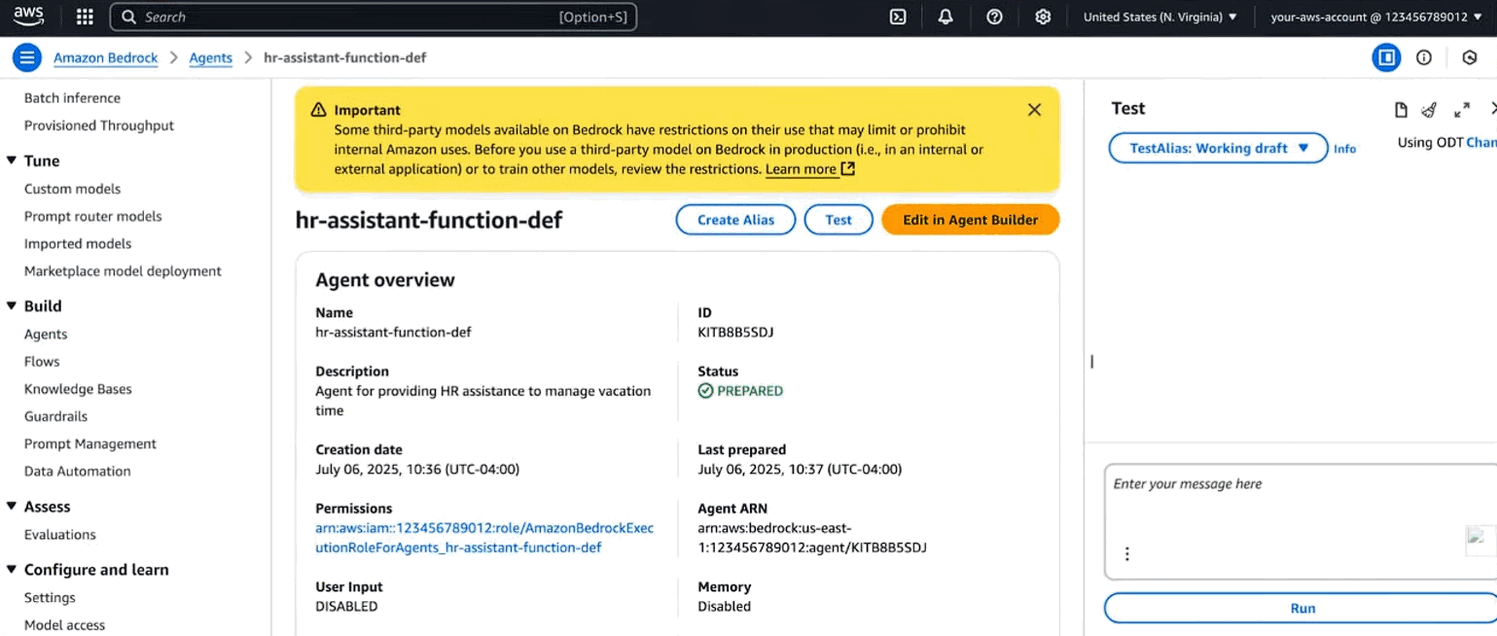

Amazon Bedrock Agents

When to use: Amazon Bedrock Agents is the choice for fully managed, enterprise-scale agent deployment within the AWS ecosystem, offering automatic scaling and security.

Amazon Bedrock Agents is a fully managed service for building and deploying autonomous agents that integrate with AWS services and external APIs. It provides orchestration designed for large-scale operations, with automatic prompt engineering, memory management, and security.

- Multi-agent collaboration: supervisor-based architecture with specialized agent coordination.

- Fully managed: no infrastructure management, automatic scaling, built-in security.

- Foundation model choice: support for multiple models, including Amazon Nova and gpt-oss.

- Action groups: OpenAPI schema integration for external system connectivity.

- Knowledge base integration: built-in RAG capabilities with vector database support.

- Advanced prompts: customizable prompt templates for each orchestration step.

- CloudFormation support: infrastructure-as-code deployment and team templates.

- Observability: built-in monitoring, tracing, and debugging console
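The supervisor pattern behind multi-agent collaboration works like a router: it inspects a request and delegates it to a specialist. In this hypothetical Python sketch, keyword matching stands in for the model-driven routing decision a supervisor agent makes. The three collaborator functions are placeholders, and no AWS API is used.

```python
from typing import Callable, Dict

Collaborator = Callable[[str], str]


def billing_agent(request: str) -> str:
    return f"billing: opened a refund case for '{request}'"


def scheduling_agent(request: str) -> str:
    return f"scheduling: proposed meeting slots for '{request}'"


def general_agent(request: str) -> str:
    return f"general: answered '{request}'"


# Hypothetical routing table; a real supervisor would ask a model to classify the request.
ROUTES: Dict[str, Collaborator] = {
    "refund": billing_agent,
    "invoice": billing_agent,
    "meeting": scheduling_agent,
    "schedule": scheduling_agent,
}


def supervisor(request: str) -> str:
    """Delegate to the first matching specialist, or fall back to a generalist."""
    text = request.lower()
    for keyword, collaborator in ROUTES.items():
        if keyword in text:
            return collaborator(request)
    return general_agent(request)


for req in ["I need a refund for invoice 1042", "Schedule a meeting with Sam", "What are your hours?"]:
    print(supervisor(req))
```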

Pricing

- On-demand: pay per token usage, varies by model (e.g., Nova Micro: $0.000035/1k input tokens)

- Provisioned throughput: discounted rates with 1-month or 6-month commitments

- Batch mode: reduced costs for large-scale processing

- Additional services: knowledge bases and guardrails are priced separately
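Here is the on-demand example in numbers. At the Nova Micro input rate quoted above ($0.000035 per 1,000 input tokens), 50 million input tokens cost 50,000 × $0.000035 = $1.75. The small helper below does the same arithmetic with `Decimal` to avoid floating-point rounding. The token volume is an arbitrary example, and output tokens are billed separately at their own rate, which this calculation does not cover.

```python
from decimal import Decimal


def input_token_cost(tokens: int, price_per_1k: Decimal) -> Decimal:
    """On-demand cost of input tokens, given a price per 1,000 tokens."""
    return Decimal(tokens) / Decimal(1000) * price_per_1k


NOVA_MICRO_INPUT_PER_1K = Decimal("0.000035")  # rate quoted in the pricing list above

monthly_input_tokens = 50_000_000  # arbitrary example volume
cost = input_token_cost(monthly_input_tokens, NOVA_MICRO_INPUT_PER_1K)
print(f"${cost:.2f}")  # $1.75
```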

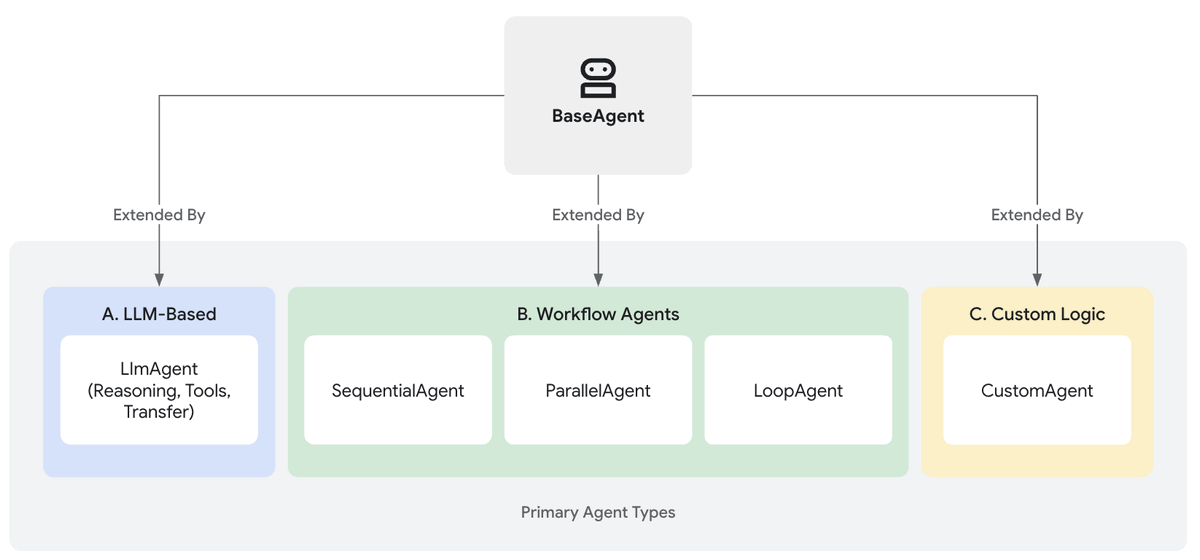

Google Agent Development Kit

When to use: ADK is a code-first Python framework for building production-ready agents that require deep integration with Google Cloud and Vertex AI.

Google's Agent Development Kit (ADK) is a code-first Python framework for building multi-agent applications intended for real-world deployment and integrated with Google Cloud services. It provides flexible orchestration patterns and deep integration with Gemini models and Vertex AI.

- Flexible orchestration: workflow agents (Sequential, Parallel, Loop) and LLM-driven dynamic routing.

- Google Cloud integration: native Vertex AI deployment, Gemini model access, Cloud services integration

- Multi-agent systems: support for complex agent coordination and collaboration patterns.

- Production-ready: deployment options suitable for business applications, with scaling capabilities.

- Rich tooling: comprehensive development tools (Google Search, code execution, automatic tool schema generation) and debugging capabilities.

- Agent-to-Agent protocol: support for the A2A protocol for inter-agent communication.
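The three workflow-agent patterns named above (Sequential, Parallel, Loop) can be illustrated with plain `asyncio`. This hypothetical sketch shows the control flow only and is not ADK code. Steps are async functions over a state dictionary. Parallel branches run concurrently on copies of the state and are merged afterwards, with later branches winning on key conflicts. The loop repeats a step until a condition holds or an iteration cap is reached. `fetch_news`, `fetch_weather`, and `refine` are invented placeholders.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List

State = Dict[str, object]
Step = Callable[[State], Awaitable[State]]


async def sequential(steps: List[Step], state: State) -> State:
    """Run steps one after another, threading the state through."""
    for step in steps:
        state = await step(state)
    return state


async def parallel(steps: List[Step], state: State) -> State:
    """Run steps concurrently on copies of the state, then merge their outputs."""
    results = await asyncio.gather(*(step(dict(state)) for step in steps))
    merged = dict(state)
    for result in results:
        merged.update(result)  # later branches win if keys collide
    return merged


async def loop(step: Step, state: State, done: Callable[[State], bool],
               max_iterations: int = 5) -> State:
    """Repeat a step until `done(state)` is true or the iteration cap is hit."""
    for _ in range(max_iterations):
        state = await step(state)
        if done(state):
            break
    return state


async def fetch_news(state: State) -> State:
    await asyncio.sleep(0.1)  # simulate I/O, e.g. a search tool call
    return {**state, "news": "3 headlines"}


async def fetch_weather(state: State) -> State:
    await asyncio.sleep(0.1)
    return {**state, "weather": "sunny"}


async def refine(state: State) -> State:
    return {**state, "drafts": int(state.get("drafts", 0)) + 1}


async def main() -> State:
    pipeline: List[Step] = [
        lambda s: parallel([fetch_news, fetch_weather], s),
        lambda s: loop(refine, s, done=lambda st: int(st["drafts"]) >= 3),
    ]
    return await sequential(pipeline, {"topic": "daily brief"})


result = asyncio.run(main())
print(result)  # {'topic': 'daily brief', 'news': '3 headlines', 'weather': 'sunny', 'drafts': 3}
```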

Pricing

- ADK framework: free to use (open source Python framework)

- Usage costs: based on underlying Google Cloud services (Vertex AI, Gemini models) consumption

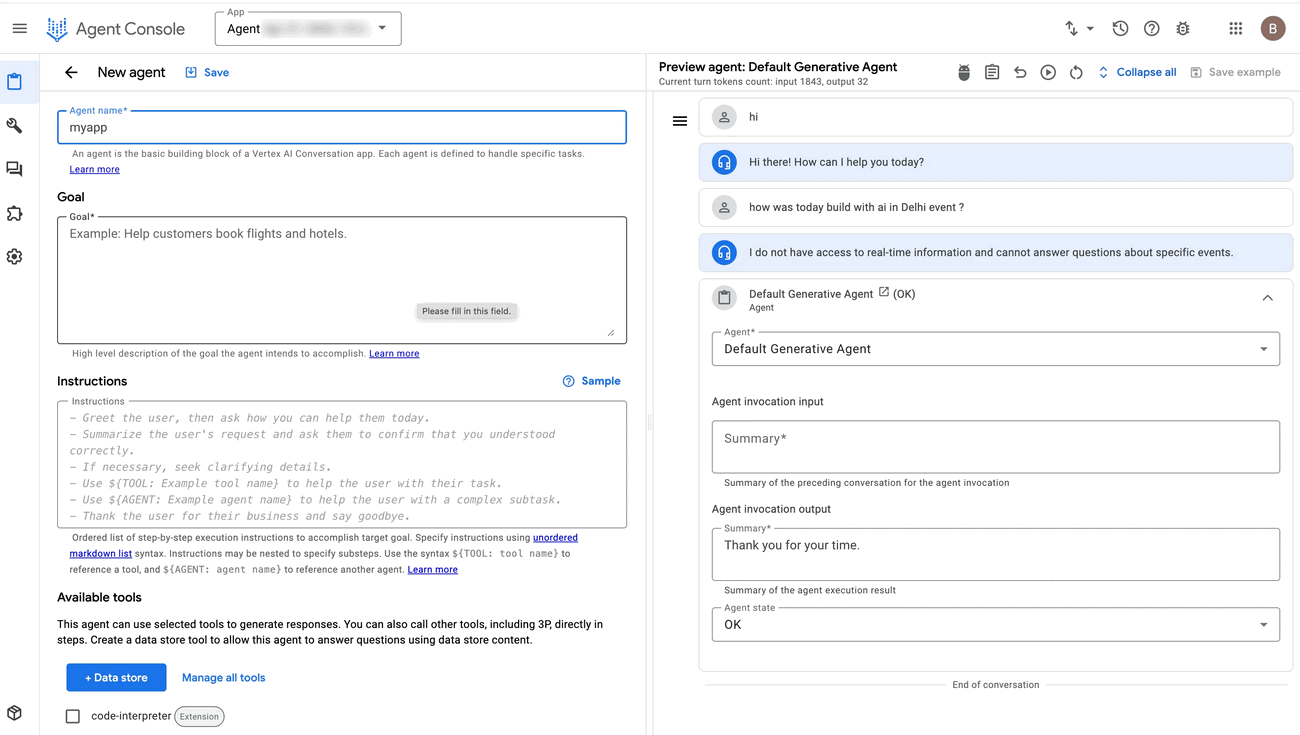

Vertex AI Agent Builder

When to use: use this managed, no-code platform to quickly build and deploy conversational agents that are integrated with your enterprise data on Google Cloud.

Vertex AI Agent Builder is Google's platform for creating AI agents integrated with enterprise data sources. It provides a visual interface for assembling no-code agents that can access knowledge bases and connect to business systems.

- No-code development: visual interface for assembling conversational agents (based on the agents pre-built with ADK)

- Conversational design: advanced dialogue management and context handling

- Enterprise data integration: connect to databases, documents, and business systems

- Built-in RAG: automatic retrieval-augmented generation from knowledge sources

- Multi-framework support: in addition to ADK, developers can deploy agents built with popular open-source frameworks like LangChain, LangGraph, AG2 or Crew.ai

- Google Cloud integration: native access to Google services and APIs

Pricing

- Usage-based: pay per conversation and API calls

- Integration costs: additional charges for data source connections and storage

- Enterprise edition: premium pricing for advanced features

Microsoft Semantic Kernel Agent Framework

When to use: this framework is designed for multi-language enterprise development (C#, Python, Java) within the Microsoft ecosystem, featuring deep Azure integration.

Semantic Kernel’s Agent Framework is Microsoft's multi-language orchestration platform supporting .NET, Python, and Java for building production-grade agent systems. It provides skill-based planning and deep Azure AI services integration.

- Multi-language support: native C#, Python, and Java implementations

- Agent orchestration: hierarchical and collaborative agent patterns with state management

- Azure AI integration: deep integration with Azure OpenAI and Cognitive Services

- Built for corporate use: includes production deployment patterns with monitoring and scaling

- Memory management: advanced conversation and context management
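One way to picture the functions-and-plugins architecture is a registry of named, described functions grouped into plugins, which a planner or model can then discover and call. The Python sketch below is a hypothetical illustration of that structure only. Semantic Kernel has its own decorators and plugin APIs, which this code does not reproduce. `PluginRegistry`, `calendar.free_slots`, and `math.add` are invented examples.

```python
from typing import Any, Callable, Dict


class PluginRegistry:
    """Groups callable functions into named plugins with descriptions (illustration only)."""

    def __init__(self) -> None:
        self._functions: Dict[str, Callable[..., Any]] = {}
        self._descriptions: Dict[str, str] = {}

    def function(self, plugin: str, description: str) -> Callable:
        """Decorator that registers a function as `<plugin>.<function name>`."""
        def register(fn: Callable[..., Any]) -> Callable[..., Any]:
            qualified = f"{plugin}.{fn.__name__}"
            self._functions[qualified] = fn
            self._descriptions[qualified] = description
            return fn
        return register

    def catalog(self) -> Dict[str, str]:
        """What a planner or model would see when choosing a function."""
        return dict(self._descriptions)

    def invoke(self, qualified_name: str, **kwargs: Any) -> Any:
        return self._functions[qualified_name](**kwargs)


registry = PluginRegistry()


@registry.function("calendar", "Return free meeting slots for a given day")
def free_slots(day: str) -> list:
    return [f"{day} 10:00", f"{day} 14:00"]


@registry.function("math", "Add two numbers")
def add(a: float, b: float) -> float:
    return a + b


print(registry.catalog())
print(registry.invoke("calendar.free_slots", day="Mon"))  # ['Mon 10:00', 'Mon 14:00']
```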

Pricing

- Open source: free framework (MIT license)

- Usage costs: based on underlying Azure AI services consumption

Azure AI Foundry Agent Service

When to use: opt for this fully managed service to deploy agents on Azure with enterprise-grade security, compliance, and native Microsoft 365 integration.

Azure AI Foundry Agent Service is Microsoft's fully managed platform for deploying and scaling agent applications built with Semantic Kernel or other frameworks. It provides enterprise-grade infrastructure with built-in security and compliance features.

- Fully managed: no infrastructure management required.

- Advanced security and compliance: built-in data governance and access controls tailored for corporate needs.

- Microsoft 365 integration: native integration with Office, Teams, and SharePoint.

- Auto-scaling: automatic resource allocation and performance optimization.

- Monitoring & analytics: built-in observability and performance tracking.

- Multi-tenant support: isolated environments for different organizational units.

Pricing

- Usage-based: pay per agent execution and resource consumption

- Enterprise tiers: volume discounts and dedicated support via Azure partners

- Integration costs: additional charges for premium Microsoft 365 integrations

Benefits of using AI agent orchestration frameworks

Multi-agent AI orchestration has several advantages over single-agent approaches:

- Task specialization reduces complexity. Instead of one agent handling everything poorly, specialized agents excel at specific functions. Your data analysis agent focuses solely on processing reports while your scheduling agent handles calendar management, each optimized for its domain.

- Cost efficiency at scale. You can use smaller, cheaper models for specialized tasks instead of requiring the most capable (and expensive) model for everything.

- Better scalability and performance. When workload increases, you can scale individual agent types based on demand rather than upgrading entire systems. Parallel agent execution also reduces overall processing time compared to sequential single-agent workflows.

- Easier maintenance and updates. Modifying one agent's behavior doesn't require rebuilding your entire system. You can update, test, and deploy changes to specific agents without disrupting the whole workflow.

These benefits compound as systems grow beyond simple chatbots.

Wrap up

We've explored 11 AI agent orchestration frameworks across three categories and reached the following conclusions:

- For visual agent building: n8n is the clear leader among low-code options. While Zapier Agents offer simplicity, they lack the configurability needed for complex workflows. Flowise provides excellent LangChain integration but misses the traditional automation layer that business workflows require. OpenAI's Agent Builder is a brand new product that only supports LLMs from OpenAI.

- For developer-focused projects: the SDK landscape offers multiple approaches beyond the LangChain ecosystem. CrewAI and OpenAI Agents SDK provide framework-agnostic solutions, while LangGraph gives you precise control within the LangChain ecosystem.

However, Microsoft Semantic Kernel and Google ADK, despite being open source, are designed and optimized primarily for their respective cloud ecosystems - keep this in mind if you need true vendor flexibility.

- For enterprise deployment: major cloud providers have strategically separated their open-source SDKs from managed infrastructure services.

While managed platforms like Amazon Bedrock Agents, Vertex AI Agent Builder, and Azure AI Agent Service offer compelling features and reduced operational overhead, they create vendor lock-in risks. Evaluate these carefully against your long-term flexibility requirements and multi-cloud strategies.

What's next?

Ready to build your own AI agent orchestration system? Here are your next steps:

- Learn what AI agents are and how they work - from theory to practical deployment patterns.

- Follow our step-by-step guide to building AI agents with free workflow templates and build your first agent.

- Explore 9 AI agent frameworks and why developers choose n8n.

- Read the n8n scalability benchmark to understand performance limits and scaling strategies for production workflows.

Finally, try n8n's AI agent capabilities for free and explore community AI workflows to see production-ready orchestration systems in action.