Let's face it: AI has evolved beyond being a trendy term. It's not just about adding a chatbot to your website, either.

Implementing AI involves building complex, intelligent workflows that can fundamentally transform your operations. This is where many businesses struggle, especially when it comes to managing data, deploying models, and orchestrating workflows.

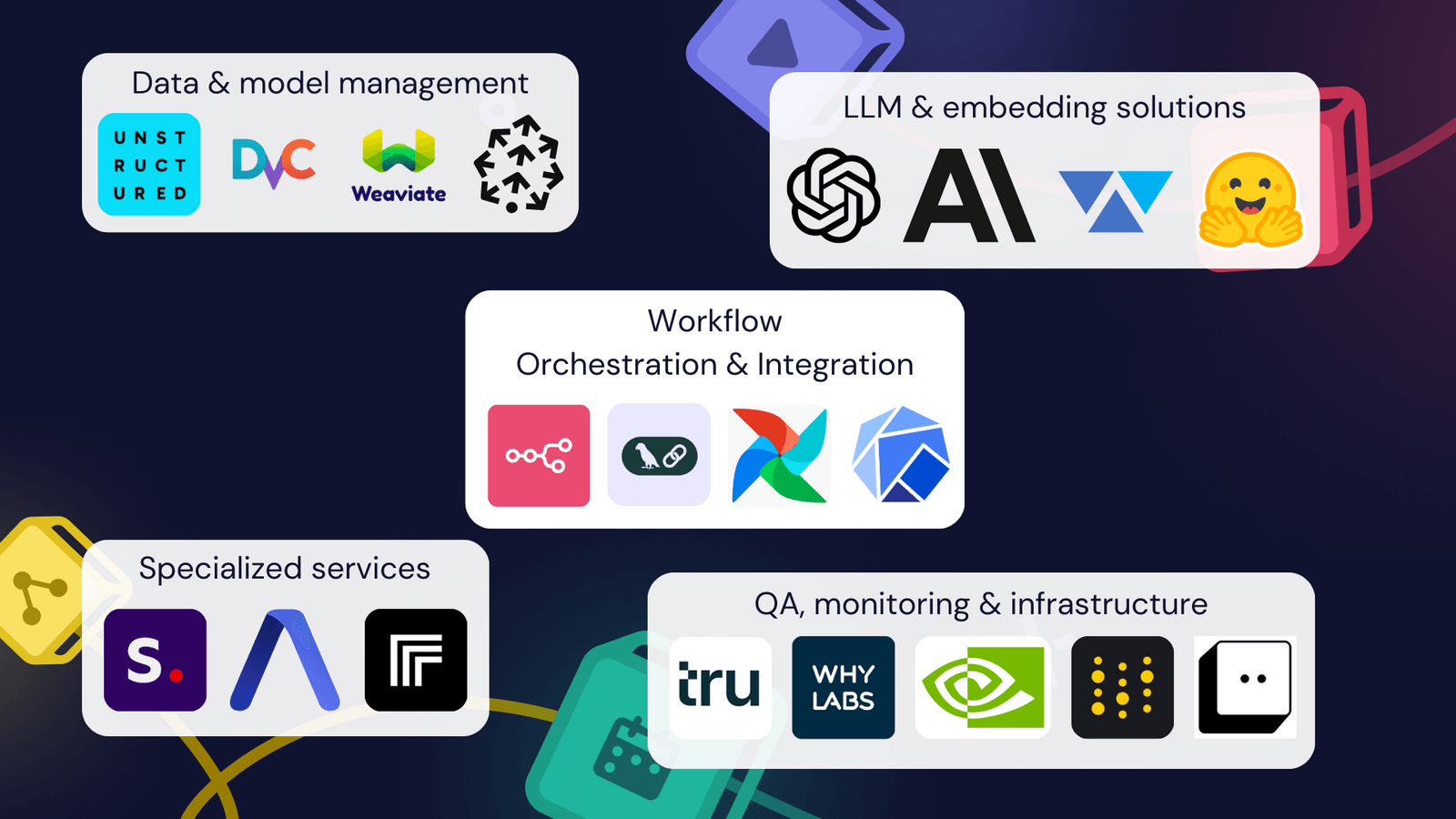

To help you navigate these challenges, we've created a list of 20 enterprise-grade AI tools organized into five key categories:

- Data and model management: handling the backbone of AI operations, ensuring data integrity and model versioning.

- LLM and embedding solutions: accessing and integrating with large language models.

- Specialized AI services: APIs and services that offer specific AI capabilities, from image generation to speech recognition.

- Quality assurance, monitoring and infrastructure: ensuring AI systems perform reliably and efficiently at scale.

- Workflow orchestration and integration: tools that tie everything together, allowing for automation and management of complex AI workflows.

In this article, we’ll also cover how these AI solutions integrate into complex workflows using n8n.

Let’s get into it!

What AI is used in business?

AI in business isn't a universal solution but a flexible tool that can be adapted to different needs. Let's take a look at the main types of AI used in large companies:

1. Natural language processing (NLP): powers chatbots, tools for sentiment analysis and document processing.

2. Expert systems: have been widely used in complex decision-making scenarios such as medical diagnosis or financial planning. These systems emulate human expertise in specific domains.

3. Machine learning (ML): these algorithms learn from data to make predictions or decisions. Some common applications are within predictive analytics, fraud detection and personalization engines.

4. Computer vision: used in quality control, security systems and autonomous vehicles. It allows machines to interpret and act on visual information.

5. Robotic process automation (RPA): automates repetitive tasks across applications. It's like a digital workforce handling routine operations.

6. Generative AI: the newest of these technologies, supporting content creation, code generation and creative tasks. Tools like GPT-4, Stable Diffusion, Suno and Sora all fall into this broad GenAI category.

Each AI type complements business processes, from customer service to product development, operations to strategic planning. The key is identifying where AI can add the most value in your business context.

What is the best AI tool for business?

In this section, we'll introduce you to the best modern AI tools, focusing on those that can be integrated into complex, enterprise-grade workflows.

While not every AI project requires tools from every category below, this classification helps you understand the elements needed to build and maintain AI systems in enterprise environments.

Data and model management

Unstructured

Use cases: Document preprocessing for Retrieval Augmented Generation (RAG) and fine-tuning

Overview: unstructured.io provides tools that convert large volumes of unstructured documents into structured pieces of data suitable for AI applications. The company offers three main products: a serverless API for fast document conversion, a no-code enterprise platform for RAG-ready data preparation and an open-source library for prototyping.

Key features:

- Automated document parsing for various formats (PDFs, images, emails, etc.).

- Modular functions and connectors for flexible data ingestion and pre-processing.

- Open-source library with Docker support for easy integration and development.

Pricing:

- Serverless API: usage-based

- Enterprise platform: upon request

- Open-source library: free

DVC

Use cases: Version control for machine learning projects, data and model management, experiment tracking

Overview: DVC is an open-source version control system for machine learning projects. It enables data scientists and ML engineers to version data, models and experiments alongside their code. This approach provides reproducibility and collaboration features similar to Git, but is optimized for large files and complex ML workflows.

Key features:

- Data and model versioning: store and version large files and directories in cloud storage while keeping metadata in Git.

- Reproducible ML pipelines: define and version ML workflows, ensuring reproducibility across different environments.

- Experiment tracking: track, compare and manage ML experiments directly in your local Git repository.
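The idea of keeping large files in storage while only small metadata lives in Git can be sketched with content addressing. The following is a simplified illustration, not DVC's actual code or file format: the function name `add_to_cache`, the cache layout and the JSON pointer file are all assumptions made for this example.

```python
import hashlib
import json
import shutil
import tempfile
from pathlib import Path


def add_to_cache(data_file: Path, cache_dir: Path) -> Path:
    """Copy a file into a content-addressed cache and write a small pointer file."""
    # Reads the whole file at once; a real tool would hash large files in chunks.
    digest = hashlib.sha256(data_file.read_bytes()).hexdigest()
    cached = cache_dir / digest[:2] / digest[2:]
    cached.parent.mkdir(parents=True, exist_ok=True)
    if not cached.exists():
        shutil.copy2(data_file, cached)
    # The pointer is tiny, so it is the part you would commit to Git.
    pointer = data_file.parent / (data_file.name + ".pointer.json")
    pointer.write_text(json.dumps({"path": data_file.name, "sha256": digest}))
    return pointer


with tempfile.TemporaryDirectory() as tmp:
    workdir = Path(tmp)
    dataset = workdir / "train.csv"  # hypothetical dataset
    dataset.write_bytes(b"id,label\n1,cat\n2,dog\n")
    pointer = add_to_cache(dataset, workdir / "cache")
    pointer_info = json.loads(pointer.read_text())
```

Because the pointer records a hash of the content, any change to the dataset produces a different pointer, which is what makes a data version show up as an ordinary Git diff.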

Pricing:

- Open-source: the core version is free

- DVC studio: free for individuals and small teams, enterprise plan available on request

Weaviate

Use cases: AI-native database for intuitive applications, hybrid search, RAG and generative feedback loops

Overview: Weaviate is an open-source vector database designed to support AI-native applications. It provides a flexible, reliable foundation for developers and teams of all sizes on their AI journey.

Key features:

- Hybrid search: combines vector and keyword techniques for contextual, precise results across all data modalities.

- RAG (Retrieval-Augmented Generation): enables building trustworthy generative AI applications using proprietary data, prioritizing privacy and security.

- Generative feedback loops: enriches datasets with AI-generated answers, improving personalization and reducing manual data cleaning efforts.
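To make the hybrid search idea more concrete, here is a minimal sketch of blending keyword and vector scores. It is not Weaviate's implementation: the function names, the `alpha` weight and the min-max normalization are illustrative assumptions, and the raw scores are made up.

```python
def min_max(scores):
    """Rescale scores to [0, 1] so keyword and vector scores are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_scores(keyword, vector, alpha=0.5):
    """Blend two score dicts: alpha=0 is keyword-only, alpha=1 is vector-only."""
    kw, vec = min_max(keyword), min_max(vector)
    docs = set(kw) | set(vec)
    fused = {d: (1 - alpha) * kw.get(d, 0.0) + alpha * vec.get(d, 0.0) for d in docs}
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


# Hypothetical raw scores for three documents.
keyword = {"doc_a": 12.0, "doc_b": 3.0, "doc_c": 0.0}
vector = {"doc_a": 0.20, "doc_b": 0.90, "doc_c": 0.85}
ranking = hybrid_scores(keyword, vector, alpha=0.5)
```

With these made-up numbers, `doc_a` wins on keywords alone and `doc_b` wins on vectors alone. The blended ranking puts `doc_b` first because it scores reasonably on both signals.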

Pricing:

- Open-source vector database: free

- Weaviate serverless cloud starts from $25/month and also offers usage-based pricing

- Weaviate enterprise cloud offers annual contracts, pricing on request

Pinecone

Use cases: Vector database for AI applications, hybrid search and retrieval-augmented generation (RAG)

Overview: Pinecone offers a serverless vector database, positioning itself as a scalable, managed alternative to open-source options. This fully managed approach may appeal to teams that prefer to hand off scaling and maintenance. Pinecone's focus on ease of use and integration with popular AI models could make it attractive for rapid development and deployment of AI applications.

Key features:

- Serverless architecture: with no configuration or scaling required, developers can start building quickly.

- Hybrid search: combines vector search with keyword boosting for optimized results.

- Real-time index updates: ensures fresh, relevant results as data changes and grows.

Pricing:

- Free Starter plan: 1 project, 5 serverless indexes, up to 2 GB storage

- Pay-as-you-go pricing for higher tiers based on usage

- Enterprise plans available for large-scale deployments

LLM and embedding solutions

Now that we’ve covered AI tools for preparing and storing the data, let's take a look at the main providers for creating embeddings and accessing LLMs.

OpenAI API

Use cases: Natural language processing, content generation, code completion and multimodal AI applications

Overview: The OpenAI API provides access to some of the most advanced language models available. It's known for its cutting-edge capabilities and frequent updates, making it the first choice for many companies looking to implement state-of-the-art AI solutions. While OpenAI's models perform strongly, the API can be more expensive than alternatives and offers less control over the underlying technology, which may be a consideration for some enterprises.

Key features:

- GPT-4o and GPT-4 Turbo models: powerful language models for a wide range of tasks.

- Multimodal capabilities:

  - DALL-E 3 for image generation.

  - GPT-4 Vision for image understanding and analysis.

- Audio processing:

  - Whisper API for robust speech-to-text transcription.

  - Text-to-speech (TTS) for natural-sounding speech synthesis.

- Embeddings: generate vector representations of text for semantic search and analysis.

- Assistants API: building custom AI assistants with specific knowledge and capabilities.

- Fine-tuning: adaptation of models to proprietary data to improve performance on specific tasks.

Pricing:

- Pay-per-use pricing for each of the models via APIs

Anthropic

Use cases: Conversational AI, content generation, complex reasoning tasks and multimodal interactions

Overview: Anthropic offers several Claude models that are known for their strong performance in reasoning and analysis tasks. While Anthropic is a leader in text generation, it lacks certain features, such as embeddings and audio processing.

Key features:

- Advanced language models: the Claude 3 model family, and Claude 3.5 Sonnet in particular, provides powerful natural language processing and generation in multiple languages.

- Extensive context window: can process significantly more information (up to 200,000 tokens) in a single conversation.

- Multimodal capabilities: image understanding and analysis through API.

- Function calling: enables more structured interactions and integration with external tools and databases.

Pricing:

- Pricing based on usage

LLMware (by AI Bloks)

Use cases: enterprise RAG pipelines, small specialized language models, custom AI model development, and multi-model agent workflows

Overview: llmware.ai offers a different approach to building LLM-based applications with small, specialized models. This enables private deployment, secure integration with enterprise knowledge sources and cost-effective tuning to specific business processes. Unlike larger models, llmware's solutions are designed to run efficiently on CPUs, making them more accessible and cost-effective for repetitive and well-defined scenarios.

Key features:

- Small specialized models (or SLIMs – Structured Language Instruction Models): over 50 fine-tuned models (1-7B parameters) optimized for specific tasks, including RAG, classification and function calling.

- CPU-friendly: models optimized to run on CPUs, reducing infrastructure costs.

- RAG pipeline: integrated components for connecting knowledge sources with generative AI models.

- Custom model training: services for fine-tuning models to specific domains and use cases.

- Open-source: core functionality and models available as open-source.

Pricing:

- Open-source version available for free

- Professional and enterprise plans come with custom pricing

Hugging Face

Use cases: Model hosting, fine-tuning, deployment of open-source AI models

Overview: Hugging Face is a community-driven platform that hosts a vast array of open-source models. It has evolved into a comprehensive ecosystem for AI development, providing tools for the entire machine-learning lifecycle. While it started as a hub for open-source models, Hugging Face now offers enterprise-grade solutions for organizations looking to scale their AI initiatives securely and efficiently.

Key features:

- Model Hub: access to thousands of pre-trained or fine-tuned models.

- Transformers library: powerful tools for working with state-of-the-art models.

- Spaces: easy deployment of ML apps.

- Enterprise Hub: advanced features for large-scale AI development.

- Inference endpoints: deploy models on a fully managed infrastructure.

- Custom model training: services for fine-tuning models to specific domains.

Pricing:

- Free tier: unlimited public models, datasets and Spaces hosting

- Pro Account: $9/month for advanced features like ZeroGPU for Spaces

- Enterprise Hub: starting at $20/user/month with custom pricing for large organizations

- Inference endpoints: hourly-based pricing

Specialized AI services

Many businesses require more specialized AI functions. This is where dedicated AI services come into play. These tools offer specific, highly refined AI capabilities that can be seamlessly integrated into your workflows.

Stability AI

Use cases: Image generation, video generation, language models and 3D content creation

Overview: Stability AI offers several AI tools for various creative tasks. The platform is primarily known for Stable Diffusion, a powerful open-source image generation model. The company offers both API access for developers and user-friendly interfaces like StableStudio for end-users. Stability AI's offering spans across multiple AI domains, making it a versatile choice for businesses looking to incorporate AI into their creative workflows.

Key features:

- Diverse AI services: provides tools for generating text, images, videos and 3D content.

- Stable Diffusion integration: provides access to various versions of the popular Stable Diffusion model, including the advanced Stable Diffusion 3.

- Open-source friendly: offers StableStudio, an open-source version of their DreamStudio interface, allowing for customization and community contributions.

Pricing:

- Credit-based system: $10 per 1,000 credits

- 25 free credits for new users

- Enterprise plans available for large-scale usage

AssemblyAI

Use cases: Speech-to-text transcription, audio intelligence, voice data analysis

Overview: AssemblyAI offers several advanced speech AI models, including highly accurate speech-to-text transcription, speaker detection, sentiment analysis and more. Its API is designed for easy integration, making it an attractive option for developers looking to implement voice data analysis into their applications quickly. With their latest Universal-1 model trained on 12.5 million hours of multilingual audio data, AssemblyAI is positioning itself as a leader in speech recognition technology.

Key features:

- High accuracy speech-to-text: >92.5% accuracy with support for over 99 languages.

- Real-time streaming: low latency (<600ms) for synchronous audio stream transcription.

- Audio intelligence: includes features such as speaker diarization, sentiment analysis, PII redaction and integration with Large Language Models (LeMUR).

Pricing:

- Free tier: up to 100 hours of audio transcription

- Pay-as-you-go pricing

- Custom plans: available for large-scale deployments with volume discounts

Replicate

Use cases: Running and fine-tuning open-source AI models, deploying custom models at scale

Overview: Replicate positions itself as a platform for deploying and executing AI models that bridges the gap between researchers and creators. Unlike more research-oriented platforms such as Hugging Face, Replicate is aimed at a broader audience, including developers and creative professionals. It offers a wide array of models with concrete usage examples, from text and image generation to video and music creation, making it a one-stop shop for various AI applications.

Key features:

- Extensive model library: hosts thousands of open-source models, surpassing the specialized offerings of Stability AI and AssemblyAI.

- One-line deployment: simplifies the execution of AI models with a single line of code and lowers the barrier for integration.

- Custom model deployment: allows users to deploy their own models with Cog, Replicate's open-source packaging tool.

Pricing:

- Pay-as-you-go model: billing based on compute time used

- No upfront costs or idle charges for public models

- Free tier available for new users

Quality assurance, monitoring and infrastructure

As AI systems become more integral to business operations, ensuring their reliability, performance, and scalability becomes crucial. This section covers tools that help you maintain the quality and efficiency of your AI infrastructure, from monitoring model performance to managing GPU resources.

TruEra

Use cases: AI observability, LLM evaluation and monitoring, predictive AI quality management

Overview: TruEra offers an observability platform that covers the entire AI lifecycle, from development to production. It's particularly notable for its advanced capabilities in LLM evaluation and monitoring as well as its robust predictive AI quality management features. TruEra's solutions are designed to help enterprises build, deploy and maintain high-quality AI models and LLM applications at scale.

Key features:

- Enterprise-class scalability: ability to monitor hundreds of thousands of events per second, accommodating even the largest LLMs.

- Full lifecycle AI quality management: analyzes multiple aspects of model quality, including accuracy, generalization, conceptual soundness, stability, reliability and fairness.

- LLM observability: offers comprehensive tools for evaluating and monitoring LLM applications, including feedback features to optimize performance and minimize risks like hallucinations.

Pricing:

- TruLens, an open-source library for LLM app testing and tracking, is available for free

- Pricing information is not publicly available as TruEra recently joined Snowflake

WhyLabs

Use cases: AI observability, security and optimization for predictive ML and generative AI applications

Overview: WhyLabs offers an AI control center that goes beyond traditional observability by providing tools to observe, secure and optimize AI applications. The platform addresses the challenges posed by both predictive ML models and large language models (LLMs), with a focus on real-time security and control for generative AI applications.

Key features:

- Real-time AI security: implements guardrails in accordance with MITRE ATLAS and OWASP LLM standards to prevent issues like toxic language, prompt injections and hallucinations in real time.

- Comprehensive observability: monitors data quality, model performance and drift throughout the entire AI lifecycle, from development to production.

- AI optimization tools: provides feedback mechanisms and analytics for continuous improvement of AI models and applications, including support for RLHF in generative models.

Pricing:

- Free plan available for basic observability features

- 14-day free trial for advanced security features

- Enterprise pricing available upon request

NVIDIA

Use cases: AI infrastructure, GPU acceleration for deep learning, high-performance computing

Overview: Speaking of AI infrastructure, NVIDIA is one of the few companies that actually makes hardware for AI computing. It's a leader in both AI chips and the software stack around them, providing enterprise-level solutions for building and scaling AI infrastructure. NVIDIA's enterprise offerings range from powerful GPUs to complete AI supercomputers, supported by a suite of software tools for AI development and deployment.

Key features:

- DGX systems: AI supercomputers that integrate GPUs, networking and software for turnkey AI infrastructure.

- NVIDIA AI Enterprise: a comprehensive software suite for developing and deploying AI applications in the enterprise.

- NVIDIA Base Command Platform: a cloud-based development hub for AI and data science workflows.

Pricing:

- Hardware solutions, such as DGX systems, start in the tens of thousands of dollars

- NVIDIA AI Enterprise is subscription-based, with pricing available upon request

- Cloud-based solutions, such as Base Command Platform, offer pay-as-you-go options

- Enterprise-specific pricing is available through consultation

Weights & Biases

Use cases: ML experiment tracking, hyperparameter optimization, model registry and LLM application development

Overview: Weights & Biases is an AI developer platform that helps teams build, fine-tune and monitor machine learning models from experimentation to production.

Key features:

- Experiment tracking: automatically log and visualize every detail of your ML experiments, including metrics, hyperparameters and model artifacts.

- Sweeps: efficiently optimize hyperparameters with automated hyperparameter search.

- Weave: confidently develop and evaluate GenAI applications, including tools for debugging LLM inputs/outputs and rigorous model evaluations.

Pricing:

- Free tier with limited storage and users, community support only

- Teams: $50/month per user + usage

- Enterprise: custom plans upon request

BentoML

Use cases: Model serving, AI application development, LLM inference, image generation, speech recognition

Overview: BentoML is a unified open-source framework for building performant and scalable AI applications with Python. It simplifies the process of transforming ML models into production-ready endpoints and offers a set of tools for serving optimization, model packaging and production deployment.

Key features:

- Unified distribution format: simplifies collaboration through a file archive known as Bento, in which all necessary dependencies are packaged.

- Flexible model support: compatible with various AI models and frameworks, including pre-trained models from Hugging Face and custom-trained models.

- Inference optimization: offers high-performance delivery with techniques like model inference parallelization and adaptive batching.

Pricing:

- BentoML itself is open-source and free to use

- BentoCloud: pay-as-you-go compute pricing

- Pro and Enterprise: customized pricing with additional features and support

Workflow orchestration and integration

With all the powerful AI tools at your disposal, the next challenge is integrating them into efficient workflows. This is where workflow orchestration comes in. These platforms allow you to connect various AI services with everyday software, automate complex processes and manage your AI pipelines at scale.

n8n

Use cases: Workflow automation, AI integration, data processing, API orchestration

Overview: n8n is a source-available low-code automation platform particularly well-suited for enterprise environments.

Unlike many low-code tools, n8n offers on-premises deployment options, making it ideal for organizations with strict data security requirements. Its intuitive visual interface makes it accessible to beginners, while its extensibility and custom code support appeal to advanced users and developers.

Once your technical team has deployed AI models and exposed them via custom endpoints, n8n takes things further: even citizen developers can then build efficient workflows on top of those endpoints.

Key features:

- Advanced AI nodes: pre-built integrations with popular AI services and LLMs, a collection of nodes that implement LangChain's functionality.

- Flexible deployment: cloud or on-premises to meet different security and enterprise compliance requirements.

- Scalability: handle high-volume, complex workflows using queues and horizontal scaling.

- Extensibility: custom nodes, npm libraries, system tools or code (JS and Python).

- Enterprise-grade security: role-based access control (RBAC), audit logs, encryption at rest and in transit to meet stringent enterprise security requirements.

- AI workflow templates: pre-built templates for common AI use cases.

- Version control and collaboration: Git integration for workflow versioning and sharing workflows and credentials among team members.

- Automated error handling: error handling and retry mechanisms to ensure the robustness of AI-driven workflows.
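The retry mechanism mentioned above follows a general pattern that can be sketched in a few lines of Python. This is not n8n's internal implementation (n8n has its own retry settings); the helper `call_with_retry`, its parameters and the flaky step are hypothetical names used only to illustrate retrying with exponential backoff.

```python
import time


def call_with_retry(func, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call func(); after a failure wait base_delay * 2**attempt, then try again."""
    for attempt in range(max_attempts):
        try:
            return func()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)


# Hypothetical flaky step that fails twice, then succeeds.
calls = {"count": 0}


def flaky_step():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


delays = []  # record the waits instead of actually sleeping
result = call_with_retry(flaky_step, sleep=delays.append)
```

Passing `sleep` as a parameter is a small design choice that lets the example run instantly. In production code you would leave the default so the waits really happen.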

Pricing:

- Free self-hosted community edition

- n8n Cloud starts from $20/month

- Enterprise: customized pricing upon request

LangChain

Use cases: Building complex AI applications, chaining multiple LLM operations

Overview: LangChain is a framework for developing applications powered by language models. Although n8n already offers a low-code implementation of the JavaScript version of LangChain, experienced developers may prefer to use the original library for more granular control and customization of their AI workflows.

Key features:

- Flexible LLM integration.

- Chainable operations.

- Memory management for conversational AI.

Pricing:

- Open-source and free to use

- Paid LangSmith and LangGraph products

Airflow

Use cases: Data pipeline orchestration, batch processing

Overview: Apache Airflow is an open-source platform that allows you to programmatically create, schedule and monitor workflows. While n8n excels in real-time, event-driven automation with a low-code interface, Airflow may be the preferred solution for data engineers who code their workflows and need to manage complex data pipelines.

Key features:

- DAG-based (Directed Acyclic Graph) workflows.

- Extensive operator library.

- Scalable architecture.
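To illustrate what "DAG-based" means in practice, here is a small sketch that uses Python's standard `graphlib` module rather than Airflow's own API. The task names are hypothetical. The point is only that dependencies determine a valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "train": {"clean"},
    "report": {"clean"},
    "publish": {"train", "report"},
}

# static_order() yields every task after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())
```

Because the graph must be acyclic, `TopologicalSorter` raises `graphlib.CycleError` if two tasks depend on each other. An orchestrator needs that same guarantee before it can schedule anything.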

Pricing:

- Open-source and free to use

- Various vendors offer paid managed installations

Kubeflow

Use cases: ML workflow deployment on Kubernetes

Overview: Kubeflow is an open-source machine learning platform designed for deploying ML workflows on Kubernetes. Unlike n8n, which is focused on general workflow automation with AI capabilities, Kubeflow is specifically designed for ML operations (MLOps) in Kubernetes environments. Organizations that are seriously investing in Kubernetes and need specialized ML pipeline management may prefer Kubeflow over other workflow orchestration tools.

Key features:

- End-to-end ML workflow management.

- Integration with popular ML frameworks.

- Kubernetes-native design.

Pricing:

- Open-source and free to use

How to use AI tools for business?

Starting to use AI tools for business involves a strategic approach to ensure successful integration and maximum benefits.

Begin by identifying specific areas where AI can add value, such as customer service, marketing, operations, finance, or human resources.

Next, research and select the AI tools mentioned above that align with your business needs and budget. Ensure you have clean, well-organized data to feed into AI systems, as the quality of your data will directly impact AI performance.

Start with small pilot projects to test the effectiveness of AI tools before full-scale implementation. Finally, train your employees to effectively use these tools, continuously monitor AI performance, and make necessary adjustments to optimize outcomes.

For a pilot solution, n8n provides a versatile and user-friendly platform for general workflow automation with strong AI integration capabilities. This makes a wide range of enterprise use cases possible:

Get access to LLMs from n8n

With several dedicated nodes, you can access large language models without any coding. The OpenAI node is a good place to start.

Most other LLM providers are accessible via the HTTP Request node. With a little configuration, you can access almost any other service imaginable, without waiting until a ready-made node is available.
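As a rough idea of what such a request contains, the sketch below prepares (but does not send) a call to Anthropic's Messages API in plain Python. The endpoint, header names and model ID reflect our understanding of the public API at the time of writing and should be treated as assumptions to verify against the provider's documentation. The function name and prompt are hypothetical.

```python
import json
import urllib.request


def build_messages_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request to Anthropic's Messages API."""
    body = {
        "model": "claude-3-5-sonnet-20240620",  # assumed model ID; check current docs
        "max_tokens": 256,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        "https://api.anthropic.com/v1/messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


request = build_messages_request("YOUR_API_KEY", "Summarize this ticket in one sentence.")
# To actually send it: urllib.request.urlopen(request)
```

In n8n, the same pieces (URL, method, headers and JSON body) would be entered as fields of an HTTP request step instead of being written as code.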

Work with vector databases in n8n

If you need access to vector store databases, a range of LangChain nodes are here to help. Use them to seamlessly integrate databases such as Zep, Supabase, Pinecone and Qdrant.

If you're looking for something simpler for fast prototyping, take a look at the In-Memory Vector Store node. It stores embeddings and chunks of data directly on your n8n instance. Zero configuration time!
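Conceptually, a store that keeps embeddings and text chunks in the memory of the running process can be very small. The class below is a toy sketch of that idea, not n8n's node: the class name, method names and 3-dimensional vectors are assumptions, and real embeddings would come from an embedding model.

```python
import math


class InMemoryVectorStore:
    """Keeps (embedding, text) pairs in a list and ranks them by cosine similarity."""

    def __init__(self):
        self._items = []

    def add(self, embedding, text):
        self._items.append((list(embedding), text))

    def search(self, query, k=2):
        scored = [(self._cosine(query, emb), text) for emb, text in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0


store = InMemoryVectorStore()
# Toy 3-dimensional vectors stand in for real embeddings.
store.add([1.0, 0.0, 0.0], "Refund policy")
store.add([0.0, 1.0, 0.0], "Shipping times")
store.add([0.9, 0.1, 0.0], "How to request a refund")
top = store.search([1.0, 0.0, 0.0], k=2)
```

Everything lives in a Python list, so the data disappears when the process stops. That trade-off is acceptable for prototyping and is the main reason to move to a dedicated vector database later.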

Create autonomous AI agents

For advanced use cases, you can even create LLM-powered AI agents with n8n. Read this article to find out more.

Of course, this is not a complete list of use cases. Here are a few more ideas:

- Integrate traditional tools with modern AI models via n8n to optimize business processes;

- Use n8n as a high-level orchestration tool that interacts with all specialized AI platforms while you develop and roll out new AI models.

Wrap Up

In this article, we've looked at 20 powerful AI tools in five categories:

- data & model management;

- LLM & embedding solutions;

- specialized AI services;

- quality assurance & infrastructure;

- workflow orchestration.

While this classification is not official, it shows that the real power of AI in business lies in combining tools from several categories.

This is where n8n shines, offering enterprises a platform for orchestrating these AI tools alongside existing business processes.

What’s next?

It's time to put this knowledge into action with n8n. Start by exploring our AI-powered workflow templates.

For a deeper dive into AI automation, check out our past articles on LLM-powered AI Agents or 11 best coding assistants.

For a more casual reading, refer to our guide on AI chatbots (and create your own in n8n without programming!).

Whether you're new to AI or looking to scale your existing solutions, n8n provides the flexibility and power to bring your AI workflows to life.