CSV importing to Google Sheets is an invaluable asset for technical professionals seeking efficient data management solutions.

It allows for automating data pipelines, performing ETL (Extract, Transform, Load) processes, conducting in-depth data analysis, and implementing custom scripting.

The challenge, however, lies in the manual effort CSV to Google Sheets import often requires, especially when dealing with large or multiple files, or diverse external systems.

In this step-by-step tutorial, we present 3 methods to import CSV into Google Sheets depending on the complexity of import:

- For robust import, we'll kick off with the n8n method, focusing on automatic CSV import into Google Sheets. We'll explore the process of importing multiple CSV files and importing CSV from a URL in Google Sheets.

- For small-scale one-time tasks, we'll show the manual import of a CSV into Google Sheets using Google's own interface.

- To top it all off, we'll demonstrate how to import a CSV file into Google Sheets via a Python script for those who still prefer to program everything from scratch.

So, let’s roll up our sleeves and start automating!

1. Import CSV to Google Sheets automatically via n8n

Before diving into the process of importing CSV files, we first need to create some. For this purpose, we've prepared a simple workflow using n8n.

Our mock-up workflow creates multiple CSV files with lists of users and their respective subscription statuses. There are several approaches to creating simulated data, we used GPT-4 API with a one-shot prompting technique:

In real-world situations, you may receive similar CSV files from a Content Management System (CMS) or a payment provider. Loading these into Google Sheets can help your team members to analyze and prepare reports. For example, reports on monthly active subscribers.

Method 1.1. How do I import multiple CSV files into Google Sheets?

Let's now use the CSV files from the mock-up workflow and import multiple CSV files with subscribers to Google Sheets.

This method is useful when the external system doesn’t have a direct connection to the Google Sheets. Automating CSV imports can save loads of time, facilitate data cleaning and pre-processing, and also prevent human errors.

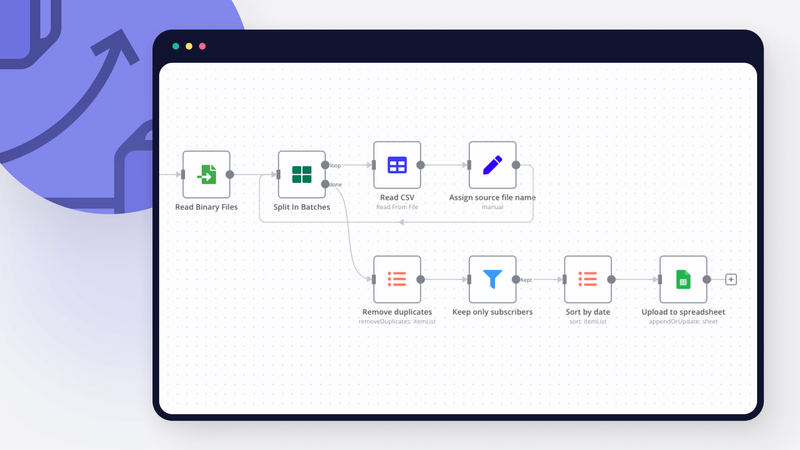

You can grab multiple files and perform pre-processing in a few steps:

- Start with the Read Binary Files node to read files from the host machine that runs n8n. The File Selector uses the wildcard

*.csv. This ensures that all CSV files from the./.n8n/folder are loaded. - Next, add the Split In Batches node, which processes one binary data at a time. Within this cycle, the Spreadsheet File node converts the CSV binary data into a JSON table.

- Use a Set node to add a new Source variable that contains the original CSV file name. To do this, simply add a new String variable and enter the expression:

{{ $('Split In Batches').item.binary.data.fileName }}. - Once the cycle is complete, the Done output of the Split In Batches node will contain records from all three files:

Let's now use n8n's capabilities to clean and filter data. This functionality is important during the ETL process.

- Continue building the workflow by using the ItemLists node to remove duplicates. Select a corresponding item in the Operation drop-down list. Pick Selected Fields in the Compare drop-down and enter

user_name. In our mock example, there are some matching users marked in yellow, so the number of output items is slightly smaller. - Use a Filter node to exclude non-subscribers. Add a new String condition and enter

{{ $json.subscribed }}as Value 1. Select the Equal Operation and enter TRUE for Value 2. Execute the node to keep subscriber records and discard the rest.

- Before we upload the table to Google Sheets, let’s sort the users by their subscription date. Add another ItemLists node. Select Sort Operation, the sort type should be Simple. Enter date_subscribed into Fields To Sort By. Ascending Order will be selected automatically.

- Once the pre-processing is complete, you can import the subscribers into Google Sheets. First, create a new spreadsheet document, add a new empty sheet and enter table headers on the first row:

| user_name | user_email | subscribed | date_subscribed | Source |

|---|

- Coming back to n8n, add a Google Sheets node, provide credentials and the spreadsheet URL. Choose Append or Update Row Operation and select a sheet name from the drop-down list. If the JSON keys match the spreadsheet columns exactly, the records can be mapped automatically.

Finally, hit Execute workflow to import multiple CSV files into Google Sheets.

Method 1.2. How do I import CSV from a URL into Google Sheets?

In this example, we`ll show how to automatically upload a CSV file from a URL.

This method is useful when sourcing data from public databases or when an external system can export to CSV but cannot update Google Sheets directly.

Many public data sources provide CSV datasets. We will use weekly data on COVID-19 testing in Europe. This CSV file is freely available and is updated regularly.

- First, add an HTTP request node and make a GET request to the following address: https://opendata.ecdc.europa.eu/covid19/testing/csv/data.csv. Since we expect a file in return, add a Response option and select File as the response format.

- After downloading the CSV file, add a Spreadsheet File node, choose Read From File Operation and CSV as the File Format. Also add an Option called Header Row and make sure it's activated.

- Next, let’s add an additional variable. The dataset is currently missing a field with unique values. Add a Set node and create a new string variable called unique_key with this expression as the Value:

{{ $json.country_code }}-{{ $json.year_week }}. This operation concatenates the country code with the year-week value to generate a variable with unique records.

- This COVID-19 table is quite long, and Google Sheets has API limitations. Although it's possible to add records via Split In Batches node with ample pauses, we will limit the number of records for this example. Add a new Filter node and configure it as shown in the screenshot. First, add a Boolean condition. In the Value 1 enter an expression:

{{ ['DE', 'AT', 'CH'].includes($json.country_code )}}

This JavaScript one-liner checks whether the country code matches any of the DACH countries (Germany, Austria or Switzerland). The second string condition checks if the year-week value starts with 2023. This filter successfully reduces the number of records from over 5000 to a few dozen.

- The final step is to import this CSV subset into Google Sheets. Add a new sheet to the Google spreadsheet document. In this empty sheet, provide only a unique column name (unique_key) in A1 cell. The Google Sheets node will do the rest during the initial import.

- Finally, create a new Google Sheets node in n8n (or copy-paste it from the previous example). Use the same Append or Update Row Operation and the same Document URL. Select a newly created sheet from the drop-down list and pick automatic Mapping Column Mode. You can also select the Cell Format approach (either strictly as in n8n or let Google decide) by adding the corresponding option.

After executing the workflow, you’ll see the following result:

That’s it! We've successfully automated the import of CSV files into Google Sheets without any coding via n8n. You can go even further and:

- Run this workflow on a regular (i.e. weekly) basis via Schedule trigger;

- Compare datasets from a previous run and send notifications about any unusual changes, thus preventing data quality issues and reducing manual labor;

- Import CSV into various destinations such as Slack, BigQuery and so on. You can even import one CSV file into several places in one go.

2. Import CSV files into Google Sheets using Google's own interface

Let’s now explore alternative methods to the one discussed earlier, starting with a manual import via the native Google Sheets interface. This interface is both powerful and very intuitive, allowing for simple import of the CSV files.

However, users need to perform all actions manually, such as pre-processing, data cleaning, formatting, etc. So the overall complexity quickly adds up.

There's also an automated way to accomplish this task by creating a plugin with Google App Script. Still, using App Script can be tedious and requires some training.

Method 2.1. From the Google Spreadsheet interface

This process is probably the most straightforward and familiar:

- First, create a new blank spreadsheet.

- Then, in the new window, select File → Import and choose the tab. You can either import a CSV from a file that already exists on Google Drive or upload a local file.

- Once you've uploaded one of the CSV files, a new window will appear:

- Here you can select the Import location: create a new spreadsheet, add a sheet to an existing file, append to the current sheet and several others. Google Sheets supports files with different separator types (i.e. tab, comma, custom symbol). By default, it tries to detect the separator symbol automatically.

- After you select the desired option, click Import data. In a few moments the data will be displayed:

Method 2.2. From the Google Drive interface

Another way to open a CSV in Google Sheets is via the Google Drive interface.

- In this case, upload a new CSV file to Drive. Right-click the file and then select Open with → Google Sheets.

A new spreadsheet will be created with the content of this CSV file.

- You can go even further and automate the conversion via the settings menu.

- Click on the ⚙️ in the upper right part of the Google Drive interface;

- In the General settings, scroll down to Uploads and activate the “Convert uploads to Google Docs editor format” tick box.

- Now every time you upload a file with a commonly supported format, it will be converted automatically. CSV files are converted to Google Sheets format. However, these conversions do not support complex logic. They merely convert the raw CSV files to Google Sheets format.

3. Import CSV to Google Sheets via Python

In this section, we'll explain how to import local CSV files into Google Sheets using a more popular Python language.

Python allows users to automatically import CSV files into Google Sheets. There are libraries that facilitate many ETL steps, such as data cleaning or importing CSV from a URL.

However, any complex logic requires substantial knowledge. With n8n, for example, even non-programmers can perform many steps themselves thanks to a variety of convenient nodes.

Step 1. Obtain Google Service Account Credentials

The process of acquiring credentials for your Python script involves several steps. That’s why we created a tutorial within the tutorial for this 🙂.

In essence, the steps are as follows:

- Access the Google Cloud Console;

- Create a new Project;

- Enable GoogleSheet APIs;

- Create credentials for a Service Account;

- Download a JSON file with API keys;

- Lastly, don't forget to share the Google Sheets document with the newly created Service Account.

Step 2. Write a Python Script

Once you've created a Service Account and have a JSON file with your API key, it's time to write a small Python script to upload a CSV file to Google Sheets.

First, make sure you have the necessary Python libraries installed:

pip install gspread

pip install oauth2clientThen, create a .py file with the following content:

import gspread

from oauth2client.service_account import ServiceAccountCredentials

scope = ["https://spreadsheets.google.com/feeds", 'https://www.googleapis.com/auth/spreadsheets',

"https://www.googleapis.com/auth/drive.file", "https://www.googleapis.com/auth/drive"]

credentials = ServiceAccountCredentials.from_json_keyfile_name('credentials.json', scope)

client = gspread.authorize(credentials)

spreadsheet = client.open('Import CSV examples')

with open('filename.csv', 'r') as file_obj:

content = file_obj.read()

client.import_csv(spreadsheet.id, data=content)This short script does four things:

- It prepares the Service Account credentials using the JSON file and several scopes for Google Sheets and Google API;

- Then it authorizes these credentials;

- Next, it opens the spreadsheet file (don't forget to share this Google Sheet with the Service Account);

- Finally, It uploads the content of the local CSV file to Google Sheets. By default, all existing sheets will be replaced by the new one. More complex operations, such as appending data to an existing sheet or adding a new one, require additional coding.

Step 3. Execute the Script

Before you run the script, make sure you do the following:

- Replace

credentials.jsonwith the real path to your JSON keys; - Replace

Import CSV exampleswith the name of your Google Spreadsheet; - Replace

filename.csvwith the path to the local CSV file you want to import.

Run the script after you've performed these steps. If you did everything correctly, you should see the changes in your Google Sheets document. If you encounter any errors, check the Python log messages as they can provide useful hints for troubleshooting.

Wrap Up

In this tutorial, we've explored various methods to import a CSV file into Google Sheets:

- With n8n, you can automate the entire process and import files from the disk, from a URL, and from numerous other sources without any coding. On top of that, with n8n you can seamlessly add pre-processing and data-cleaning steps;

- While the native Google interface offers easy import, the process is completely manual but still a good choice for a one-time import of relatively small data;

- You can also import CSV files programmatically using Google App Script or common programming languages such as Python. Despite the simplicity offered by ready-made libraries, this method requires more expertise when the import logic becomes complex.

What’s next?

n8n natively supports multiple format conversions. Take a look at some examples:

Whether you work alone, in a small team, or in a large enterprise with n8n, your options are not limited. Hundreds of systems can be integrated via built-in nodes, making these integrations accessible to all n8n users.

Choose from our cloud plans to get started right away, or explore the powerful features of the Enterprise edition, trusted by many large corporations.

Share your thoughts, experiences, and questions in our Community forum.